Buildings sometimes seem as if they’ve been left behind by technological progress. Many, perhaps most, of the systems most buildings are built from (drywall, trusses, bricks, concrete) have been in use for decades, if not centuries. Changes that do occur tend to be evolutionary, rather than revolutionary, and mostly don’t appear to impact the actual capabilities of the building itself, especially for residential construction. “Take out anything with a screen in your living room”, the critique goes, “there’s no way to tell if your house was built in the 2010s or the 1970s.”

There’s a sense in which this critique is true. But viewed from another angle, we’re in the middle of a huge rethinking of the job a building is expected to perform. Increasingly, there’s a desire to create a comfortable indoor climate while simultaneously minimizing a building’s energy consumption. To achieve both, builders are designing buildings to be sealed bubbles of air, where any sort of flow across the outside boundary (air, water, energy) is minimized and controlled.

It may not seem like a huge deal, but treating a building this way changes how it works, and requires a departure from how buildings have traditionally gone together. To understand this change, we need to look back at the way that managing the climate of a building has changed over time. So let’s take a look at the last 250 years of indoor climate control in the US [0]. As we’ll see, trying to manipulate indoor climate has always been closely tied with being able to do it efficiently.

(The focus will be on residential construction, but should apply to buildings more generally.)

Heating

Up through the late 1700s, most homes in the US were heated via large wood-burning fireplaces constructed from brick or stone and built into the structure of the house itself. This was a heating tradition brought from England, and while it performed its basic task of providing warmth, it left a lot to be desired. An open fire provided ample heat as long as you were standing directly in front of it, but did a poor job of heating the house itself - most of the heated air escaped up through the chimney, pulling cold air into the house from outside. One New York physician in the 1720s described rising in the morning to find a bottle of wine that, despite being in a room with an open fire all night, had frozen solid.

Wood-burning fireplaces also required large amounts of firewood to keep them fed (partially because early US colonies had a colder climate than England.) A typical colonial home might require 10-15 cords of wood to make it through the winter, with a skilled woodcutter being able to chop perhaps 1 cord a day (a cord of wood is 128 cubic feet - 15 cords is 1,920 cubic feet, roughly a cube of wood 12.4 feet on a side.) America’s large forests initially made firewood easy, if time-consuming, to secure, but as cities grew and easily accessible sources of firewood were exhausted, obtaining it became a larger and larger issue. In 1744 Ben Franklin wrote:

“Wood, our common [fuel], which within these 100 years might be had at every man’s door, must now be fetch’d near 100 miles to some towns, and makes a very considerable article in the expense of families.”
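As a quick check on the cordwood figures above, here is a small Python sketch. It assumes the standard cord dimensions of 4 ft x 4 ft x 8 ft and the rate of roughly 1 cord chopped per day mentioned above; the helper names are illustrative only. The 15-cord upper estimate works out to 1,920 cubic feet, a cube a little over 12 feet on a side.

```python
# Illustrative check of colonial firewood arithmetic.
# Assumption: a standard cord is a stack 4 ft x 4 ft x 8 ft.
CORD_CUBIC_FEET = 4 * 4 * 8  # 128 cubic feet per cord


def winter_wood_volume(cords: float) -> float:
    """Total volume in cubic feet for a given number of cords."""
    return cords * CORD_CUBIC_FEET


def cube_side_feet(volume_cubic_feet: float) -> float:
    """Side length of a cube holding the given volume."""
    return volume_cubic_feet ** (1 / 3)


def days_of_chopping(cords: float, cords_per_day: float = 1.0) -> float:
    """Days of work for a woodcutter producing `cords_per_day` (assumed ~1)."""
    return cords / cords_per_day


# Compare the low and high ends of the 10-15 cord winter estimate.
for cords in (10, 15):
    volume = winter_wood_volume(cords)
    print(
        f"{cords} cords = {volume:.0f} cu ft, "
        f"a cube about {cube_side_feet(volume):.1f} ft on a side, "
        f"about {days_of_chopping(cords):.0f} days of chopping"
    )
```

At one cord per day, even the low estimate represents well over a week of skilled labor before the wood is hauled, stacked, and dried.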

Firewood got more and more expensive - it was, on average, 3 times as expensive in 1800 as it was in 1754, and an especially cold winter would drive that price even higher. The price of firewood in Philadelphia in the winter of 1805 was double what it had been in 1804.

Difficulty obtaining it also meant firewood shortages were common. Boston reported fuel shortages as early as the 1630s, and during the Revolution wood shortages forced residents to burn horse dung to keep warm. Even affluent residents weren’t immune, with the staff of Harvard bemoaning “the great scarcity of wood in the College.” In an attempt to prevent wood fraud (such as selling cords that weren’t quite full cords) and secure a steady supply, the cordwood market was regulated early on. By the 1760s both New York and Philadelphia had cordwood inspectors, and obtaining firewood was an important focus for many colonial officials.

There were many attempts to address this by developing a more efficient fireplace. The most famous of these is undoubtedly the Franklin stove, but there were many others - the Rittenhouse stove (a Franklin stove descendant), the Rumford fireplace, etc. But these were expensive, complex, offered relatively minor improvements, and saw comparatively little adoption.

Ultimately, the technology that would replace the fireplace as the method for home heating was the cast-iron stove. A stove delivered a much greater fraction of its heat into the house than a fireplace, and was much cheaper to install than something like a Franklin stove or a Rumford fireplace.

Cast iron stoves had long been used as heat sources in Northern Europe, and were being manufactured in the US by the 1700s. But they remained relatively uncommon - one study of pre-Revolution New York and New England homes found that only 3% had an iron stove.

Partially this was due to cultural preferences favoring open fires over closed stoves. But the bigger factor was distribution costs. Early iron foundries were located in remote areas near sources of iron and wood, and anything they produced had to be moved over land by horse and cart. This increased the cost and limited the distribution of large, heavy stoves, and they remained a luxury item.

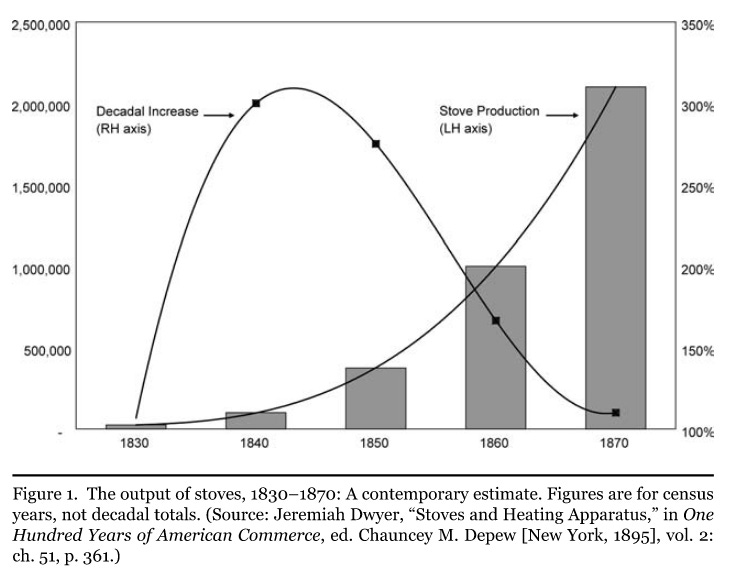

As the US’s transportation network evolved, this constraint relaxed. The construction of canals, turnpikes, and railroad networks made it easier to transport large, heavy goods, which in turn allowed centralization of manufacturing, enabling economies of scale and lowering costs even more. Between 1810 and 1860, the cost of transporting bulky goods over land dropped by 95%, and the cost of stoves dropped by 50% or more. By 1860, an estimated 1 million stoves per year were being sold.

Though they were more efficient than a fireplace, early stoves still burned wood. Coal was available (the first coal was mined in the US as early as 1701), but similar logistical issues made it difficult and costly to transport, limiting its use as a heating fuel. Improving transportation networks made coal increasingly available until it became cheaper than wood, and coal-fired stoves replaced wood stoves as the main method of home heating. By 1860, 90% of New York’s and 85% of Philadelphia’s home heating was powered by coal.

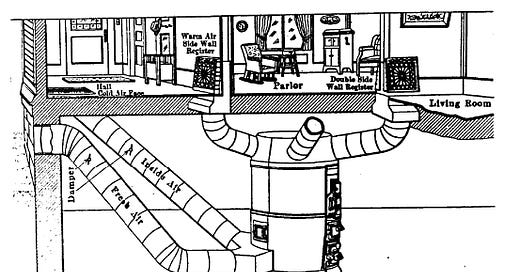

However, stoves remained limited by the fact that they were a point heat source. The next stage in the evolution of heating systems was the development of centralized systems, where a central furnace (burning coal or some other fuel) would carry heat where it was needed via some medium (air, hot water, or steam) through a series of ducts or pipes.

As with stoves, widespread adoption of central systems lagged well behind the technology. Central systems appeared in the US as early as 1808, but didn’t see substantial adoption until the 1840s. Initial central systems were limited to large commercial installations (factories, apartment buildings, etc.), but by the late 1800s the technology started to diffuse into single-family homes. But adoption was slow - by 1940 only 42% of homes had a central heating system.

Initially, steam, hot water, and air-based central systems were all popular - hot-air furnaces were less expensive, but steam and hot water heat were more effective. Among homes that had a central system in 1940, it was roughly 50/50 between steam/hot water and hot air systems. But this split varied by geography. In New England, where hot air systems often had trouble coping with the extreme cold, 60% of dwelling units had some sort of steam or hot water heat as late as 1970, compared to only 25% having warm air heat (in the Midwest, these percentages were reversed.)

The development of forced air systems (where a fan is added to a hot air furnace to drive the air where it’s needed) eventually changed this calculus, as forced air systems were simpler and less expensive than hot water or steam heat. Forced air systems were first developed in the early 1900s, and became popular during WWII (partially due to the fact that homes built as “defense housing” were limited to air systems, as they used less metal than steam or hot water heat.) Today over 90% of new homes have some type of forced air system (either a furnace or a heat pump), with only 3% having some type of steam or hot water heat.

Coal remained popular as a heating fuel well into the 20th century - as of 1940, more than 50% of homes were heated by coal. But this rapidly changed after WWII - by 1973, less than 1% of homes were coal-heated, instead being largely heated by gas (56%), fuel oil (22%), or electricity (9%). These remain popular fuel choices today, though electricity continues to gain popularity as a heating source (currently around 40% of homes are heated with electricity.)

Cooling

Cooling a building was much more difficult than warming it, and the technology to do it lagged heating technology. Cooling had traditionally been accomplished by what we might call “vernacular” methods - using large eaves to block the sun from windows, building walls from mass masonry to create a “thermal lag” that would delay the inside of a building from warming up, arranging windows or other openings to encourage cross drafts, etc.

In the mid 1800s, the technology for mechanical refrigeration was developed. Initially this was largely used to manufacture ice, and though there were some efforts to use refrigeration to cool buildings directly (such as the St. Louis Ice Palace), most early efforts at cooling systems were based around blowing air over ice [1]. These were expensive, of limited effectiveness, and mostly limited to buildings like theaters (which tended to have extreme difficulty maintaining a comfortable temperature due to the lack of windows and the large mass of people in them.)

“Air conditioning” systems capable of mechanically cooling a building started to appear in the US at the beginning of the 20th century - Alfred Wolf designed one of the first HVAC systems capable of mechanically cooling the air for the New York Stock Exchange in 1903. However, in the early days it was mostly an industrial technology, used for creating controlled conditions inside a factory. And it was as much concerned with manipulating humidity as it was temperature - in 1911 Willis Carrier described air conditioning as “the artificial regulation of atmospheric moisture.” Many industrial processes worked best at a particular temperature or humidity - for instance, multicolor printing worked best at low humidity, but textile production worked best at high humidity. By manipulating the environmental conditions of the factory, air conditioning could improve yield, increase the time when the factory could produce, improve worker conditions, and make it possible to build factories in locations that previously wouldn’t have been sustainable (such as the South.) Between 1916 and 1925 Carrier sold more than $1 million worth of air conditioning systems to manufacturers of chocolate, chewing gum, and hard candy, and producers of textiles, tobacco, sausage, black powder, and flour also made use of air conditioning systems to improve their efficiency.

The burgeoning movie theater industry became the second primary customer for air conditioning, and the place that most people first encountered it. By 1914, there were an estimated 14,000 movie theaters in the US, attended by an estimated 26 million people. Like traditional theaters, movie theaters tended to become excessively hot and uncomfortable due to the lack of windows and the concentration of people - theaters were often forced to shut down in the summer months because they became too uncomfortable. Competition between them (as well as laws requiring a minimum amount of mechanical ventilation) caused theaters to start installing air conditioning systems - even a relatively small bump in theater occupancy could justify the expense of installing one.

As with central heating, air conditioning technology gradually spread from large commercial installations to individual homes. However, prior to WWII it remained niche - by 1938, only 0.25% of homes had an air conditioning system. Air conditioning systems were expensive, and needed to be custom designed to match the building characteristics of the house they were being installed in (a war building scientists are still fighting today.) To try to increase adoption (incentivized in part by electric utilities looking for ways to encourage more electricity use), manufacturers developed simpler systems that were easier to mass-produce and install, such as the window unit, which first appeared in the early 1930s [2].

As these simpler systems became more successful, the industry retreated from the idea that air conditioning should manipulate humidity. The early humidity-focused installations included an “air washer”, a complex piece of equipment which sprayed down the incoming air with water. While this gave the ability to precisely manipulate humidity, it added expense, and required plumbing and sewer connections. Removing it made residential air conditioning equipment simpler and less expensive, but also removed the ability to precisely control humidity (something consumers never seemed to think was very important anyway.) Home air conditioning became a way to inject cool air into the house, rather than a system for carefully controlling the indoor air conditions.

It was the post-WWII building boom that saw air conditioning dramatically increase in popularity. Returning soldiers and the end of wartime rationing, combined with many years of low building volume during the Depression, released a huge pent-up demand for houses. Huge tract builders built enormous developments, sometimes thousands of homes at a time. This scale of building ruled out many vernacular methods of cooling - rather than try to adapt the house to the specific site, builders produced huge numbers of identical houses, clearing the site of trees and landscaping that might have provided shade. The inclusion of large picture windows (which buyers favored), the increased heat load from consumer appliances, and the popularity of lightweight construction meant that, increasingly, new homes required air conditioning to be habitable at all.

And because air conditioning was expensive, including it encouraged moving even further away from vernacular cooling methods. Builders would remove things like large eaves, attic fans, corners that encouraged cross-breezes, and window screens (in some cases, windows wouldn’t be openable at all) to try to defray the costs of the air conditioner, with the idea that the air conditioner could fully supply any cooling needs [3]. And because the builder of the home was generally not the owner, builders often skimped on things such as insulation, which reduced their upfront costs but increased the owner’s operating costs.

(Similar trends occurred in office buildings, with the rise of the International Style of skyscrapers, which turned the building into a huge slab of glass requiring climate control to make it habitable.)

By 1960, 1 in 8 homes in the US had an air conditioner (though only 1 in 50 homes had central air.) By 1970 this had risen to 1 in 2 homes, and in cities such as Dallas, Austin, and Washington DC, 40% or more of homes had a central air system (indicating that virtually every new home was now being built with one.) Today, air conditioning is in around 90% of US homes.

This will conclude with Part II next week!


Construction Physics is produced in partnership with the Institute for Progress, a Washington, DC-based think tank. You can learn more about their work by visiting their website.

You can also contact me on Twitter, LinkedIn, or by email: briancpotter@gmail.com

I’m also available for consulting work.

[0] - Other countries will of course have different climate control traditions.

[1] - Traces of this history remain with us in the form of measuring air conditioner capacity in “tons”, which is the cooling provided by a ton of ice melting in a 24-hour period.

[2] - Home cooling thus developed in the reverse order from home heating - starting with large, central systems and eventually moving to point sources.

[3] - Modern building scientists now apply this same strategy in reverse - by adding expense to other parts of the building (increased insulation, higher-performing windows, etc.) they can reduce the cost of the HVAC system.
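To make footnote [1] concrete, here is a short Python sketch of where the “ton” rating comes from. It assumes the conventional values behind the US definition: a 2,000 lb short ton of ice and a latent heat of fusion of 144 BTU per pound. The function names are illustrative, not from any library.

```python
# Illustrative sketch: deriving the "ton" of air conditioning capacity.
# Assumptions: 2,000 lb short ton, 144 BTU/lb latent heat of fusion for ice
# (the conventional values used in the US definition of a ton of cooling).
LATENT_HEAT_BTU_PER_LB = 144
SHORT_TON_LB = 2000
HOURS_PER_DAY = 24


def ton_of_refrigeration_btu_per_hour() -> float:
    """Heat absorbed per hour by a ton of ice melting over 24 hours."""
    btu_per_day = SHORT_TON_LB * LATENT_HEAT_BTU_PER_LB  # 288,000 BTU per day
    return btu_per_day / HOURS_PER_DAY


def tons_to_btu_per_hour(tons: float) -> float:
    """Convert an air conditioner rating in tons to BTU per hour."""
    return tons * ton_of_refrigeration_btu_per_hour()


print(ton_of_refrigeration_btu_per_hour())  # 12000.0
print(tons_to_btu_per_hour(3))  # 36000.0 for a hypothetical 3-ton unit
```

This is why one ton of cooling capacity corresponds to 12,000 BTU per hour: the unit describes a steady rate of heat removal, not a quantity of ice.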

Sources:

“Air Conditioning America” by Gail Cooper

“Cool” by Salvatore Basile

“Home Fires” by Sean Patrick Adams

“Keeping Warm in New England”, Doctoral thesis by David Brown

Census data
