The Long Road to Fiber Optics

Over the past six decades, advances in computers and microprocessors have completely reshaped our world. Thanks to Moore’s Law — the observation that the number of transistors on a microchip doubles every two years — computers have become immensely powerful, and have found their way into nearly every facet of our lives. But this progress has required more than Moore’s Law and advances in microprocessors. Taking advantage of all this computational capacity has required advances in a whole host of associated technologies, from batteries, to displays, to various storage media.

One such technology is fiber optics, bundles of impossibly clear glass threads that carry information sent via pulses of light. Fiber optic cables have become a key part of internet infrastructure, and are used in large “backbone” data lines that span the country, connections to homes and businesses for high-speed internet access, and the submarine cables that carry data from continent to continent. Fiber optic cables also wire together computers inside data centers, and very high-capacity fiber-optic lines may allow us to bypass (to some extent) the AI energy bottleneck by allowing for highly distributed training runs of AI models.

The path to fiber optics was long and meandering. It took a broad range of technological advances across many different fields, and for many years the technology did not appear particularly promising. Many, perhaps most, experts felt that other technologies, like millimeter waveguides, were a better bet for the next step in telecommunications. And even once fiber optics seemed possible, it took over a decade to go from the early development efforts to the first field trials, and several more years before it was used for major commercial installations. Unpacking how fiber optic technology came about can help us better understand the nature of technological progress, and the difficulty of predicting its path.

Bending light

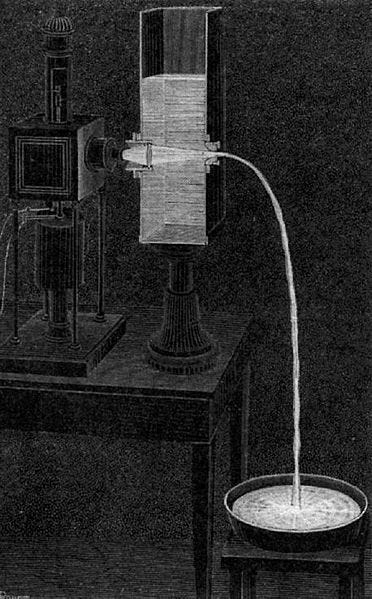

The fact that light can be guided along the path of a transparent material has been known since at least the middle of the 19th century. In 1841, Swiss professor Daniel Colladon directed light through a jet of water as part of a demonstration of fluid flow, and found that the light would follow the path of the water. Around the same time, French optics specialist Jacques Babinet demonstrated the same effect in curved glass rods. By the 1850s, the Paris Opera was using Colladon’s light-bending tricks for special effects, and in the 1880s the effect was used in the design of large, luminous fountains.

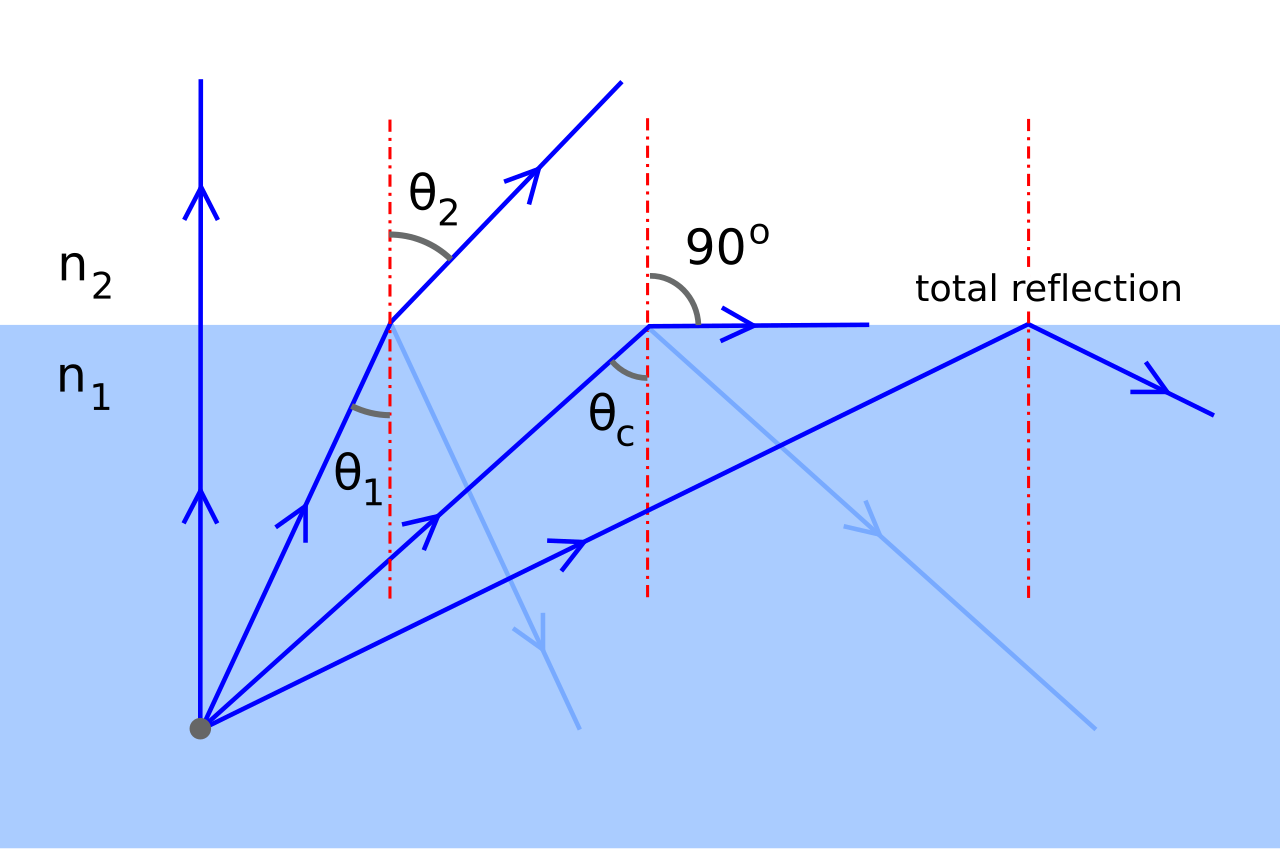

The phenomenon at work in these demonstrations is known as total internal reflection. When light passes at an angle from one transparent material into another with a different index of refraction (meaning that light travels at a different speed in each material), its path is bent. But if the light is traveling from a material with a higher index into one with a lower index, and it strikes the boundary at a shallow enough angle, it is reflected off the surface instead of passing through, staying within the material. The cutoff, known as the critical angle, depends on the ratio of the two indices: the bigger the difference between them, the wider the range of angles that stay trapped. Total internal reflection is why diamond, with its high index of refraction, sparkles when cut properly.
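
To make the critical angle concrete, here is a minimal Python sketch based on Snell’s law, in which the critical angle is arcsin(n_outside / n_inside). Angles are measured from the line perpendicular to the surface, so a ray that is “shallow” relative to the surface has a large angle here. The refractive indices are rounded values assumed for illustration, and the function names are hypothetical.

```python
import math


def critical_angle_deg(n_inside: float, n_outside: float) -> float:
    """Critical angle, measured from the surface normal, for light travelling
    from a medium with index n_inside into one with index n_outside."""
    if n_inside <= n_outside:
        # Going into an equal or higher-index medium there is always a transmitted ray.
        raise ValueError("total internal reflection needs n_inside > n_outside")
    return math.degrees(math.asin(n_outside / n_inside))


def is_totally_reflected(angle_deg: float, n_inside: float, n_outside: float) -> bool:
    """True if a ray striking the boundary at angle_deg (from the normal) stays inside."""
    if n_inside <= n_outside:
        return False
    return angle_deg > critical_angle_deg(n_inside, n_outside)


# Rounded refractive indices, assumed for illustration.
AIR, WATER, GLASS, DIAMOND = 1.00, 1.33, 1.50, 2.42

for name, n in [("water", WATER), ("glass", GLASS), ("diamond", DIAMOND)]:
    print(f"{name} to air: critical angle {critical_angle_deg(n, AIR):.1f} degrees")
```

With these values the critical angles come out to roughly 48.8 degrees for water, 41.8 for glass and 24.4 for diamond. Diamond’s small critical angle means a large share of the light entering a well-cut stone is trapped and bounced around inside before it escapes.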

Turning this curiosity of nature into a modern communications system required advances along two tracks: glass fibers that could carry light, and the source of light itself. It took many innovations, achieved over decades, to create fiber optics technology and make it reliable, robust, and inexpensive enough to be practical.

Glass fibers and imaging

Glass fibers were created by the Egyptians as early as 1600 BC, and by the 1870s it was possible to make glass fiber threads thinner than silk that could be woven into garments. These fibers were very fragile. But in 1887, physicist Charles Boys made long, exceptionally strong fibers of glass by heating a glass rod and attaching the molten end to a crossbow bolt, which he then fired.

The first use of glass fibers to transmit images came in the early 20th century. At the time, there was no good way for physicians to look into a patient’s stomach: early gastroscopes were straight and rigid, and trying to put one down a patient’s throat was often catastrophic (one doctor said gastroscopes were “one of the most lethal instruments in the surgeon’s armamentarium”). These early devices were later improved to allow them to flex up to 30 degrees, but they still worked poorly and were exceptionally uncomfortable for the patient. What was needed was a flexible tube that could carry an image along its length. In 1930, medical student Heinrich Lamm used bundles of glass fibers to build the first flexible gastroscope. But shortly afterward, Lamm fled Germany to escape the rise of Hitler, and never developed his idea further. In 1952, physicist Harold Hopkins independently developed his own gastroscope made from bundles of glass fibers, successfully viewing the letters “GLAS” through it.

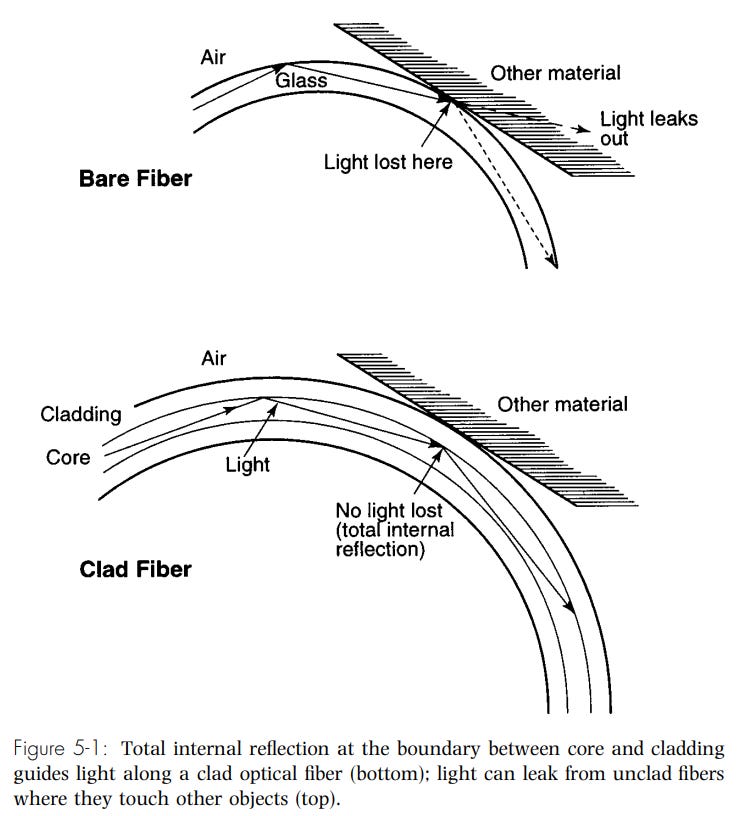

One problem with Lamm’s and Hopkins’s gastroscopes was that light would leak between fibers wherever they touched, spoiling the image being transmitted. Coating the fibers with a reflective material failed to solve the problem; the reflective surface absorbed a small amount of light at each bounce, and after enough reflections almost all the light would be absorbed. But in the early 1950s, researchers Brian O’Brien and Abraham van Heel, working on building a flexible periscope for submarines, came up with the idea of cladding the glass fibers with another transparent material with a lower index of refraction, so that total internal reflection would keep the light inside each fiber even where fibers touched. By 1957, a flexible gastroscope made from clad glass fibers had been patented and used successfully, and by the late 1960s fiber-optic gastroscopes had almost completely taken over the market.
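
Cladding works because what matters is the index step between a fiber’s core and whatever surrounds it. A common way to summarize a clad fiber is its numerical aperture, NA = sqrt(n_core² − n_clad²), which sets the widest cone of light the fiber end will accept and still trap. Below is a minimal Python sketch assuming an idealized step-index fiber; the index values are hypothetical, chosen only to show a small core/cladding difference.

```python
import math


def numerical_aperture(n_core: float, n_clad: float) -> float:
    """NA of an idealized step-index fiber: sqrt(n_core^2 - n_clad^2)."""
    if n_clad >= n_core:
        raise ValueError("light is only guided when the core index exceeds the cladding index")
    return math.sqrt(n_core**2 - n_clad**2)


def acceptance_half_angle_deg(n_core: float, n_clad: float, n_outside: float = 1.0) -> float:
    """Largest angle (from the fiber axis) at which light entering the end face from
    a medium of index n_outside is still trapped by total internal reflection."""
    sin_theta = numerical_aperture(n_core, n_clad) / n_outside
    # If NA >= n_outside, every ray that enters the face is guided.
    return math.degrees(math.asin(min(sin_theta, 1.0)))


# Hypothetical core and cladding indices, for illustration only.
CORE, CLADDING = 1.48, 1.46
print(f"NA = {numerical_aperture(CORE, CLADDING):.3f}")
print(f"acceptance half-angle = {acceptance_half_angle_deg(CORE, CLADDING):.1f} degrees")
```

With these numbers the fiber accepts light up to about 14 degrees off its axis. The error branch captures the problem with bare fibers: where two identical unclad fibers touch, the glass on both sides of the contact has the same index, so there is no step to reflect from and light crosses over freely.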

Coherent light

As fiber optic gastroscopes were being developed, other researchers were considering the future of telecommunications infrastructure. Since its invention in the late 19th century, radio technology had slowly marched up the electromagnetic spectrum, taking advantage of shorter and shorter wavelengths: from radio waves tens of meters long in the 1920s, to meters long by the 1930s, to centimeters long in the 1940s. In the 1950s, AT&T was constructing a microwave relay system for transmitting data across the U.S. that used wavelengths around 7 centimeters long, and it was expected that the next advance in communications would use even shorter millimeter waves. Because millimeter waves were blocked by water droplets in the air, the plan was to shield them inside hollow tubes, called waveguides.

The higher the frequency of an electromagnetic signal (and thus the shorter its wavelength), the more data it can carry, because a higher-frequency carrier leaves room for a wider band of frequencies to be modulated onto it. This makes visible light an attractive medium for communications: visible light has wavelengths in the 400 to 700 nanometer range, around 100,000 times shorter than microwaves.
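
The “100,000 times” figure follows from f = c / wavelength. The short Python sketch below converts the 7-centimeter microwave band and the two ends of the visible range into frequencies and compares them. The band edges are the ones given in the text; the variable names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458  # metres per second (exact, by definition)


def frequency_hz(wavelength_m: float) -> float:
    """Frequency of an electromagnetic wave in a vacuum: f = c / wavelength."""
    return SPEED_OF_LIGHT / wavelength_m


microwave_m = 0.07  # ~7 cm, the microwave relay band described in the text
visible_m = {"violet": 400e-9, "red": 700e-9}

print(f"7 cm microwave: {frequency_hz(microwave_m) / 1e9:.2f} GHz")
for colour, wl in visible_m.items():
    ratio = microwave_m / wl
    print(f"{colour} ({wl * 1e9:.0f} nm): {frequency_hz(wl) / 1e12:.0f} THz, "
          f"wavelength about {ratio:,.0f} times shorter than 7 cm")
```

Microwaves at 7 cm sit around 4 GHz, while visible light sits at hundreds of terahertz, so the wavelength ratio runs from about 100,000 (red) to 175,000 (violet).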

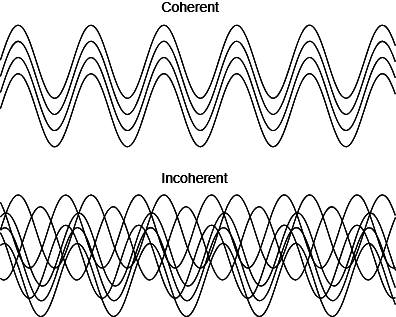

But transmitting an electromagnetic signal effectively required generating coherent waves: waves all at the same frequency and in the same phase. Such a “pure” signal can be used as a carrier wave, which then gets modulated to carry data. Radio systems that generated coherent waves using electronic oscillators had been in use since the early 20th century, but in the 1950s there was no such source of coherent light. Existing light sources, like incandescent bulbs, were “dirty,” generating light in a variety of different wavelengths. In 1951, Bell Labs looked at using optical communications methods, but concluded that without a coherent light source they would be inferior to a millimeter wave system.
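
One of the simplest ways to modulate a carrier is on-off keying: the carrier is switched on for a 1 bit and off for a 0 bit. The Python sketch below shows the idea. The frequencies are toy values scaled far down so the sample counts stay small, and the function and parameter names are hypothetical. It illustrates the general carrier-plus-modulation idea, not any particular system’s design.

```python
import math


def ook_modulate(bits, carrier_hz, bit_rate, sample_rate):
    """On-off keying: emit the carrier during 1 bits and nothing during 0 bits.

    Returns a list of sample values; rates are in cycles, bits, or samples per second.
    """
    samples_per_bit = int(sample_rate // bit_rate)
    samples = []
    for i, bit in enumerate(bits):
        for k in range(samples_per_bit):
            t = (i * samples_per_bit + k) / sample_rate
            carrier = math.cos(2 * math.pi * carrier_hz * t)  # the "pure" carrier wave
            samples.append(carrier if bit else 0.0)           # switched on/off by the data
    return samples


# Toy numbers (hypothetical): a 10 Hz carrier, 1 bit per second, 100 samples per second.
signal = ook_modulate([1, 0, 1, 1], carrier_hz=10.0, bit_rate=1.0, sample_rate=100.0)
print(len(signal), "samples")
```

Each bit becomes a burst of 100 carrier samples or 100 zeros. A receiver only has to detect whether the carrier is present in each bit slot.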

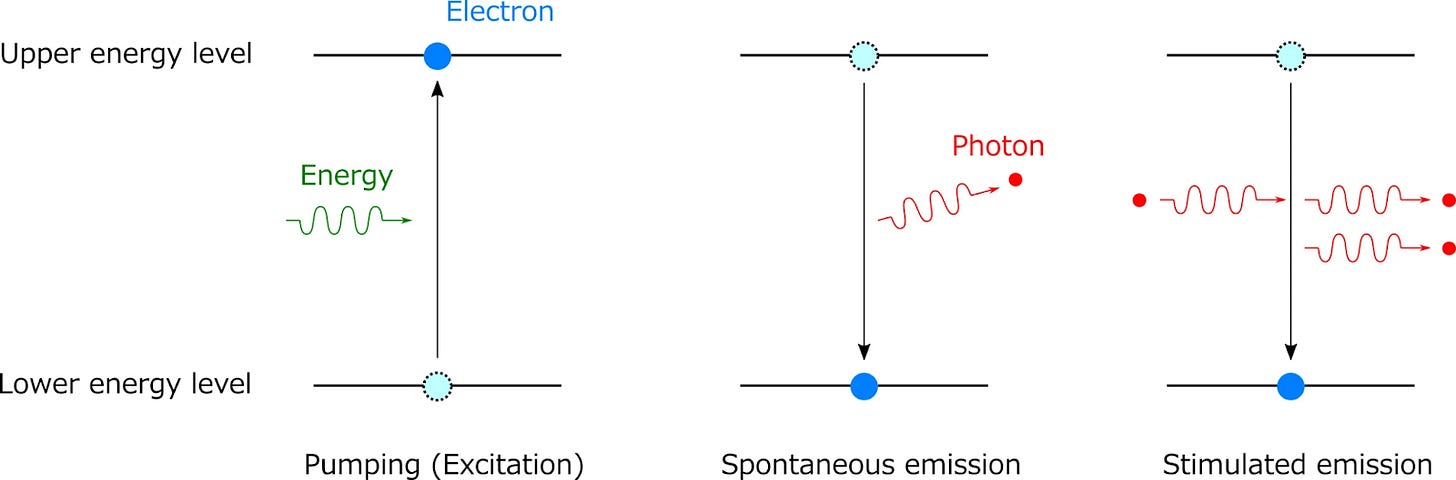

But in 1960 a source of coherent light appeared in the form of the laser. The laser leveraged the phenomenon of stimulated emission. Atoms and molecules have different levels of energy, like different rungs on a ladder. If an atom absorbs energy from a photon (an individual “packet” of electromagnetic energy), it can jump up a rung on the ladder; when it falls back down, it will emit a photon. Normally, this happens spontaneously. But if an atom in an excited state encounters a photon with exactly the energy it would emit by dropping down a rung, that photon can trigger the drop, and the atom emits a second photon identical to the first: two photons leave where one arrived. Create enough excited atoms (more in the upper state than in the lower one, a condition known as a “population inversion”) and you can set off a cascade, as each photon released can trigger still more. Critically, this light will be coherent: all the same wavelength and all in phase.
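
Why the inversion matters can be seen in a simple small-signal model. Stimulated emission adds photons in proportion to the excited population, absorption removes them in proportion to the lower population, and so a weak beam grows as exp(σ(N_upper − N_lower)L). The Python sketch below makes several simplifying assumptions: equal degeneracies for the two levels, a beam weak enough not to deplete the inversion, and no spontaneous emission or other losses. The cross-section and populations are illustrative numbers, not those of any real laser material.

```python
import math


def small_signal_gain(n_upper, n_lower, cross_section_m2, length_m):
    """Factor by which a weak beam's intensity changes after passing through a medium.

    Populations are in atoms per cubic metre. Net gain per metre is
    cross_section * (n_upper - n_lower): positive (amplification) only when
    there is a population inversion, negative (absorption) otherwise.
    Assumes equal level degeneracies, no saturation, and no other losses.
    """
    gain_per_m = cross_section_m2 * (n_upper - n_lower)
    return math.exp(gain_per_m * length_m)


# Illustrative numbers only.
SIGMA = 1e-23   # m^2
LENGTH = 0.1    # m
print("inverted:    ", small_signal_gain(2e23, 1e23, SIGMA, LENGTH))  # > 1: amplification
print("not inverted:", small_signal_gain(1e23, 2e23, SIGMA, LENGTH))  # < 1: absorption
```

A single pass here only boosts the beam by about 10%. In a laser, mirrors send the light back through the medium again and again, so that per-pass gain compounds into the cascade described above.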

The laser grew out of the maser, conceived by Charles Townes in 1951, which used stimulated emission to generate microwaves. In 1960, Thomas Maiman, an engineer at Hughes Aircraft, used the same phenomenon to produce visible light, building the first laser from a small cylinder of ruby. The ruby laser was quickly followed by a host of other lasers as researchers figured out how to get other materials to “lase”: the continuous helium-neon laser appeared later that year, followed by the semiconductor laser in 1962 and the carbon dioxide laser in 1964.

Clear glass

With a source of coherent light available, researchers began to consider ways that it might be used for communications. By 1966, Scientific American reported that “there are probably more physicists and engineers working on the problem of adapting the laser for use in communication than any other single [laser] project.” Initially it was hoped that lasers could simply be directed through the open air, but that quickly proved infeasible: even high-powered laser beams would be disrupted by fog or rain (though some engineers suggested mounting lasers on high-altitude balloons that floated above the clouds). It seemed that even laser beams would need to travel through some sort of pipe to make an effective communications system. Researchers tried guiding the beams with mirrors, conventional lenses, and gas lenses (where air at different temperatures acts as a lens), but these approaches had problems of their own: small fluctuations in temperature would send the beam off course, and this proved perniciously difficult to fix.

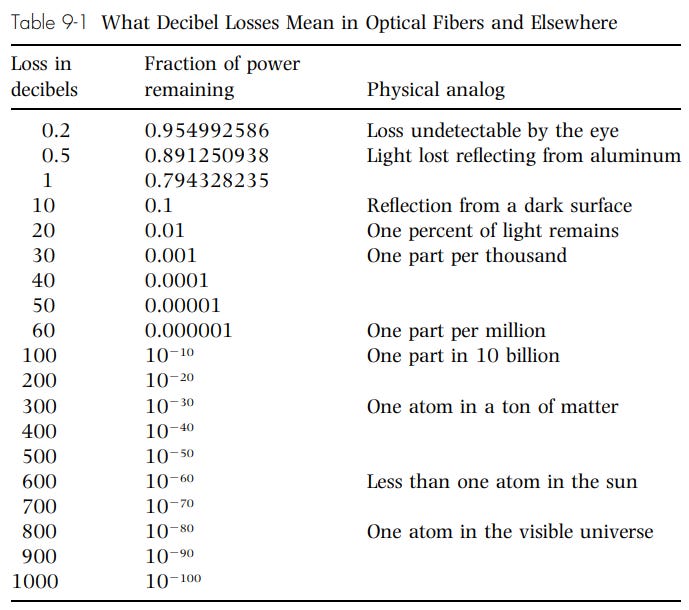

Researchers eventually turned to glass fibers as a possible medium for carrying light, but this was out of desperation more than anything else. At the time, even the best glass fibers reduced light intensity by 20 decibels over 20 meters, a 99% reduction. Over a kilometer, that adds up to 1,000 decibels: the signal would be reduced to 1/10^100th of its original strength, a 99.999...% reduction with 100 nines in all. To make a workable communications system, losses would have to be reduced to 20 decibels over a kilometer. That meant cutting the loss per meter by a factor of 50, and producing glass astronomically clearer over long distances than anything that had ever been made. To most engineers, this seemed unattainable.
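
Decibels are logarithmic, so losses in dB add linearly with distance, and the fraction of light remaining is 10^(−dB/10). The Python sketch below reproduces the figures in the paragraph: 20 dB per 20 m for the best early fibers against a target of 20 dB per kilometer. The function names are hypothetical.

```python
import math


def fraction_remaining(loss_db: float) -> float:
    """Fraction of optical power left after a loss of loss_db decibels."""
    return 10 ** (-loss_db / 10)


def link_loss_db(distance_m: float, loss_db_per_m: float) -> float:
    """Decibel losses add linearly with distance."""
    return distance_m * loss_db_per_m


early_fiber = 20 / 20   # 20 dB per 20 m -> 1 dB per metre
target = 20 / 1000      # 20 dB per km   -> 0.02 dB per metre

print(fraction_remaining(20))                               # 20 dB leaves 1% of the light
print(link_loss_db(1000, early_fiber))                      # 1,000 dB over a kilometre
print(fraction_remaining(link_loss_db(1000, early_fiber)))  # 1e-100 of the light
print(fraction_remaining(link_loss_db(1000, target)))       # 1% of the light
print(early_fiber / target)                                 # 50x less loss per metre
```

The 10^-100 figure depends on choosing one kilometer as the distance: over 500 meters the same fiber would pass 10^-50 of the light. The distance-independent measure of the required improvement is the ratio of losses per unit length, a factor of 50.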

No one really knew if it was possible to make glass that clear, or what the fundamental limits on glass clarity were. Charles Kao, a researcher at Britain’s Standard Telecommunication Laboratories and an early fiber optics advocate, studied the problem and concluded that most of the loss came from impurities in the glass, such as iron, rather than from the glass itself, so there was no fundamental reason that one couldn’t make glass that clear. In 1969, Kao produced a fused silica glass made from pure silicon dioxide that had a loss of just 5 decibels per kilometer.

Kao’s experiments showed that ultra-clear glass fibers might be possible, and a race ensued to produce them. By 1970, researchers at Corning, a glass manufacturer, had made titanium-doped fused silica fibers with an attenuation of just 16 decibels per kilometer, beating the 20 decibel goal. Two years later they had reduced it even further, to 4 decibels per kilometer. And while the early fibers had been too brittle to be practical, Corning researchers found that by doping the glass with germanium instead of titanium, they could make fibers flexible enough to actually be used.

Lasers get better

As glass became clearer, progress was also being made on laser light sources. The most obvious candidate for a light source was the semiconductor laser, which could be made extremely small and was controllable by electrical currents. But early semiconductor lasers were far from practical. They needed to be extremely cold to operate (-300 degrees Fahrenheit), had lifetimes measured in seconds, failed unpredictably, could only fire intermittently, and were incredibly inefficient (requiring as much electrical current as a refrigerator). Instead of producing a narrow beam of light, early semiconductor lasers produced fuzzy, spread-out beams, for reasons that were difficult to understand. Faced with this array of problems, many researchers abandoned the semiconductor laser.

But thanks to military funding, research on semiconductor lasers continued, and these problems were gradually resolved. Lasers built from layers of different semiconductor materials (forming what are called heterojunctions) reduced current requirements and eventually made room-temperature operation possible. Changing the geometry of the layer arrangement to include a narrow “stripe” in which the current would flow improved beam quality and made it possible to fire the laser continuously. Laser lifetimes improved, first to minutes, then to hours, then to days. By the second half of the 1970s, semiconductor lasers were lasting for a million hours or more at room temperature.

Fiber optics breaks through

At the end of the 1960s, fiber optics was still a long shot, and many still expected millimeter waveguides to be the next step in communications. But as glass fiber quality and semiconductor lasers improved, those perceptions began to change. Not only did the technology offer potentially much higher transmission capacity than the millimeter waveguide, it had a host of other potential benefits. With millimeter waveguides, any bends, even small ones caused by settling soil, caused signals to leak. This degraded the signal and meant that the waveguides would have to be laid in gentle curves, like interstate highways, making installation expensive. Optical fibers, however, were much more flexible: not only could they be bent around corners much more readily, but they could often be routed through existing pipes and ducts, making them cheaper to install and usable within dense urban areas. And because fiber optics carried light, they weren’t susceptible to the sort of electromagnetic interference that often plagued systems using longer wavelengths.

By the second half of the 1970s, the millimeter waveguide had been abandoned as the next generation of data transmission infrastructure in favor of fiber optics. In 1976, AT&T turned on its first test fiber optic system in a factory in Atlanta, and installed the first fiber optic system for customer use in Chicago in 1977. British telephone companies began fiber optic field trials that same year, and the Japanese were in the game as well. In 1980, AT&T chose fiber optics for its Northeast corridor link between Boston and Washington, one of the most high-traffic and important communication lines in the country.

From there, use of fiber optics took off. In 1982 MCI placed an order with Corning for 100,000 kilometers of optical fiber, and other telecom companies followed with similarly large orders. By 1983, fiber optics was a $300 million market; by 1986, it had reached $1 billion. That year, over two million kilometers of fiber optic cable were shipped in the U.S. alone. In 1988, the first transatlantic fiber optic cable, TAT-8, was laid, replacing the previous technology of coaxial cable and allowing submarine cables to remain competitive with satellite systems.

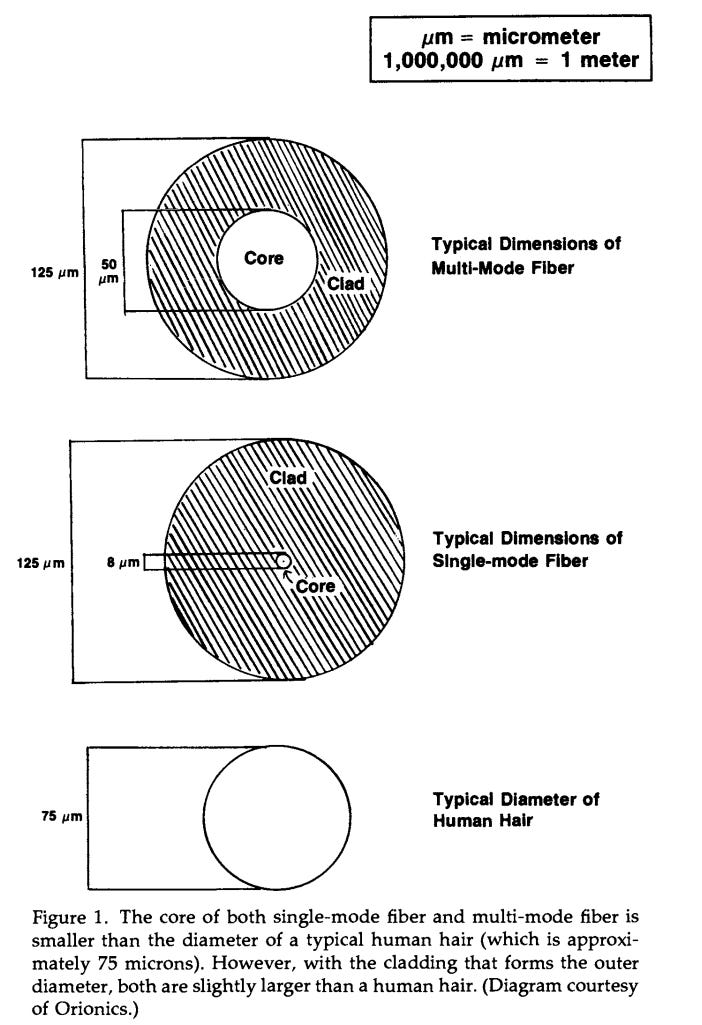

As fiber optics were deployed more widely, the technology continued to improve. AT&T’s early systems used “multi-mode” fibers, where the core was wide enough that light could take multiple paths through it; because those paths have slightly different lengths, pulses smear out as they travel. These were eventually superseded by “single-mode” fibers, which carry light along a single path and have much lower losses. Lasers became more reliable, and while several early installations (such as the Northeast corridor) used LEDs instead of lasers as a light source, lasers soon became standard. Lasers operating at longer wavelengths were introduced, taking advantage of the fact that scattering losses in glass fall sharply as wavelength increases. Faster-pulsing lasers increased transmission capacity, as did wavelength division multiplexing (where a single fiber carries multiple signals transmitted at different wavelengths). Early fiber optic systems had transmission capacities of 45 megabits per second; by the early 2000s they had reached 40 gigabits per second, nearly 1,000 times as much. All-optical erbium-doped fiber amplifiers began to replace electronic amplifiers (which had to convert the light into an electronic signal, then back into light). Costs for fiber optic systems fell, as did the minimum installation distance at which fiber optics were economical.
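
Two of these improvements can be checked with textbook formulas for an idealized step-index fiber. A fiber guides only one mode when its normalized frequency V = 2πa·NA/wavelength (a is the core radius) is below about 2.405, while a large-V fiber carries roughly V²/2 modes. Rayleigh scattering in glass scales as 1/wavelength⁴. The Python sketch below applies these to two hypothetical fibers with typical-looking dimensions; they are not AT&T’s actual designs, and the function names are illustrative.

```python
import math

# For a step-index fiber, only one mode is guided when V is below the first
# zero of the Bessel function J0 (about 2.405).
SINGLE_MODE_CUTOFF = 2.405


def v_number(core_radius_m, numerical_aperture, wavelength_m):
    """Normalized frequency V = 2*pi*a*NA / wavelength of a step-index fiber."""
    return 2 * math.pi * core_radius_m * numerical_aperture / wavelength_m


def is_single_mode(core_radius_m, numerical_aperture, wavelength_m):
    return v_number(core_radius_m, numerical_aperture, wavelength_m) < SINGLE_MODE_CUTOFF


def approx_mode_count(v):
    """Rough number of guided modes in a step-index fiber; only meaningful for large V."""
    return v * v / 2


def rayleigh_loss_ratio(short_wl_m, long_wl_m):
    """How many times larger Rayleigh scattering is at short_wl_m than at long_wl_m,
    using the 1/wavelength**4 scaling."""
    return (long_wl_m / short_wl_m) ** 4


# Hypothetical fibers with typical-looking dimensions.
wide = dict(core_radius_m=25e-6, numerical_aperture=0.20, wavelength_m=850e-9)
narrow = dict(core_radius_m=4.1e-6, numerical_aperture=0.12, wavelength_m=1550e-9)

v_wide = v_number(**wide)
print(f"wide core:   V = {v_wide:.1f}, roughly {approx_mode_count(v_wide):.0f} modes")
print(f"narrow core: V = {v_number(**narrow):.2f}, single-mode: {is_single_mode(**narrow)}")
print(f"Rayleigh scattering, 850 nm vs 1550 nm: {rayleigh_loss_ratio(850e-9, 1550e-9):.1f}x")
```

The wide-core fiber supports hundreds of paths, while the narrow one supports only one. Moving from 850 to 1,550 nanometers cuts Rayleigh scattering by roughly a factor of 11, though real fiber loss also depends on absorption, which this sketch ignores.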

So much fiber was installed, in fact, that capacity vastly exceeded demand. The resulting bubble was followed by the collapse of many telecom companies in the early 2000s, and left behind huge amounts of “dark fiber” (fiber installed but not yet in use) that paved the way for the growth of the internet in the 21st century.

Conclusion

There are several notable trends visible in the history of fiber optics. One is that it takes a huge array of innovations to make a new technology possible. Fiber optics required, among other things: figuring out how to make strong, flexible glass fibers, figuring out how to prevent them from leaking light, making them exceptionally clear, and creating light sources that could emit coherent light.

Not only did these individual capabilities all need to be created, but they needed to reach a certain level of performance to be practical. Glass fibers have existed for millennia, but it wasn’t until the glass was sufficiently clear and free of impurities that it became possible to use it as a long-distance transmission medium. Similarly, early sources of coherent light were completely impractical to actually use in a communications system. Progress in fiber optics was as much due to resolving various practical problems as it was to breakthrough discoveries like the laser.

And, as we saw when we looked at fusion power, many fiber optic technologies needed to improve immensely over a short period of time. Within a few years, semiconductor laser lifetimes improved by more than a factor of a million, and glass clarity (measured as loss per kilometer) improved by a factor of 50. It was this rapid pace of improvement that made fiber optics possible, and it is part of the reason why technological progress is often difficult to predict. The extremely high losses of light through even the best glasses in the early 1960s led many to write fiber optics off completely and focus their attention on what seemed like more promising technologies.
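
One way to compare these jumps with Moore’s Law, described in the introduction as a doubling every two years, is to convert each into an implied doubling time. The text gives no exact dates for these improvements, so the time spans below (5 years for the 50x gain in clarity, 10 years for the millionfold gain in laser lifetime) are assumptions chosen for illustration. The Python sketch only does the arithmetic.

```python
import math


def doubling_time_years(improvement_factor, years):
    """Doubling time implied by improving by improvement_factor over `years`."""
    return years * math.log(2) / math.log(improvement_factor)


def improvement_over(years, doubling_time):
    """Total improvement after `years` at a constant doubling time."""
    return 2 ** (years / doubling_time)


# Moore's Law as stated in the introduction: a doubling every two years.
print(f"Moore's Law over 10 years: {improvement_over(10, 2):.0f}x")

# Hypothetical time spans, assumed only for illustration.
print(f"50x in 5 years -> doubling every {doubling_time_years(50, 5):.2f} years")
print(f"1,000,000x in 10 years -> doubling every {doubling_time_years(1e6, 10):.2f} years")
```

Under these assumed spans, glass clarity would have doubled a little faster than once a year and laser lifetimes roughly every six months, compared with every two years for Moore’s Law. Different spans would shift these numbers, so they indicate an order of magnitude only.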

Finally, the development of fiber optics provides something of a counterpoint to the argument in my recent piece that Bell Labs was a unique innovation engine. While Bell Labs was a key participant in the development of fiber optics technology, most of the important firsts (the first laser, the first semiconductor laser, the first ultra-high-clarity glass fibers) were achieved elsewhere. And while AT&T had historically been known for being slow to introduce new technology (its Northeast corridor project made relatively conservative technology choices, such as multi-mode fibers and LED light sources), the rush of many telecom companies to deploy fiber and get an edge over their competitors following AT&T’s breakup probably helped accelerate not only the deployment of fiber optics but also its rapid improvement. There’s some evidence that despite its long record of achievements, AT&T and Bell Labs actually held back innovation overall, and fiber optics provides an example.

In the early ’90s I got to know Dr. Kao through volleyball - his daughter was a teammate. I was working in Optical Communications at Bell Labs Area 11, but didn't realize he was "THAT C. Kao" ! A great guy in general and here's a little insight into him: A group of us were going to move him from an apartment in mid-Manhattan to a house in NJ, and we were doing a walk-through to size up the work necessary. I saw a large (6') framed scroll hanging on the wall; lovely hand-brushed calligraphy. Dr. Kao asked "Would you like me to translate?". It had been presented to him by the CUHK Dept. of Electronics on his retirement. With a slight grin he said "This is what it SAYS" and marked each character with his finger: "You .. see .. greatness .. in .. the .. smallest .. of .. things". But then with a devilish grin he remarked "But this is what it MEANS" and jabbing with the finger: " YOU .. MAKE .. LOTS .. OF .. TROUBLE .. OVER .. NOTHING !". It being Father's Day he took us all out to a very nice restaurant for dim sum. In my book, that's a Mensch.

I don't believe the way in which you characterize the glass clarity improvement (10^98) is correct and it exaggerates the extent of the improvement that took place. Given that light attenuates exponentially with distance, the calculation you've done is sensitive to the distance used (1 kilometer) rather than being unitless. For instance, if a distance of 500m was used instead, the losses would be 10 dB and 500 dB, respectively and you'd report an improvement of 10^49. The relevant unitless value to report as the improvement is the ratio of these decibel improvements (i.e. both 1000/20 and 500/10 are a 50x improvement). This is the required decrease in defects per unit length of the fiber optic to produce these results.

Of course, a 50x improvement is impressive, but in line with the type of improvements of Moore's law, rather than fiber optics being a novel case of a 10^98 improvement factor.