Anyone involved with developing a new building system knows that it’s incredibly difficult to get anyone in the industry to actually use your system. Anything that requires the slightest bit of process change faces an enormous uphill battle that mostly doesn’t get easier or gain momentum. Even if you win one big client, the next client will still be just as hard to convince (21 years after the introduction of Revit, a significant fraction of architects and engineers are still using AutoCAD). The result is that innovation in the construction industry tends to proceed one tiny, evolutionary change at a time.

This usually gets explained away with something to the effect of “construction doesn’t have a culture of innovation” or “construction is too traditional”. But we should find this situation at least a little surprising. Construction is a high dollar value, low profit margin, highly competitive industry - the potential rewards for someone who can find a cheaper, more efficient way of doing things are enormous. A “traditional culture” is a hundred dollar bill lying on the ground - we should expect someone to come along and pick it up.

But I suspect this reluctance to innovate is actually a rational calculus based on the expected value of an innovation.

Cost Overruns and Fat Tails

Construction projects are notorious for being over budget and behind schedule. KPMG’s 2015 Construction Survey found just 31% of projects were within 10% of their original budget, and only 25% were within 10% of their original deadline. A literature review in Aljohani 2017 found average overrun rates anywhere from 16.5% to 122%, depending on the type of project being studied.

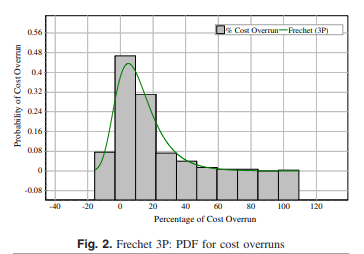

But more important than the average cost overrun rate is the shape of the distribution. Consider Jahren 1990, which looked at construction costs for over 1500 Navy construction projects. The graph of the distribution of overrun rates is below:

We see that the distribution of cost outcomes is right-skewed - though the median cost overrun is actually fairly low (just above 0%), projects were more than 10 times as likely to be over budget as under budget, and much more likely to be far above budget than far below budget.

Other studies have found similar results. Love 2013, when investigating 276 Australian construction and civil engineering projects, also found a right-skewed distribution:

As did Bhargava 2010 in an analysis of over 1800 Indiana DOT construction projects (cost overrun is the Y axis):

In addition to being right-skewed, these distributions are also fat-tailed - we’re much more likely to encounter values far from the mean than we might expect.

The most central example of a probability distribution is the normal distribution. The normal distribution, in addition to being symmetrical, is thin-tailed - the probability of a given outcome drops off rapidly as you get farther from the mean. The likelihood of exceeding the average value by more than 1 standard deviation is 15.87%; by 2 standard deviations, 2.28%; by 3, 0.13%. So for instance, if we’re looking at the distribution of human heights (governed by something like a normal distribution), we might expect to see the occasional 7 foot tall person, but we don’t expect to ever encounter someone 10 feet tall, no matter how big our sample is.
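As a quick check on how fast a normal tail thins out, here is a minimal sketch using Python's standard library. The helper name `upper_tail` is mine, not something from the studies above; it just reports the one-sided probability of landing more than z standard deviations above the mean.

```python
from statistics import NormalDist


def upper_tail(z: float) -> float:
    """One-sided probability that a normal value exceeds its mean by more than z standard deviations."""
    return 1.0 - NormalDist().cdf(z)


# Print the tail probability at 1, 2 and 3 standard deviations.
for z in (1, 2, 3):
    print(f"P(more than {z} sd above the mean) = {upper_tail(z):.2%}")
```

Each extra standard deviation cuts the probability by a larger factor than the one before, which is what "thin-tailed" means in practice.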

But the tails on construction cost distributions appear to be fat. In the Jahren Navy study, around 80% of the projects were within 10% of their design budget (pretty dang good, if KPMG is to be believed). On a normal distribution, this would mean that only about 0.5% of projects should be expected to exceed their budget by 20% or more. But the actual rate was 25 times higher than that.
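The normal-distribution benchmark can be reproduced with a rough sketch like the one below. It assumes a normal distribution centered on a 0% overrun, which is my simplification (the study's actual mean isn't given here), and the function and parameter names are illustrative. The idea: find the standard deviation that puts 80% of projects within plus or minus 10%, then ask how much probability lies beyond a 20% overrun.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def normal_exceedance(share_within: float, band: float, threshold: float) -> float:
    """P(overrun > threshold) for a zero-mean normal with `share_within` of its mass inside +/- band."""
    # z-score at the edge of the band, e.g. the 90th percentile when 80% sits inside it
    z_band = STD_NORMAL.inv_cdf(0.5 + share_within / 2.0)
    sigma = band / z_band
    return 1.0 - STD_NORMAL.cdf(threshold / sigma)


p = normal_exceedance(share_within=0.80, band=0.10, threshold=0.20)
print(f"Normal-model share of projects more than 20% over budget: {p:.2%}")
```

Under these assumptions the answer comes out on the order of half a percent, so an observed rate many times higher than that is what marks the tail as fat.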

What this means is what everyone who works in construction knows intuitively - a project that goes extremely well might come in 10 or 20% under budget. But a project that goes badly could be 100% over budget or more.

Nails and Fat Tails

Where do the fat tails in construction projects come from?

Consider a worker with just one job, driving nails with a nail gun. Assume the time it takes to drive a nail is normally distributed [0], with a fairly narrow spread - each nail takes an average time of one second, with a standard deviation of 0.5 seconds. But every 100 nails, he needs to reload the nail gun, an action which is also normally-distributed but has a mean of 10 seconds. Every 500 nails, he needs to go to his truck and get another box of nails, which has a mean time of 60 seconds. And every 5000 nails, he has to drive to the store to get more, which has a mean time of 10 minutes.

This sort of model gets us a right-skewed, fat-tailed distribution of nail-driving times. The majority of nail drive times will be clustered near 1 second, but every so often the time to the next nail strike will be far higher; this will occur much more often than we’d expect if process times were normally distributed (for a process with a mean of 1 and a standard deviation of 0.5, the odds of a nail taking 10 seconds to be struck are well over a quadrillion to one [1]).
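Here is a minimal simulation sketch of the nail gun model. The intervals and mean times come from the description above; the standard deviations for reloading, walking to the truck, and driving to the store are not given, so the values below are assumptions, as is the choice to floor every draw at zero and to stack interruptions when several fall on the same nail. The last line computes the pure-normal tail probability with `math.erfc`, which stays accurate at z-scores far beyond where a plain `1 - cdf` calculation rounds to zero.

```python
import math
import random

# (interval in nails, mean seconds, standard deviation in seconds)
# Intervals and means follow the text; these standard deviations are assumed.
INTERRUPTIONS = [
    (100, 10.0, 2.0),      # reload the nail gun
    (500, 60.0, 15.0),     # fetch a new box of nails from the truck
    (5000, 600.0, 120.0),  # drive to the store for more nails
]


def draw(rng: random.Random, mean: float, sd: float) -> float:
    """Normal draw floored at zero so no task takes negative time."""
    return max(0.0, rng.gauss(mean, sd))


def simulate_nail_times(n_nails: int, seed: int = 0) -> list:
    """Time between consecutive nail strikes, including any interruptions."""
    rng = random.Random(seed)
    times = []
    for i in range(1, n_nails + 1):
        t = draw(rng, 1.0, 0.5)  # driving the nail itself
        for interval, mean, sd in INTERRUPTIONS:
            if i % interval == 0:
                t += draw(rng, mean, sd)
        times.append(t)
    return times


def normal_upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal, computed via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


times = simulate_nail_times(100_000)
share_slow = sum(t >= 10.0 for t in times) / len(times)
print(f"mean time per nail: {sum(times) / len(times):.2f} s")
print(f"simulated share of nails taking >= 10 s: {share_slow:.4f}")
print(f"pure-normal probability of >= 10 s: {normal_upper_tail((10.0 - 1.0) / 0.5):.1e}")
```

The simulated share of slow nails is small but easily visible in a sample this size, while the pure-normal probability is so small that no realistic sample would ever contain one.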

In this model, the “average” times are only achieved if the inputs to the process are reliable. It takes an average of 1 second to drive a nail, IF there are nails available to drive. When the inputs fail, when the worker runs out of nails, that average time goes out the window. Average times describe what happens within a process, but don’t take into account the interaction between processes.

What’s more, this input failure will cascade downwards - the nailing itself is an input that some downstream process is assuming will be completed. If the worker runs out of nails, he can’t finish building the frame. If the frame isn’t complete, the waterproofing can’t be installed, which prevents the electrical from being installed, which prevents the drywall from going in, and so on. For want of a nail, the kingdom was lost, etc.

We saw similar results occur in our pin factory simulation - the occasional failure rate in a process causes work to get backed up in the system, reducing throughput and increasing completion time. Construction is affected by this sort of failure more than other production systems, as there is often limited ability to keep slack in the system. A car factory can buffer against potential failures by keeping extra inventory, or by having extra capacity, or by simply tightly controlling their process. But construction is much closer to a series of one-off steps, making it much harder to buffer.

Cascading Failures

Construction projects employ an army of project managers specifically to prevent these cascading failures from happening. They keep an eye on the schedule and the critical path, track deliverables, prioritize urgent tasks, and identify and tackle blockers that are preventing the various contractors from getting their work done. These efforts prevent process failures (“sorry, the plumber didn’t show up today”, “sorry, the wrong concrete mix got ordered”, etc.) from spiraling out of control and bringing the project to a halt.

But even with project managers, cascading failures remain a risk due to the nature of construction. Construction has the unfortunate combination of building mostly unique things each time (even similar projects will be built on different sites, in different weather conditions, and likely with different site crews) and consisting of tasks that are costly to undo (it’s a lot easier to pour concrete than to unpour it) and highly sequential (and thus time-sensitive). A building, for the most part, can’t be beta-tested to work the bugs out. Combined with the riskiness inherent in building large, heavy things that will be occupied by people (any failure becomes a potential life safety issue) and are heavily regulated, this means that any given process failure has the potential to completely derail your project.

So for instance, an improperly installed connection might mean that your building partially collapses during construction. Or failure to measure lane width correctly might mean a diligent fire marshal stops construction until he’s satisfied that firetrucks can turn around near the building. Or your crew might do a bad job pouring concrete, leaving no option except to rip it out and redo it.

The upside of any given project is usually a single-digit profit margin. But the downside is “whatever your company is worth” [2]. A project that goes bad might force you to declare bankruptcy, or get you banned from doing business for 10 years.

Costs, Uncertainty, and Innovation

This risk of cascading failure means that for a construction project to go well (or at least NOT go poorly) it’s important to have a deep understanding of each task involved, and how those tasks relate to each other. The contractor needs to know how the work of the electrician is influenced by the structural system used (for instance, when the light switches go in will vary depending on if the walls are wood or masonry), and how in turn the electrician's work affects the other trades. This is why requests for proposals often require some number of years of experience with a given construction type, or ask for resumes of the project team.

Each task has a web of influence that can impact every other part of the project. Any system that disrupts an established process is thus inherently risky.

We can illustrate this with a simplified model. Consider a process that’s similar to our nailgun model above:

82% of projects will be completed with a mean of 1 (100% of budget), with a standard deviation of 0.1.

10% of projects will be completed with a mean of 1.5, and a standard deviation of 0.25, capped below at zero.

5% of projects will be completed with a mean of 2, and a standard deviation of 0.5

2% of projects will be completed with a mean of 3, standard deviation of 1.

1% of projects will be completed with a mean of 4, standard deviation of 2.

Under this model, most projects would come in near budget, with a skew towards the right - more projects coming in over budget than under. But there would be a small, but non-negligible chance of projects going massively over budget, by 400% or more. Running this model gets a mean of about 1.15 - the average cost overage is about 15%, somewhat in line with the studies on cost overruns above.

What happens if we fatten up the tail, by doubling the likelihood of worst-case outcomes? Now 20% of projects will have a mean of 1.5, 10% will have a mean of 2, etc. Doing this increases the mean project cost to 1.24 - an increase in overall cost of 9% [3]. An innovative construction method would thus have to be not just cheaper, but SO much cheaper that it brings the cost of the entire building down by 9%. Since buildings are made up of dozens of trades, each just a small fraction of the cost of the whole building, this is a tall order.
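For anyone who wants to experiment with this kind of model, here is a rough sketch. The weights, means, and standard deviations are taken from the list above; everything else is an assumption on my part: "doubling" is implemented by multiplying each tail weight by 2 and giving the leftover weight to the on-budget case, and each draw is floored at zero. The text doesn't spell out how the quoted figures were produced, so the numbers this prints need not match the 1.15 and 1.24 quoted above.

```python
import random

# (weight, mean cost as a multiple of budget, standard deviation) for the tail cases
TAIL = [(0.10, 1.5, 0.25), (0.05, 2.0, 0.5), (0.02, 3.0, 1.0), (0.01, 4.0, 2.0)]
BASE = (1.0, 0.1)  # mean and standard deviation of the on-budget case


def components(tail_scale: float = 1.0) -> list:
    """(weight, mean, sd) triples with every tail weight multiplied by tail_scale."""
    tail = [(w * tail_scale, m, sd) for w, m, sd in TAIL]
    base_weight = 1.0 - sum(w for w, _, _ in tail)
    return [(base_weight, *BASE)] + tail


def analytic_mean(tail_scale: float = 1.0) -> float:
    """Weighted average of the component means, ignoring the floor at zero."""
    return sum(w * m for w, m, _ in components(tail_scale))


def simulated_mean(tail_scale: float = 1.0, n: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of mean cost, with each draw floored at zero."""
    rng = random.Random(seed)
    comps = components(tail_scale)
    picks = rng.choices(comps, weights=[w for w, _, _ in comps], k=n)
    return sum(max(0.0, rng.gauss(m, sd)) for _, m, sd in picks) / n


for scale in (1.0, 2.0):
    print(f"tail x{scale:.0f}: analytic mean {analytic_mean(scale):.3f}, "
          f"simulated mean {simulated_mean(scale):.3f}")
```

Whatever the exact figures, the qualitative point survives: a modest increase in the probability of the bad cases moves the expected cost of the whole project by far more than a single trade's savings usually can.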

Under this model, the risk isn’t that some innovation is more expensive than expected - the risk is that the uncertainties inherent in a system you don’t have experience with, combined with the cascading effects of any possible failure, make worst-case outcomes much more likely.

This needn’t necessarily be a risk of actual collapse - it could be something as simple as waterproofing that fails to work as expected, or your bridge vibrating too much when walked on.

Under this model, constant intervention by the project team is required to keep projects within their cost, schedule, and performance envelope. This in turn requires understanding all the processes that are going on, and their upstream and downstream effects. A completely new system threatens to disrupt all that. Based on this, when presented with some innovative building system, a firm would rationally conclude that the cost savings are more than offset by the potential risk.

This isn’t an unreasonable fear. Lack of experience was found to be one of the most likely causes of cost overruns by Memon 2011 and Aljohani 2017, with “poor site management” and “delays in decision making” also significant factors, i.e., an inability to prevent cascading failure effects between disciplines.

Thus what we see is innovations that are evolutionary in nature - things that slot into an existing process, and don’t fundamentally change the systems involved, or the upstream and downstream effects. So we see things like Huber coming out with a new sheathing that incorporates the air and moisture barrier directly into it, but doesn’t fundamentally change the way the building goes together. Or Hilti coming out with new fasteners that are rated for use in seismic applications, but can be used more or less the same way that the older fasteners were. Or PEX piping replacing PVC and copper piping.

Things that require substantial changes to the process have a more difficult time. Systems like Diversakore, Girder Slab, and ConxTech, which require changing how the building goes together, seem to have had difficulty finding a place in the market - these systems are all nearing 20 years old, but seem to have collectively been used for just a few hundred buildings. (It’s not impossible, though - Delta Beam, a conceptually similar product to Girder Slab, has been used on thousands of buildings.) I’m not aware of a single building built using Plenum Panels, an interesting plywood-based timber panel that theoretically should be extremely competitive with CLT, and has been around for 10 years. The fragmented nature of the industry means that you’re constantly fighting the same fight against the risk profile of the contractors and design team.

Risk and Reverse Moral Hazard

And the problem actually gets even worse. In many cases, the person specifying a new system won’t capture the upside from actually using it - they’ll be under a sort of reverse moral hazard, where the benefits accrue to the rest of the project team, but the risks will accrue only to them.

This is often a factor on the design side, where the engineers and architects must do extra work to implement some new system (since it’ll require all new calculations, and all new drawings and details), and must take the responsibility for the design of the system, but won’t capture any of the upside. Girder Slab, for instance, requires the engineer to pay close attention to the construction sequence in a way atypical for steel buildings. But this extra work (and extra risk!) doesn’t come with any additional fee [4].

Construction and Innovation

So, to sum up: construction project costs are right-skewed and fat-tailed; costs can easily end up being 50 to 100% higher than projected, or more. Preventing this requires closely monitoring all the processes taking place on a project, and understanding how they influence each other. A new or innovative system that involves changes to the process thus incurs a significant risk penalty that would likely exceed the expected benefits of the innovation. Construction innovations thus tend to be incremental and evolutionary, things that don’t change the underlying processes [5].

Where does this leave us for construction innovation? I suspect it means that any new system that would involve a substantial change to the process needs to be delivered in a way that can address these issues of risk. One option is to make sure the risk doesn’t end up burdening one particular stakeholder; for instance, offering to design and stamp your system as a deferred submittal (in practice, we see a lot of this for new systems). Another is to internalize the risk yourself - instead of selling your new amazing system, use it to build buildings yourself (this obviously screens off all but the highest-impact innovations).

This is also something that might be improved by a less fragmented market - fewer, larger players that could more easily absorb the potential risk, who could capture more value from using it (either by using it in more projects or by being more vertically integrated), and who could receive a system tailored to their specific needs.

[0] - More properly, this would be a distribution that was right-skewed to avoid zero or negative task times, such as a gamma distribution.

[1] - It turns out it’s non-trivial to get computer programs to spit out probabilities for very high z-scores. Excel tops out at a z-score of around 8.

[2] - Partially this is an artifact of construction companies working on high-dollar value projects while being worth comparatively little. Most construction companies are small, and are service companies without assets.

[3] - It’s actually possibly even worse than this - thickening the tail likely adds the possibility for even worse outcomes - processes with means of 6, 7, or 8x the budget or more. We don’t see these kinds of cost-overruns occur in reality, but we do see projects go so poorly that the contractors go bankrupt, which likely caps the potential downside.

[4] - This occurs with other trades as well. We encountered this multiple times at Katerra, where contractors would be extremely reluctant to use some new building technology that could theoretically offer significant cost savings.

[5] - Interestingly, one area where this seems less true is construction management tools. Because monitoring progress is so important, contractors seem fairly willing to adopt tools that make it easier to do this. You see this in things like Plangrid or Procore, the use of iPads and tablets on site, and the movement towards BIM in general.

It's interesting to compare this to the software industry, which seems to share a lot of characteristics with construction (high dollar value, each project has unique conditions, many sequential dependencies, mistakes are often difficult to fix though maybe not as difficult as construction). My impression is that people come up with new/better ways of building software much more frequently.

I think this is good evidence for your idea that a less-fragmented construction industry might be more innovative: a lot of new software tools were pioneered inside one of the few largest tech companies.

(A large part of this is also obviously that the construction industry has existed for >100x longer than the software industry, so there's much more low-hanging fruit in software. Another relevant difference might be that it's easier to experiment with new software tools in a low-stakes way via side projects; I'd guess that the minimum practical size for a software project is much smaller than for a construction project?)

Great piece.

Robert Gordon finds that the construction industry recorded negative productivity growth from 1995-2005 in both the US and EU-10, owing entirely to declining multi-factor productivity, i.e. the residual that's supposed to capture output from new ideas and innovation.

See: "The Industry Anatomy of the Transatlantic Productivity Growth Slowdown" https://www.nber.org/papers/w25703

Construction productivity is notoriously hard to measure, but stipulate that these estimates are at least directionally correct. What drove the decline in construction MFP? Would you consider doing a post on why and how construction innovation is not just hard, but may even be somehow regressing?