“Every one of the stars in the sky uses fusion to generate enormous amounts of energy. Why shouldn’t we?” – The Future of Fusion Energy

Today all nuclear power reactors are driven by fission reactions, which release energy by splitting atoms apart. But there’s another nuclear reaction that’s potentially even more promising as an energy source: nuclear fusion. Unlike fission, fusion releases energy by combining atoms together. Fusion is what powers the sun and other stars, as well as the incredibly destructive hydrogen bomb.

It’s not hard to understand the appeal of using nuclear fusion as a source of energy. Unlike coal or gas, which rely on exhaustible sources of fuel extracted from the earth, fusion fuel is effectively limitless. A fusion reactor could theoretically be powered entirely by deuterium (an isotope of hydrogen with an extra neutron), and there’s enough deuterium in seawater to power the entire world at current rates of consumption for 26 billion years.

Fusion has many of the advantages of nuclear fission with many fewer drawbacks. Like fission, fusion only requires tiny amounts of fuel: Fusion fuel has an energy density (the amount of energy per unit mass) a million times higher than fossil fuels, and four times higher than nuclear fission. Like fission, fusion can produce carbon-free “baseload” electricity without the intermittency issues of wind or solar. But the waste produced by fusion is far less radioactive than fission, and the sort of “runaway” reactions that can result in a core meltdown in a fission-based reactor can’t happen in fusion. Because of its potential to provide effectively unlimited, clean energy, countries around the world have spent billions of dollars in the pursuit of fusion power. Designs for fusion reactors appeared as early as 1939, and were patented as early as 1946. The U.S. government began funding fusion power research in 1951, and has continued ever since.

But despite decades of research, fusion power today remains out of reach. In the 1970s, physicists began to describe fusion as “a very reliable science…a reactor was always just 20 years away.” While significant progress has been made — modern fusion reactors burn far hotter, for far longer, and produce much more power than early attempts — a net power producing reactor has still not been built, much less one that can produce power economically. Due to the difficulty of creating the extreme conditions fusion reactions require, and the need to simultaneously solve scientific and engineering problems, advances in fusion have been slow. Building a fusion reactor has been described as like the Apollo Program, if NASA needed to work out Newton’s laws of motion as it was building rockets.

But there’s a good chance a working fusion reactor is near. Dozens of private companies are using decades of government-funded fusion research in their attempts to build practical fusion reactors, and it's likely that at least one of them will be successful. If one is, the challenge for fusion will be whether it can compete on cost with other sources of low-carbon electricity.

Fusion basics

A fusion reaction is conceptually simple. When two atomic nuclei collide with sufficient force, they can fuse together to form a new, heavier nucleus, releasing particles (such as neutrons or neutrinos) and energy in the process. The sun, for instance, is chiefly powered by the fusion of hydrogen nuclei (protons) into helium in a series of reactions called the proton-proton chain.

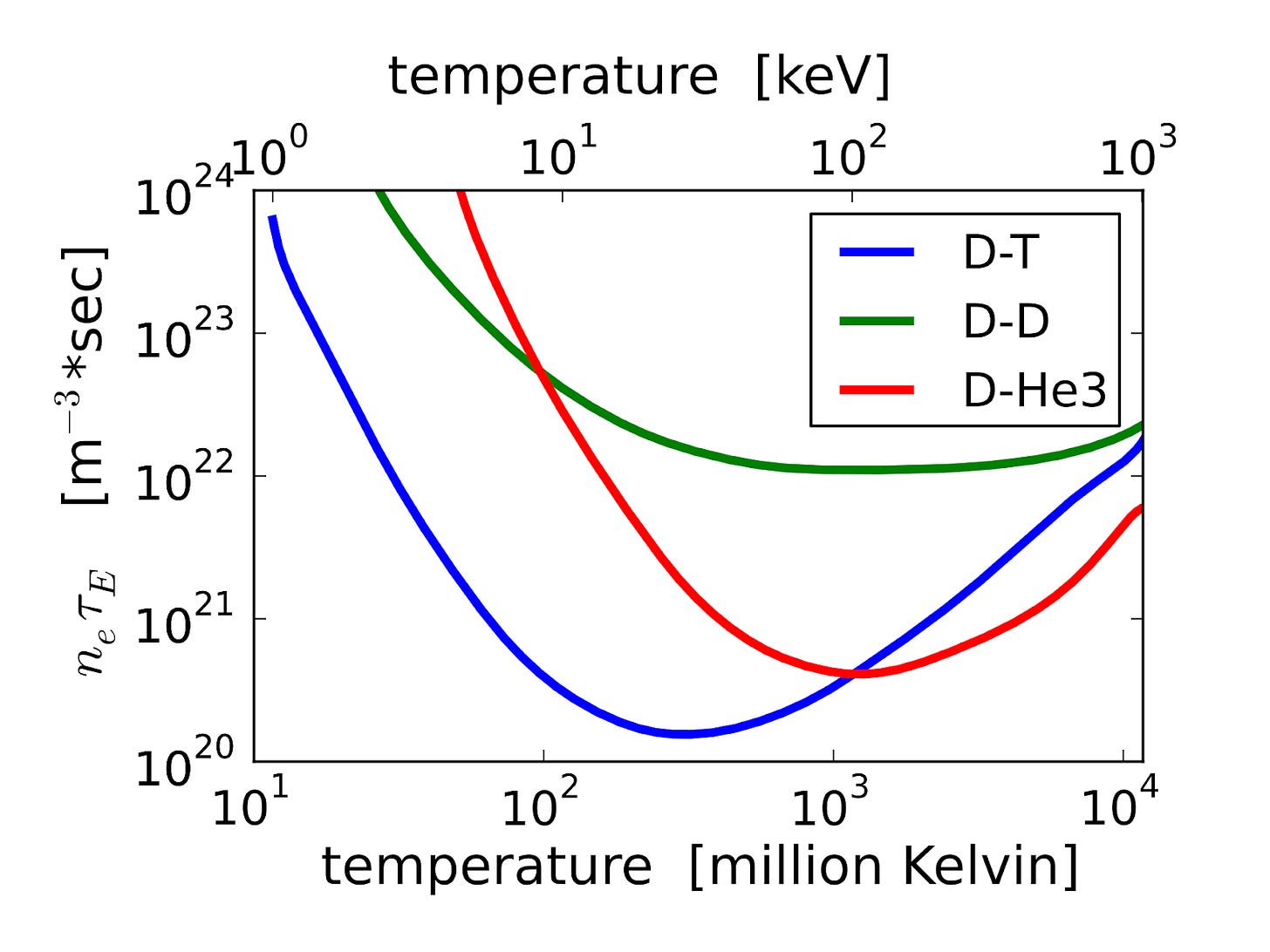

Nuclear fusion is possible because when nucleons (protons and neutrons) get close enough, they are attracted to each other by the strong nuclear force. However, until they get extremely close, the positive charge from the protons in the nuclei will cause the nuclei to repel. This repulsion can be overcome if the nuclei are energetic enough (i.e., moving fast enough) when they collide, but even at high energies most nuclei will simply bounce off each other rather than fuse. To get self-sustained nuclear fusion, you thus need nuclei that are very high energy (i.e., high temperature) and that are given enough opportunities to collide for fusion reactions to occur (achieved by high density and long confinement time). The temperatures required to achieve fusion are so high (tens or hundreds of millions of degrees) that electrons are stripped from the atoms, forming a cloud of negatively-charged electrons and positively-charged nuclei called a plasma. If the product of the plasma’s density and confinement time is above a critical value (known as the Lawson Criterion), and the temperature is high enough, the plasma will achieve ignition: that is, heat from fusion reactions will be sufficient to keep the plasma at the required temperature, and the reaction will be self-sustaining. The product of temperature, density, and confinement time is known as the triple product, and it’s a common measure of performance of fusion reactors.
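As a rough illustration of how the triple product is used as a figure of merit, the sketch below multiplies density, temperature, and confinement time and compares the result with a threshold. The threshold of about 3×10^21 keV·s/m³ for deuterium-tritium fuel is an assumption added here as an order-of-magnitude value, not a figure from this article, and the two example plasmas are hypothetical. Temperature is given in keV, where 1 keV corresponds to roughly 11.6 million degrees.

```python
# Rough D-T ignition threshold for the triple product. This number is an
# assumption added for illustration; the article does not state a value.
ASSUMED_DT_THRESHOLD = 3e21  # keV * s / m^3


def triple_product(density_per_m3, temperature_kev, confinement_s):
    """Density x temperature x confinement time, in keV * s / m^3."""
    return density_per_m3 * temperature_kev * confinement_s


def reaches_threshold(density_per_m3, temperature_kev, confinement_s,
                      threshold=ASSUMED_DT_THRESHOLD):
    """True if the triple product meets or exceeds the assumed threshold."""
    return triple_product(density_per_m3, temperature_kev, confinement_s) >= threshold


if __name__ == "__main__":
    # Two hypothetical plasmas (illustrative numbers, not measurements):
    # (particles per cubic metre, temperature in keV, confinement time in seconds)
    examples = {
        "hot, dense, long-lived": (1e20, 15.0, 3.0),
        "hot but short-lived": (1e20, 15.0, 0.02),
    }
    for label, params in examples.items():
        print(label, f"{triple_product(*params):.2e}", reaches_threshold(*params))
```

The second example has the same temperature and density as the first but loses its heat 150 times faster, which is enough to put it far below the assumed threshold.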

Because more protons means more electrostatic repulsion, the bigger the nucleus the harder it is to get it to fuse and the higher the Lawson Criterion (though actual interactions are more complex than this). Most proposed fusion reactors thus use very light elements with few protons as fuel. One combination in particular — the fusion of deuterium with tritium (an isotope of hydrogen with two extra neutrons) — is substantially easier than any other reaction, and it's this reaction that powers most proposed fusion reactors.
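To make the "more protons, more repulsion" point concrete, here is a minimal sketch that evaluates the electrostatic potential energy between two bare nuclei, which scales with the product of their proton counts. The 3 femtometre separation is an assumed, illustrative distance (heavier nuclei are also physically larger, which this ignores), and the function name is hypothetical.

```python
# e^2 / (4 * pi * epsilon_0), expressed in MeV * femtometres (approximate).
COULOMB_CONSTANT_MEV_FM = 1.44


def coulomb_energy_mev(z1, z2, separation_fm):
    """Electrostatic potential energy of two bare nuclei, in MeV."""
    if separation_fm <= 0:
        raise ValueError("separation must be positive")
    return COULOMB_CONSTANT_MEV_FM * z1 * z2 / separation_fm


if __name__ == "__main__":
    separation_fm = 3.0  # assumed distance, for illustration only
    pairs = {
        "deuterium + tritium": (1, 1),
        "deuterium + helium-3": (1, 2),
        "carbon + carbon": (6, 6),
    }
    for label, (z1, z2) in pairs.items():
        energy = coulomb_energy_mev(z1, z2, separation_fm)
        print(f"{label}: {energy:.2f} MeV")
```

At a fixed separation, two carbon nuclei repel 36 times more strongly than a deuterium-tritium pair. This captures only the electrostatic part of the picture; as the text notes, the real interactions are more complex.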

The challenge of fusion is that it’s very difficult to keep the fuel at a high temperature and packed densely together for a long period of time. Plasma that touches the walls of a physical container would be cooled below the temperatures needed for fusion, and would melt the container in the process, as the temperature needed for fusion is above the melting point of any known material. How do you keep the plasma contained long enough to achieve fusion?

There are, broadly, three possible strategies. The first is to use gravity: Pile enough nuclear fuel together, and it will be heavy enough to confine itself. Gravitational force will keep the plasma condensed into a ball, allowing nuclear fusion to occur. Gravity is what keeps the plasma contained in the sun. But this requires enormous amounts of mass to work: Gravity is far too weak a force to confine the plasma in anything smaller than a star. It’s not something that can work on earth.

The second option is to apply force (such as from an explosion), to physically squeeze the fuel close enough together to allow fusion to occur. Any particles trying to escape will be blocked by other particles being squeezed inward. This is known as inertial confinement, and it’s the method of confinement used by hydrogen bombs, as well as by laser-based fusion methods.

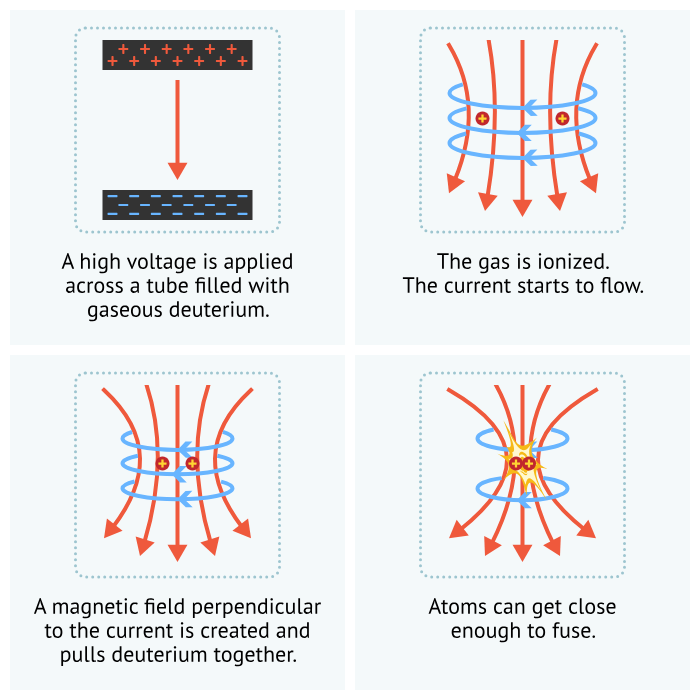

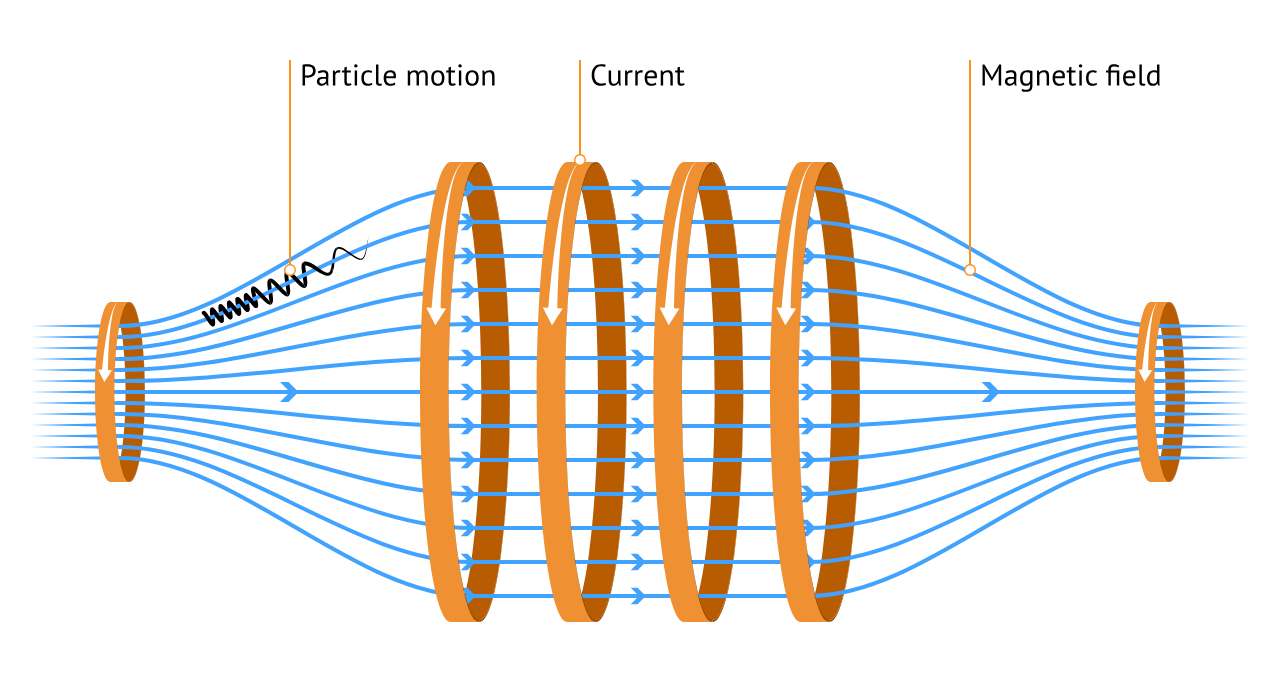

The last method is to use a magnetic field. Because the individual particles in the plasma (positive nuclei and negative electrons) carry an electric charge, their motion can be influenced by the presence of a magnetic field. A properly-shaped field can create a “bottle” that contains the plasma for long enough for fusion to occur. This method is known as magnetic confinement fusion, and is the strategy that most attempts to build a fusion reactor have used.
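One way to see why a magnetic field can hold a plasma is that a charged particle moving across a field line is bent into a circle whose radius (the gyroradius, or Larmor radius) shrinks as the field gets stronger. The sketch below computes that radius for a deuterium nucleus. The particle energy and field strength are assumed example values, the formula is non-relativistic, and the function name is hypothetical.

```python
import math

ELEMENTARY_CHARGE_C = 1.602176634e-19   # coulombs
DEUTERON_MASS_KG = 3.3436e-27           # kilograms (approximate)
JOULES_PER_KEV = 1.602176634e-16


def larmor_radius_m(mass_kg, charge_c, perp_energy_kev, field_tesla):
    """Gyroradius r = m * v / (|q| * B) for motion perpendicular to the field.

    Non-relativistic: the speed v is taken from E = m * v**2 / 2.
    """
    speed = math.sqrt(2.0 * perp_energy_kev * JOULES_PER_KEV / mass_kg)
    return mass_kg * speed / (abs(charge_c) * field_tesla)


if __name__ == "__main__":
    # Assumed example: a 10 keV deuteron in fields of 1, 5, and 10 tesla.
    for field in (1.0, 5.0, 10.0):
        radius = larmor_radius_m(DEUTERON_MASS_KG, ELEMENTARY_CHARGE_C, 10.0, field)
        print(f"B = {field:4.1f} T -> gyroradius = {radius * 1000:.2f} mm")
```

Under these assumptions the particle circles within a few millimetres of its field line, so it can travel freely along the field but only slowly across it. That is the basic property a magnetic "bottle" relies on.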

The spark of fusion power

Almost as soon as fusion was discovered, people began to think of ways to use it as a power source. The first fusion reaction was produced in a laboratory in 1933 by using a particle accelerator to fire deuterium nuclei at each other, though it fused such a tiny number of them (one out of every 100 million accelerated) that experimenter Ernest Rutherford declared that “anyone who expects a source of power from the transformation of these atoms is talking moonshine.” But just six years later, Oxford physicist Peter Thonemann created a design for a fusion reactor, and in 1947 began performing fusion experiments by creating superheated plasmas contained within a magnetic field. Around this same time another British physicist, G.P. Thomson, had similar ideas for a fusion reactor, and in 1947 two doctoral students, Stan Cousins and Alan Ware, built their own apparatus to study fusion in superheated plasmas based on Thomson’s work. This early British fusion work may have been passed to the Soviets through the work of spies Klaus Fuchs and Bruno Pontecorvo, and by the early 1950s the Soviets had their own fusion power program.

In the U.S., interest in fusion was spurred by an announcement from the Argentinian dictator Juan Peron that his country had successfully achieved nuclear fusion in 1951. This was quickly shown to be false, and within a matter of months the physicist behind the work, Ronald Richter, had been jailed for misleading the president. But it triggered interest from U.S. physicists in potential approaches to building such a reactor. Lyman Spitzer, a physicist working on the hydrogen bomb, was intrigued, and within a matter of months had secured approval from the Atomic Energy Commission to pursue fusion reactor work at Princeton as part of the hydrogen bomb project, in what became known as Project Sherwood. Two more fusion reactor programs were added to Sherwood the following year, at Los Alamos (led by James Tuck) and Lawrence Livermore Lab (led by Richard Post).

Early fusion attempts all used magnetic fields to confine a superhot plasma, but they did so in different ways. In Britain, efforts were centered on using an “electromagnetic pinch.” By running an electric current through a plasma, a magnetic field would be created perpendicular to it, which would compress the plasma inward. With a strong enough current, the field would confine the plasma tightly enough to allow for nuclear fusion. Both Thomson and Thonemann’s fusion reactor concepts were pinch machines.

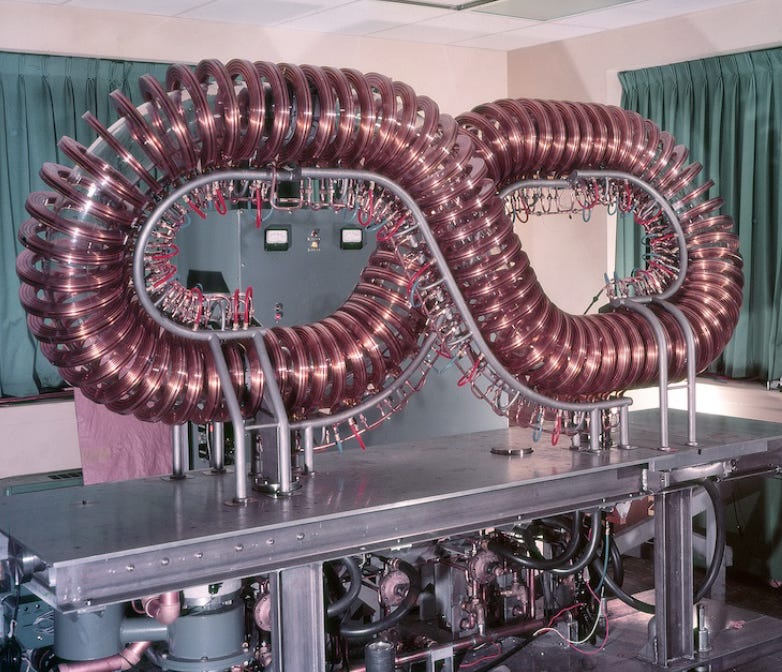

Pinches were pursued in the U.S. as well: James Tuck was aware of the British work, and built his own pinch machine. Skeptical as to whether such a machine would really be able to achieve fusion, Tuck called his machine the Perhapsatron. But other researchers tried different concepts. Spitzer’s idea was to wind magnets around the outside of a cylinder, creating a magnetic field to confine the plasma within. To prevent particles from leaking out either end, Spitzer originally planned to connect the ends together in a donut-shaped torus, but in such a configuration the magnetic coils would be closer together on the inside radius than on the outside, varying the field strength and causing particles to drift. To correct this, Spitzer instead wrapped his tube into a figure eight, which would cancel out the effect of the drift. Because it aimed to reproduce the type of reaction that occurred in the sun, Spitzer called his machine the stellarator.

Richard Post’s concept at Livermore likewise started as a cylinder with magnets wrapped around it to contain the plasma. But instead of wrapping the cylinder into a torus or figure eight, it was left straight: To prevent plasma from leaking out, a stronger magnetic field was created at each end, which would, it was hoped, reflect any particles that tried to escape. This became known as the magnetic mirror machine.

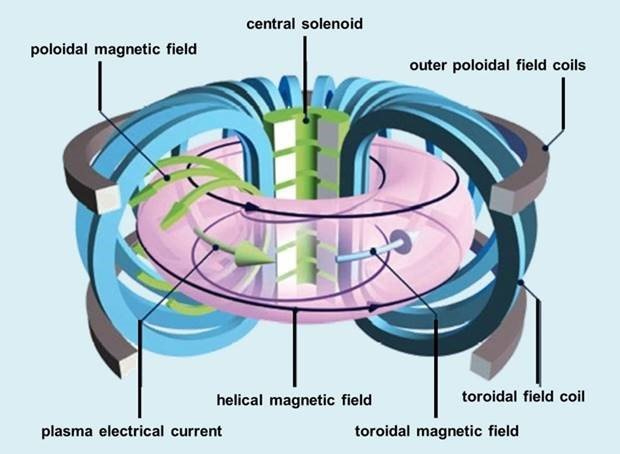

In the Soviet Union, work on fusion was also being pursued by hydrogen bomb researchers, notably Igor Tamm and Andrei Sakharov. The Soviet concept combined Spitzer’s stellarator and the British pinch machines. Plasma would be created in a torus, which would be confined both by external magnetic fields (as in the stellarator) and a self-generated magnetic field created by running a current through the plasma (as in the pinch machines). The Soviets called this a toroidal magnetic chamber, which they abbreviated “tokamak.”

Fusion proves difficult

Early on, it was hoped that fusion power might be a relatively easy problem to crack. After all, it had taken only four years after the discovery of nuclear fission to produce the world’s first nuclear reactor, and less than three years of development to produce the fusion-driven hydrogen bomb. Spitzer’s research plan called for a small “Model A” stellarator designed to heat a plasma to one million degrees and determine whether plasma could be created and confined. If successful, this would be followed by a larger “Model B” stellarator, and then an even larger “Model C” that would reach temperatures of 100 million degrees and in essence be a prototype power reactor. Within four years it would be known whether controlled fusion was possible. If it was, the Model C stellarator would be running within a decade.

But fusion proved to be a far more formidable problem than anticipated. The field of plasma physics was so new that the word “plasma” wasn’t even in common use in the physical sciences (physicists often got paper requests from medical journals assuming they studied the subject of blood plasma), and the behavior of plasmas was poorly understood. Spitzer and others’ initial work assumed that the plasma could be treated as a collection of independent particles, but when experiments began on the Model A stellarator it became clear that this theory was incorrect. The Model A could produce plasma, but it only reached about half its anticipated temperature, and the plasma dissipated far more quickly than predicted. The larger Model B managed to reach the one million degree mark, but the problem of rapid dissipation remained. Theory predicted that particles would flow along magnetic field lines smoothly, but instead the plasma was a chaotic, churning mass, with “shimmies and wiggles and turbulences like the flow of water past a ship” according to Spitzer. Plasmas in the Perhapsatron similarly rapidly developed instabilities.

Researchers pressed forward, in part because of concerns that the Soviets might be racing ahead with their own fusion reactors. Larger test machines were built, and new theories of magnetohydrodynamics (the dynamics of electrically conductive fluids) were developed to predict and control the behavior of the plasma. Funding for fusion research in the U.S. rose from $1.1 million in 1953 to nearly $30 million in 1958. But progress remained slow. Impurities within the reactor vessels interfered with the reactions and proved difficult to deal with. Plasmas continued to have serious instabilities and higher levels of particle drift that existing theories failed to predict, preventing reactors from achieving the densities and confinement times required. Cases of seemingly impressive progress often turned out to be an illusion, in part because even measuring what was going on inside a reactor was difficult. The British announced they had achieved fusion in their ZETA pinch machine in 1957, only to retract the announcement a few months later. What were thought to be fusion temperatures of the plasma were simply a few rogue particles that had managed to reach high temperatures. Similar effects had provided temporary optimism in American pinch machines. When the U.S., Britain, and the Soviet Union all declassified their fusion research in 1958, it became clear that no one was “racing ahead” — no one had managed to overcome the problems of plasma instability and achieve fusion within a reactor.

In response to these failures, researchers shifted their strategy. They had rushed ahead of the physics in the hopes of building a successful reactor through sheer engineering and empiricism, and now turned back towards building up a scaffolding of physical theory to try to understand plasma behavior. But success still remained elusive. Researchers continued to discover new types of plasma instabilities, and struggled to predict the behavior of the plasma and prevent it from slipping out of its magnetic confinement. Ominously, the hotter the plasma got, the faster it seemed to escape, a bad sign for a reactor that needed to achieve temperatures of hundreds of millions of degrees. Theories that did successfully predict plasma instabilities, such as finite resistivity, were no help when it came to correcting them. “People were calculating these wonderful machines, and they turned them on and they didn’t work worth a damn,” noted plasma physicist Harold Furth. By the mid-1960s, it was still not clear whether a plasma could be confined long enough to produce useful amounts of power.

But in the second half of the decade, a breakthrough occurred. Most researchers had initially considered the Soviet tokamak, which required both enormous magnets and a large electrical current through the plasma, a complex and cumbersome device, unlikely to make for a successful power reactor. For the first decade of their existence, no country other than the Soviet Union pursued tokamak designs.

But the Soviets continued to work on their tokamaks, and by the late 1960s were achieving impressive results. In 1968, they announced that in their T-3 and TM-3 tokamaks, they had achieved temperatures of 10 million degrees and confinement times of up to 20 thousandths of a second. While this was far below the plasma conditions needed for a power reactor, it represented substantial progress: The troubled Model C stellarator had only managed to achieve temperatures of one million degrees and confinement times of one thousandth of a second. The Soviets also announced plans for even larger tokamaks that would be able to confine the plasma for up to tenths of a second, long enough to demonstrate that controlled fusion was possible.

Outside the Soviet Union, researchers assumed this was simply another case of stray high-temperature particles confounding measurements. Soviet apparatuses for determining reactor conditions were infamously poor. To confirm their results, the Soviets invited British scientists, who had recently developed a laser-based high-temperature thermometer that would allow measuring the interior of a reactor with unprecedented accuracy. The British obliged, and in 1969 the results were confirmed. After a decade of limited progress, the future of fusion suddenly looked bright. Not only was a reactor concept delivering extremely promising results, but the new British measurement technology would enable further progress by giving far more accurate information on reactor temperatures.

The rush for tokamaks

In response to the tokamak breakthrough, countries around the world quickly began to build their own. In the U.S., Spitzer’s Model C stellarator at Princeton was torn apart and converted into a tokamak in 1970, and a second Princeton tokamak, the ATC, was built in 1972. MIT, Oak Ridge, and General Atomics all built their own tokamaks as well. Elsewhere, tokamaks were built in Britain, France, Germany, Italy, and Japan. By 1972 there were 17 tokamaks under construction outside the Soviet Union.

The breakthrough of the tokamak wasn’t the only thing driving increased fusion efforts in the 1970s. Thanks to the environmental movement, people were increasingly aware of the damage inflicted by pollution from fossil-fuel plants, and skeptical that fission-based nuclear power was a reasonable alternative. Energy availability, already a looming issue prior to 1973, suddenly became a crisis following OPEC’s oil embargo. Fusion’s promise of clean, abundant energy looked increasingly attractive. In 1967, U.S. annual fusion funding was just under $24 million. Ten years later, it had ballooned to $316 million.

The burst of enthusiasm for tokamaks was coupled with another pivot away from theory and back towards engineering-based, “build it and see what you learn”-style research. No one really knew why tokamaks were able to achieve such impressive results. The Soviets didn’t progress by building out detailed theory, but by simply following what seemed to work without understanding why. Rather than a detailed model of the underlying behavior of the plasma, progress on fusion began to take place by the application of “scaling laws,” empirical relationships between the size and shape of a tokamak and various measures of performance. Larger tokamaks performed better: the larger the tokamak, the larger the cloud of plasma, and the longer it would take a particle within that cloud to diffuse outside of containment. Double the radius of the tokamak, and confinement time might increase by a factor of four. With so many tokamaks of different configurations under construction, the contours of these scaling laws could be explored in depth: how they varied with shape, or magnetic field strength, or any other number of variables.
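The idea of an empirical scaling law can be sketched in a few lines: assume confinement time follows a power law in machine radius, then recover the exponent from a set of machines with a straight-line fit on log-log axes. The data below are hypothetical and generated from the "double the radius, quadruple the confinement time" rule of thumb, so the fit simply recovers an exponent of 2. Real scaling laws involve many more variables (shape, field strength, current, and so on), and the function names here are illustrative.

```python
import math


def scaled_confinement_time(reference_time_s, reference_radius_m, radius_m, exponent=2.0):
    """Extrapolate confinement time with tau proportional to radius**exponent."""
    return reference_time_s * (radius_m / reference_radius_m) ** exponent


def fit_power_law(radii_m, times_s):
    """Least-squares fit of log(tau) = log(c) + k * log(r); returns (c, k)."""
    log_r = [math.log(r) for r in radii_m]
    log_t = [math.log(t) for t in times_s]
    n = len(log_r)
    mean_r = sum(log_r) / n
    mean_t = sum(log_t) / n
    s_rr = sum((x - mean_r) ** 2 for x in log_r)
    s_rt = sum((x - mean_r) * (y - mean_t) for x, y in zip(log_r, log_t))
    exponent = s_rt / s_rr
    prefactor = math.exp(mean_t - exponent * mean_r)
    return prefactor, exponent


if __name__ == "__main__":
    # Hypothetical machines: plasma radius in metres, confinement time in seconds.
    radii = [0.15, 0.30, 0.60, 1.20]
    times = [scaled_confinement_time(0.005, 0.15, r) for r in radii]
    prefactor, exponent = fit_power_law(radii, times)
    print(f"fitted exponent: {exponent:.2f}")
    print(f"doubling the radius multiplies tau by {2 ** exponent:.1f}")
```

With measured rather than synthetic data, the fitted exponent would carry scatter and uncertainty, and extrapolating to a much larger machine is exactly the kind of bet that scaling-law-driven research makes.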

In the U.S., this pivot towards practicality came with the appointment of Bob Hirsch as director of the Atomic Energy Commission’s fusion branch in 1972. Hirsch stated, “I came into the program with an attitude that I wanted to build a fusion reactor…I didn’t want to do plasma physics for plasma physics’ sake.” Hirsch believed that U.S. researchers had been too timid, too busy exploring theory instead of trying to make progress towards actual power reactors, and that progress would ultimately require building new, bigger machines before theory had been completely worked out. Efforts should be focused on the most promising concepts, and research efforts aimed at issues of actual power production. For instance, Hirsch favored running experiments with actual deuterium and tritium fuel instead of simply hydrogen; while hydrogen was far easier and less expensive to work with, it would not duplicate power reactor conditions. Hirsch canceled several research projects that were not making progress, and made plans for an extremely large tokamak at Princeton, the Tokamak Fusion Test Reactor (TFTR), that was designed to achieve scientific “breakeven”: getting more energy out of the plasma than was required to maintain it.1 Hirsch also funded a larger mirror machine experiment designed to achieve breakeven, in the hopes that either mirror machines or tokamaks would make the necessary progress and reach that goal within 10 years.

And the U.S. wasn’t the only country racing towards breakeven. Japan had responded to the Soviets’ tokamak announcement by building its own tokamak, the JFT-2, and from there moved on to an even larger tokamak, the JT-60. The JT-60 was even larger than the TFTR and designed to achieve “equivalent breakeven” (not true breakeven, because it was not designed to use radioactive tritium fuel). And the Europeans had banded together to build the enormous Joint European Torus (JET) tokamak, also designed to achieve breakeven. By contrast, the Soviets would effectively leave the fusion race. The follow-up to the T-3, the larger T-10, was less capable than similar machines elsewhere in the world, and their planned machine to achieve breakeven, the T-20, would never be built.

The TFTR produced its first plasma in 1982, followed closely by JET six months later. The JT-60 came online a few years later in 1985. And beyond these large machines, numerous smaller reactors were being built. By the early 1980s there were nearly 300 other research fusion devices around the world, including 70 tokamaks, and worldwide annual fusion funding was nearly $1.3 billion.

These research efforts were yielding results. In 1982, West German researchers stumbled upon conditions that created a plasma with superior density and confinement properties, which they dubbed high-mode or H-mode. In 1983, MIT researchers achieved sufficient density and confinement time (though not temperature) to achieve breakeven on their small Alcator-C tokamak. In 1986, researchers on the TFTR accidentally doubled their confinement time after an especially thorough process of reactor vessel cleaning, and soon other tokamaks could also produce these “supershots.” The TFTR was ultimately able to achieve the density, temperature, and confinement time needed for fusion, though not simultaneously.

But this progress was hard-won, and came frustratingly slowly. The TFTR, JET, and the JT-60 would all fail to achieve breakeven in the 1980s. The behavior of plasma was still largely confusing, as was the success of certain reactor configurations. Advances had been made in pinning down reactor parameters that seemed to produce good results, but there had been little progress on why they did so. At a 1984 fusion conference, physicist Harold Furth noted that despite the advances, tokamaks were still poorly understood, as were the physics behind H-mode. As a case in point, the JT-60 was unable to enter H-mode for opaque reasons, and ultimately needed to be rebuilt into the JT-60U to do so.

Despite decades of research, in the 1980s a working, practical fusion power reactor still seemed very far away. In 1985, Bob Hirsch, the man responsible for pushing forward U.S. tokamak research, stated that the tokamak was likely an impractical reactor design, and that years of research efforts had not addressed the “fatal flaws” of its complex geometry.

Governments had been spending hundreds of millions of dollars on fusion research with what seemed like little to show for it. And while a new source of energy had been an urgent priority in the 1970s, by the 1980s that was much less true. Between 1981 and 1986, oil prices fell almost 70% in real terms. Fusion budgets had risen precipitously in the 1970s, but now they began to be cut.

In the U.S., Congress passed the Magnetic Fusion Engineering Act in 1980, which called for a demonstration fusion reactor to be built by the year 2000, but the funds authorized by the act would never be allocated. The incoming Reagan administration felt that the government should be in the business of funding basic research, not designing and building new reactor technology that was better handled by the private sector. Fusion research funding in the U.S. peaked in 1984 at $468.5 million, but slowly and steadily declined afterward.

One after another, American fusion programs were scaled back or canceled. First to go was a large mirror machine at Livermore, the Mirror Fusion Test Facility. It was mothballed the day after construction was completed, and then canceled completely in 1986 without running a single experiment. Other mirror fusion research efforts were similarly gutted. The follow-up to the TFTR, the Compact Ignition Tokamak or CIT, which aimed to achieve plasma ignition, was canceled in 1990. An alternative to the CIT, the smaller and cheaper Burning Plasma Experiment or BPX, was canceled in 1991. Researchers responded by proposing an even smaller-scale reactor, the Tokamak Physics Experiment or TPX, but when the fusion budget was slashed by $100 million in 1996 this too was scrapped. The 1996 cuts were so severe that the TFTR itself was shut down after 15 years of operation.

In spite of these setbacks, progress in fusion continued to be made. In 1991 researchers at General Atomics discovered an even higher plasma confinement mode than H-mode, which they dubbed “very high mode” or VH mode. In 1992 researchers at JET achieved an extrapolated scientific breakeven, with a “Q” (the ratio of power out to power in) of 1.14. This was quickly followed by Japan’s JT-60U achieving an extrapolated Q of 1.2. (In both cases, this was a projected Q value based on what would have been achieved had deuterium-tritium fuel been used.) In 1994, the TFTR set a new record for fusion power output by producing 10 megawatts of power for a brief period of time, though only at a Q of 0.3. Theoretical understanding of plasma behavior also advanced, and plasma instabilities and turbulence became increasingly understood and predictable.

But without new fusion facilities to push the boundaries of what could be achieved within a reactor, such progress could only continue for so long. Specifically, after TFTR, JET, and JT-60, what was needed was a machine large and powerful enough to create a burning plasma, in which more than 50% of its heating is generated from fusion.
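The relationship between Q and a burning plasma can be worked through with a little arithmetic. The sketch below assumes deuterium-tritium fuel, in which each reaction releases about 17.6 MeV, of which roughly 3.5 MeV goes to a charged helium nucleus (alpha particle) that stays in the plasma and heats it, while the rest leaves with a neutron. Those energy values and the assumption that only the alpha particles contribute to self-heating are added here for illustration; they are not stated in this article, and the function names are hypothetical.

```python
# Energy split of one deuterium-tritium reaction (MeV). These values are
# standard physics but are not given in the article.
ALPHA_ENERGY_MEV = 3.5
TOTAL_ENERGY_MEV = 17.6
ALPHA_SHARE = ALPHA_ENERGY_MEV / TOTAL_ENERGY_MEV


def external_heating_mw(fusion_power_mw, q):
    """External heating power implied by Q = fusion power / heating power."""
    return fusion_power_mw / q


def fusion_heating_fraction(q):
    """Share of total plasma heating supplied by fusion alpha particles."""
    alpha_heating = ALPHA_SHARE * q  # alpha heating per unit of external heating
    return alpha_heating / (alpha_heating + 1.0)


if __name__ == "__main__":
    # A 10 MW shot at Q = 0.3 implies roughly 33 MW of external heating.
    print(f"external heating: {external_heating_mw(10.0, 0.3):.1f} MW")
    for q in (0.3, 1.5, 5.0, 10.0):
        share = fusion_heating_fraction(q)
        print(f"Q = {q:4.1f} -> fusion supplies {share:.0%} of plasma heating")
```

Under these assumptions, fusion supplies half of the plasma's heating at a Q of about 5, which is why a burning plasma requires a machine well beyond scientific breakeven (Q of 1). Ignition corresponds to the limit where external heating is no longer needed at all.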

In 1976, after the TFTR began construction, U.S. fusion researchers laid out several different possible fusion research programs, the machines and experiments they would need to run, and the dates they could achieve a demonstration power reactor. TFTR would be followed by an ignition test reactor that would be large enough to achieve burning plasma (as well as ignition). This would then be followed by an experimental power reactor, and then a demonstration commercial reactor. The more funding fusion received, the sooner these milestones could be hit. The most aggressive timetable, Logic V, had a demo reactor built in 1990, while the least aggressive Logic II pushed it back to 2005. Also included was a plan that simply continued then-current levels of fusion funding, advancing various basic research programs but with no specific plan to build the necessary test reactors. This plan is often referred to as “fusion never.”

Following its peak in the 1980s, American fusion funding has fallen consistently below the “fusion never” level, and none of the follow-up reactors to the TFTR needed to advance the state of the art have been built. As of today, no magnetic confinement fusion reactor has achieved either ignition or a burning plasma.

NIF and ITER

Amidst the wreckage of U.S. fusion programs, progress managed to continue along two fronts. The first was ITER (pronounced “eater”), the International Thermonuclear Experimental Reactor. ITER is a fusion test reactor being built in the south of France as an international collaboration between more than two dozen countries. When completed, it will be the largest magnetic confinement fusion reactor in the world, large enough to achieve a burning plasma. ITER is designed to achieve a Q of 10, the highest Q ever in a fusion reactor (the current record is 1.5), though it is not expected to achieve ignition.

ITER began in 1979 as INTOR, the International Tokamak Reactor, an international collaboration between Japan, the U.S., the Soviet Union, and the European energy organization Euratom. The INTOR program resulted in several studies and reactor designs, but no actual plans to build a reactor. Further progress came in 1985, after Mikhail Gorbachev’s ascent to leader of the Soviet Union. Gorbachev was convinced by Evgeny Velikhov that a fusion collaboration could help defuse Cold War tensions, and after a series of discussions, INTOR was reconstituted as ITER in 1986.

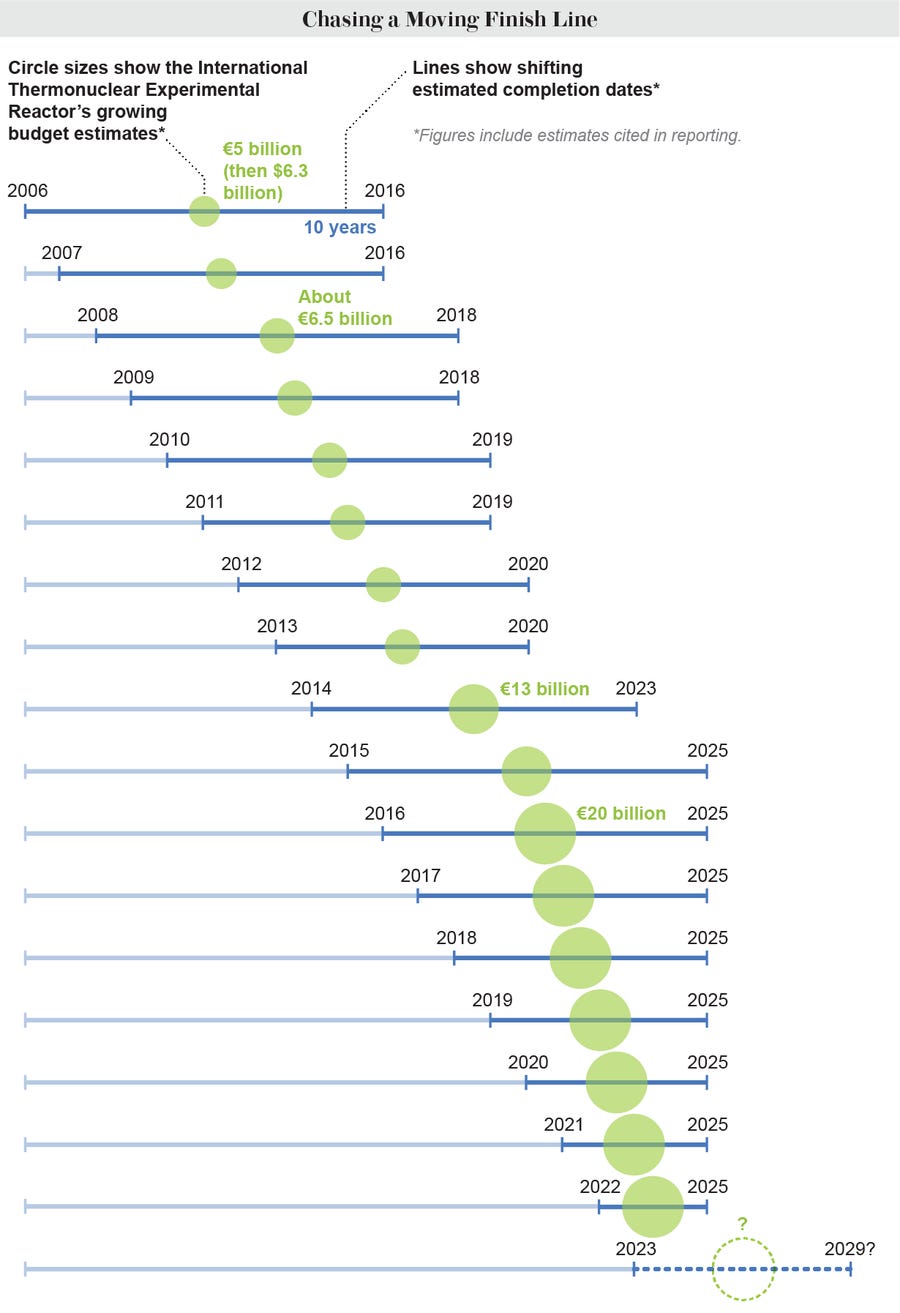

The initial design for the ITER reactor was completed in 1997, and was far larger than any fusion reactor that had yet been attempted. ITER would output 1,500 megawatts of power, 100 times what JET could output, and also achieve plasma ignition. The project would cost $10 billion, and be completed in 2008.

But, unsurprisingly for an international megaproject, ITER stumbled. Japan asked for a three-year delay before construction began, and the U.S. pulled out of the project in 1998. It seemed as if ITER were on the verge of failing. But the reactor was redesigned to be smaller and cheaper, costing an estimated $5 billion but with a lower power output (500 megawatts) and without plans to achieve ignition. The U.S. rejoined the project in 2003, followed by China and South Korea.

Construction of the redesigned ITER kicked off in 2006, and the reactor was expected to come online in 2016. But since then the project has struggled. The completion date has been repeatedly pushed back, and there’s currently no official projected completion date. “Official” costs have risen to $22 billion, with actual costs likely far higher.

The second avenue of progress since the 1990s has been on inertial confinement fusion. As discussed earlier, inertial confinement fusion can be achieved by using an explosion or other energy source to greatly compress a lump of nuclear fuel. Inertial confinement is what powers hydrogen bombs, but using it as a power source can be traced back to an early concept for a nuclear power plant proposed by Edward Teller in 1955. Teller proposed filling a huge underground cavern with steam, and then detonating a hydrogen bomb within it to drive the steam through a turbine.

The physicist tasked with investigating Teller’s concept, John Nuckolls, was intrigued by the idea, but it seemed impractical. But what if instead of an underground cavern, you used a much smaller cavity just a few feet wide, and detonated a tiny H-bomb within it? Nuckolls eventually calculated that with the proper driver to trigger the reaction, a microscopic droplet of deuterium-tritium fuel could be compressed to 100 times the density of lead and reach temperatures of tens of millions of degrees: enough to trigger nuclear fusion.

This seemed to Nuckolls to be far more workable, but it required a driver to trigger the reaction: H-bombs used fission-based atom bombs to trigger nuclear fusion, but this wouldn’t be feasible for the tiny explosions Nuckolls envisioned. At the time no such driver existed, but one would appear just a few years later, in the form of the laser.

Most sources of light contain a mix of wavelengths, which limits how tightly they can be focused: The different wavelengths focus in different places and the light gets spread out. But lasers generate light of a uniform wavelength, which allows it to be focused tightly and to deliver a large amount of power to an extremely small area. If a series of lasers were focused on a droplet of nuclear fuel, a thin outer layer would explode outward, driving the rest of the fuel inward and achieving nuclear fusion. In 1961, Nuckolls presented his idea for a “thermonuclear engine” driven by lasers triggering a series of tiny fusion explosions.

From there, laser fusion progressed on a somewhat parallel path to magnetic confinement fusion. The government funded laser fusion research alongside magnetic confinement fusion, and laser-triggered fusion was first successfully achieved by the private company KMS Fusion in 1974. In 1978 a series of classified nuclear tests suggested that it was possible to achieve ignition with laser fusion. And in 1980, while Princeton was building its enormous TFTR tokamak, Lawrence Livermore Lab was building the most powerful laser in the world, Nova, in the hopes of achieving ignition in laser fusion (Nova would not achieve it).

Part of the interest in laser fusion stemmed from its potential military applications. Because the process triggered a microscopic nuclear explosion, it could duplicate some of the conditions inside an H-bomb explosion, making it useful for nuclear weapons design. This gave laser fusion another constituency that helped keep funding flowing, but it also meant that laser fusion work was often classified, making it hard to share progress or collaborate on research. Laser fusion was often considered several years behind magnetic fusion.

Laser fusion research had many of the same setbacks as magnetic fusion: Plasma instabilities and other complications made progress slower than initially expected, and funding that had risen sharply in the 1970s began to be cut in the 1980s when usable power reactors still seemed very far away. But laser fusion’s fortunes turned in the 1990s. The U.S. ceased its nuclear testing in 1992, and in 1994 Congress created the Stockpile Stewardship Program to study and manage aging nuclear weapons and to keep nuclear weapons designers employed should they be needed in the future. As part of this program, Livermore proposed an enormous laser fusion project, the National Ignition Facility, that would achieve ignition in laser fusion and validate computer models of nuclear weapons. In 1995 the budget for laser fusion was $177 million; by 1999 it had nearly tripled to $508 million.

The National Ignition Facility (NIF) was originally planned to be completed in 2002 for $2.1 billion. But as with ITER, initial projections proved to be optimistic. The NIF wasn’t completed until 2009, at a cost of $4 billion. And like so many fusion projects before it, its goals proved hard to reach: In 2011 Department of Energy (DOE) Science Undersecretary Steve Koonin stated that “ignition is proving more elusive than hoped” and that “some science discovery may be required.” The NIF didn’t produce a burning plasma until 2021, and didn’t achieve ignition until 2022.

And even though the NIF has made some advances in fusion power research, it’s ultimately primarily a facility for nuclear weapons research. Energy-based research makes up only a small fraction of its budget, and this work is often opposed by the DOE (for many years, Congress added laser energy research funding back into the laser fusion budget over the objections of the DOE). Despite the occasional breathless press release, we should not expect it to significantly advance the state of fusion power.

The rise of fusion startups

Up into the 2010s, this remained the state of fusion power. Small amounts of fusion energy research were done at the NIF and other similar facilities, and researchers awaited the completion of ITER to study burning plasma. Advances in fusion performance metrics, which had increased steadily during the second half of the 20th century, had tapered off by the early 2000s.

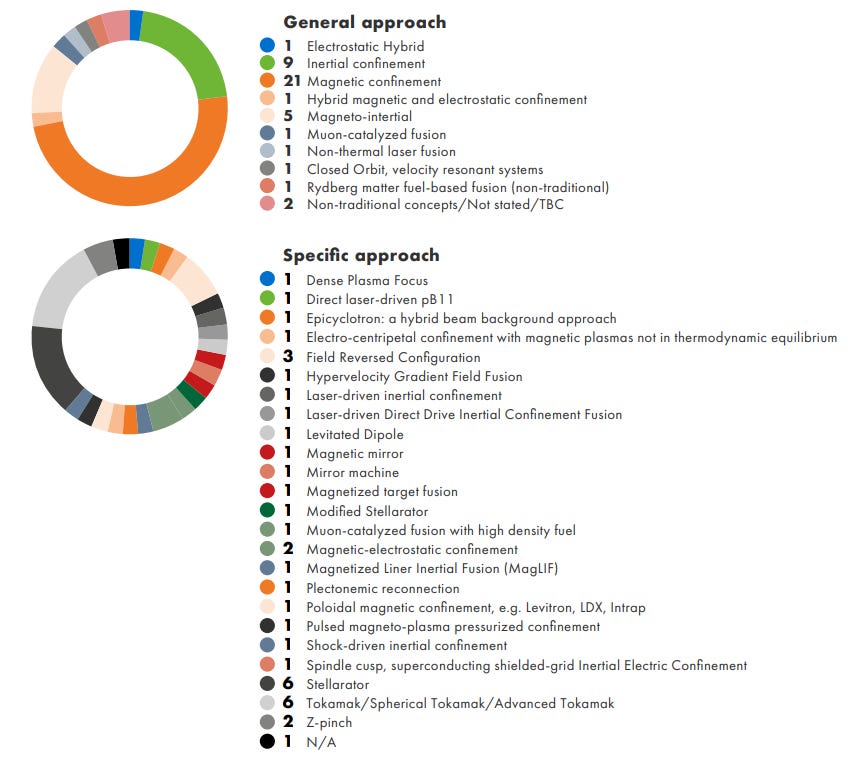

But over the past several years, we’ve seen a huge rise in fusion efforts in the private sector. As of 2023, there are 43 companies developing fusion power, most of which were founded in the last few years. Fusion companies have raised a collective $6.2 billion in funding, $4.1 billion of which has been in the last several years.

These investments have been highly skewed, with a handful of companies receiving the lion’s share of the funding. The 800-pound gorilla in the fusion industry is Commonwealth Fusion Systems, which has raised more than $2 billion in funding since it was founded in 2018. Other major players are TAE Technologies ($1.2 billion in funding), Helion Energy ($577 million in funding with another $1.7 billion of performance-based commitments), and Zap Energy ($200 million).

These companies are pursuing a variety of different strategies for fusion, ranging from tried-and-true tokamaks (Commonwealth), to pinch machines (Zap), to laser-based inertial confinement, to more exotic strategies. Helion’s fusion reactor, for instance, launches two rings of plasma at each other at more than one million miles an hour; when they collide, the resulting fusion reaction perturbs a surrounding magnetic field to generate electricity directly. (Most other fusion reactors generate electricity by using the high-energy particles released from the reactor to heat water into steam to drive a turbine.) The Fusion Industry Association’s 2023 report documents ten major strategies companies are pursuing for fusion, using several different fuel sources (though most companies are using the “conventional” methods of magnetic confinement and deuterium-tritium fuel).

Many of these strategies are being pursued with an eye towards building a reactor cheap enough to be practical. One drawback of deuterium-tritium reactions, for instance, is that much of their energy output is in the form of high-energy neutrons, which gradually degrade the interior of the reactor and make it radioactive. Some startups are pursuing alternative reactions that produce fewer high-energy neutrons. Likewise, several startups are using what’s known as a “field reversed configuration,” or FRC, which creates a compact plasma torus using magnetic fields rather than a torus-shaped reactor vessel. This theoretically will allow for a smaller, simpler reactor that requires fewer high-powered magnets. And Zap’s pinch machine uses a relatively simple reactor shape that doesn’t require high-powered magnets: Their strategy is based on solving the “physics risk” of keeping the plasma stable (a perennial problem for pinch machines) via a phenomenon known as shear-flow stabilization.

After so many years of research efforts funded almost exclusively by governments, why does fusion suddenly look so appealing to the private sector? In part, steady advances of science and technology have chipped away at the difficulties of building a reactor. Moore’s Law and advancing microprocessor technology have made it possible to increasingly accurately model plasma within reactors using gyrokinetics, and have enabled increasingly precise control over reactor conditions. Helion’s reactor, for instance, wouldn’t be possible without advanced microprocessors that can trigger the magnets rapidly and precisely. Better lasers have improved the prospects for laser-based inertial confinement fusion. Advances in high-temperature superconductors have made it possible to build extremely powerful magnets that are far less bulky and expensive than were previously possible. Commonwealth Fusion’s approach, for instance, is based entirely on using novel magnet technology to build a much smaller and cheaper tokamak: Their enormous $1.8 billion funding round in 2021 came after they successfully demonstrated one of these magnets, which uses superconducting magnetic tape.

But there are also non-technical, social reasons for the sudden blossoming of fusion startups. In particular, Commonwealth Fusion’s huge funding round in 2021 created a bandwagon effect: It showed it was possible to raise significant amounts of money for fusion, and investors began to enter the space wanting to back their own Commonwealth.

None of these startups have managed to achieve breakeven or a burning plasma, but many are considered quite promising approaches by experts, and it’s believed that at least some will successfully build a power reactor.

How to think about fusion progress

After more than 70 years of research and development, we still don’t have a working fusion power reactor, much less a practical one. How should we evaluate the progress that’s occurred?

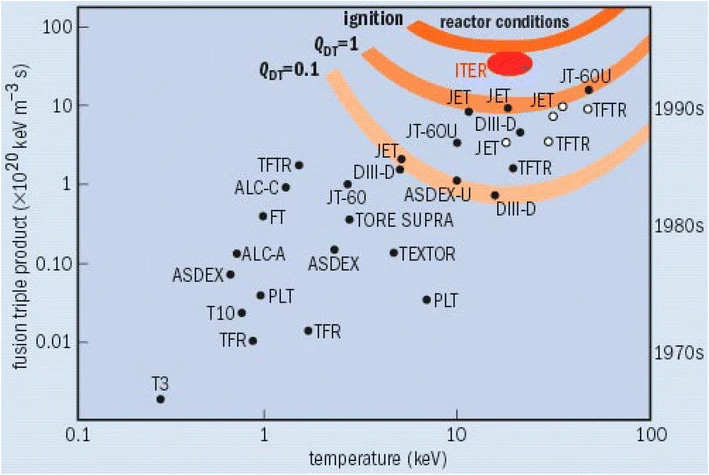

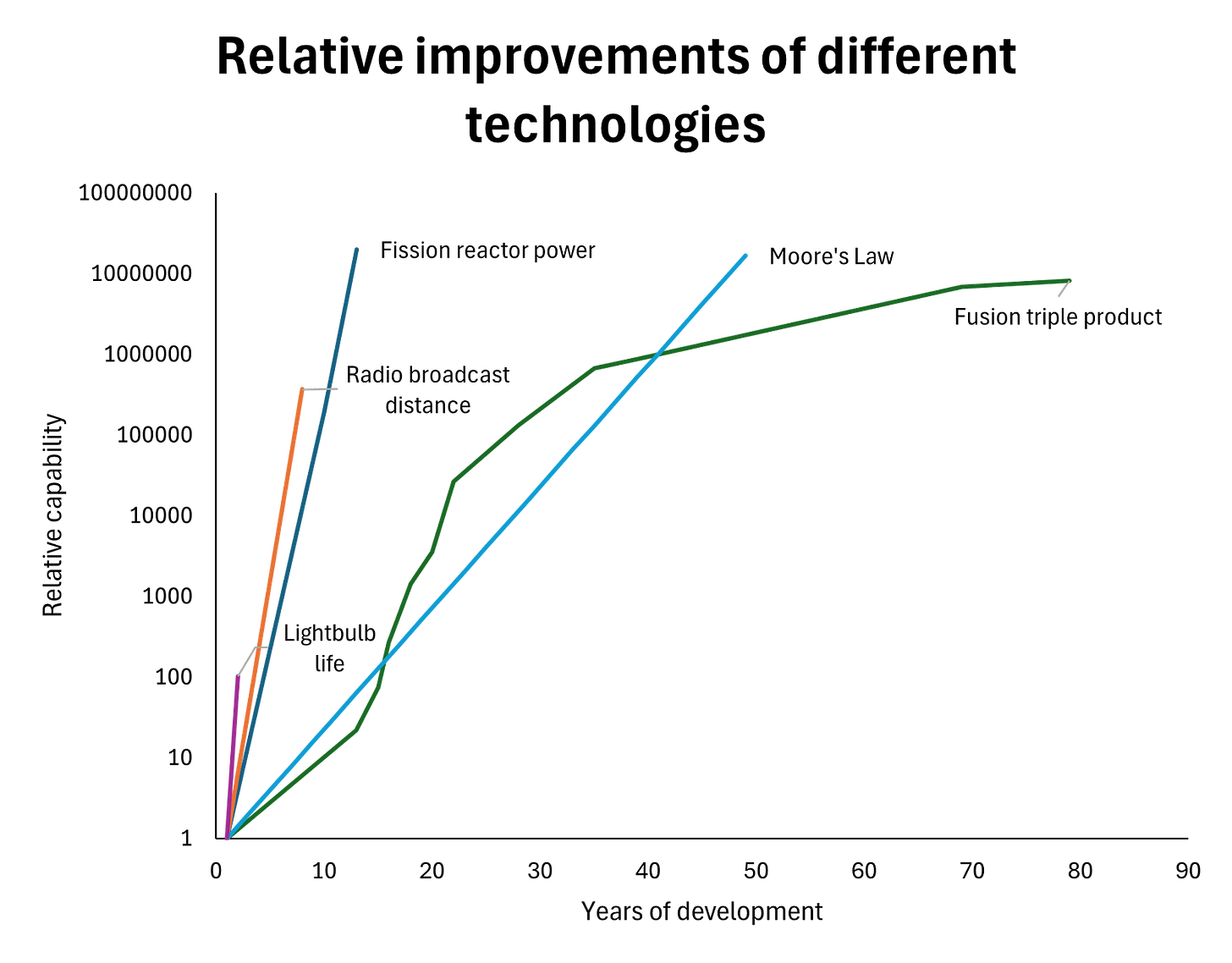

It’s common to hear from fusion boosters that despite the lack of a working power reactor, the rate of progress in fusion has nevertheless been impressive. You often see this graph comparing improvement in the fusion triple product against Moore’s Law, suggesting progress in fusion has in some sense been as fast or faster than progress in semiconductors. You can create similar graphs for other measures of fusion performance, such as reactor power output.

I think these sorts of comparisons are misleading. The proper comparison for fusion isn’t to a working technology that is steadily being improved, but to a very early-stage technology still being developed. When we make this comparison, fusion’s progress looks much less impressive.

Consider, for instance, the incandescent light bulb. An important measure of light bulb performance is how long it lasts before burning out: Edison’s experiment in 1879 with a carbon thread filament lasted just 14.5 hours (though this was a vast improvement over previous filaments, which often failed in an hour or so). By 1880 some of Edison’s bulbs were lasting nearly 1,400 hours. In other words, performance increased in a single year by a factor of 100 to 1,000.

Similarly, consider radio. An important performance metric is how far a signal can be transmitted and still be received. Early in the development of radio, this distance was extremely short. When Marconi first began his radio experiments in his father’s attic in 1894, he could only achieve transmission distances of about 30 feet, and even this he couldn’t do reliably. But in 1901, Marconi successfully transmitted a signal across the Atlantic between Cornwall and Newfoundland, a distance of about 2,100 miles. In just seven years Marconi increased radio transmission distance by a factor of 370,000.

Likewise, the world’s first artificial nuclear reactor, the Chicago Pile-1, produced just half a watt of power in 1942. In 1951, the Experimental Breeder Reactor-I, the first nuclear reactor to generate electricity, generated 100,000 watts of power. And in 1954, the first submarine nuclear reactors generated 10,000,000 watts of power, a 20-million-fold improvement in 12 years.

In the very early stages of a technology, when it barely works at all, a Moore’s Law rate of improvement — doubling in performance every two years — is in fact extremely poor. If a machine that barely works gets twice as good, it still only barely works. A jet engine that fails catastrophically after a few seconds of operation (as the early jet engines did) is still completely useless if you double the time it can run before it fails. Technology with near-zero performance must often get thousands or millions of times better, and many, perhaps most, technologies exhibit these rates of improvement early in their development. If they didn’t, they wouldn’t get developed: Few backers are willing to pour money into a technology for decades in the hopes that eventually it will get good enough to be actually useful.
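To make the comparison with Moore’s Law concrete, here is a minimal sketch that converts the three examples above into implied doubling times. It assumes smooth exponential improvement between the two quoted data points, which is a simplification; the function name and the choice of endpoints (for the light bulb, 14.5 hours to 1,400 hours) are mine, not from any source.

```python
import math

# Moore's Law as characterized in the text: performance doubles every two years.
MOORES_LAW_DOUBLING_YEARS = 2.0


def doubling_time_years(start, end, years):
    """Years per doubling implied by improving from `start` to `end` over `years`.

    Assumes steady exponential growth between the two points (a simplification).
    """
    doublings = math.log2(end / start)
    return years / doublings


# (start value, end value, elapsed years), using the figures quoted in the text.
examples = {
    "Edison bulb lifetime in hours, 1879-1880": (14.5, 1400, 1),
    "Marconi range in feet, 1894-1901": (30, 2100 * 5280, 7),
    "Fission reactor output in watts, 1942-1954": (0.5, 10_000_000, 12),
}

for name, (start, end, years) in examples.items():
    t = doubling_time_years(start, end, years)
    speedup = MOORES_LAW_DOUBLING_YEARS / t
    print(f"{name}: {end / start:,.0f}x overall, "
          f"one doubling every {t:.2f} years "
          f"(about {speedup:.0f}x the Moore's Law pace)")
```

Under these assumptions, each of the three technologies doubled in performance in well under a year during its early development, several times faster than a two-year doubling.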

From this perspective, fusion is notable not for its rapid pace of progress, but for the fact that it’s been continuously funded for decades despite a comparatively slow pace of development. If initial fusion efforts had been privately funded, development would have ceased as soon as it was clear that Spitzer’s simplified model of an easy-to-confine plasma was incorrect. And in fact, Spitzer had assumed that development would cease if the plasma wasn’t easy to confine: The fact that development continued anyway is somewhat surprising.

Fusion’s comparatively slow pace of development can be blamed on the fact that the conditions needed to achieve fusion are monumentally difficult to create. For most technology, the phenomena at work are comparatively simple to manipulate, to the point where many important inventions were created by lone, self-funded individuals. Even something like a nuclear fission reaction is comparatively simple to create, to the point where reactors can occur naturally on earth if there’s a sufficient quantity of fuel in the right conditions. But creating the conditions for nuclear fusion on earth — temperatures in the millions or billions of degrees — is far harder, and progress is necessarily attenuated.

Fusion progress looks better if we compare it to other technologies not from when development on them began, but from when the phenomena they leverage were first discovered and understood. Fusion requires not just building a reactor, but exploring and understanding an entirely new phenomenon: the behavior of high-temperature plasmas. Building the scientific understanding of a phenomenon inevitably takes time: Marconi needed just a few years to build a working radio, but his efforts were built on decades of scientific exploration of the behavior of electrical phenomena. Ditto with Edison and the light bulb. And the behavior of high-temperature plasmas, which undergo highly turbulent flow, is an especially knotty scientific problem. Fusion shouldn’t be thought of as a technology that has made rapid progress (it hasn’t), but as a combination of both technological development and scientific investigation of a previously unexplored and particularly difficult-to-predict state of matter, where progress on one is often needed to make progress on the other.

We see this dynamic at work in the history of fusion, where researchers are constantly pulled back and forth between engineering-driven development (building machines to see how they work) and science-driven development (developing theory to the point where it can guide machine development), between trying to build a power reactor and figuring out if it’s possible to build a power reactor at all. Efforts constantly flipped back and forth between these two strategies (going from engineering-driven in the 50s, to science-driven in the 60s, to engineering-driven in the 70s), and researchers were constantly second-guessing whether they were focusing on the right one.

Conclusion: the bull and bear cases for fusion

Despite decades of progress, it’s still not clear, even to experts within the field, whether a practical and cost-competitive fusion reactor is possible. A strong case can be made either way.

The bull case for fusion is that for the last several decades there’s been very little serious effort at fusion power, and now that serious effort is being devoted to the problem, a working power reactor appears very close. The science of plasmas and our ability to model, understand, and predict them has enormously improved, as have the supporting technologies (such as superconducting magnets) needed to make a practical reactor. ITER, for instance, was designed and planned in the early 2000s and will cost tens of billions. Commonwealth’s SPARC reactor will be able to approach its performance in many ways (it aims for a Q > 10 and a triple product around 80% of ITER’s), but at a projected fraction of the cost. With so many well-funded companies entering the space, we’re on the path towards a virtuous cycle of improvement: More fusion companies means it becomes worthwhile for others to build more robust fusion supply chains, and develop supporting technology like mass-produced reactor materials, cheap high-capacity magnets, working tritium breeding blankets, and so on. This allows for even more advances and better reactor performance, which in turn attracts further entrants. With so many reactors coming online, it will be possible to do even more fusion research (historically, machine availability has been a major research bottleneck), allowing even further progress. Many industries require initial government support before they can be economically self-sustaining, and though fusion has required an especially long period of government support, it’s on the cusp of becoming commercially viable and self-sustaining. At least one of the many fusion approaches will prove highly scalable, making it possible to build reasonably sized reactors at low cost, and fusion will come to supply a substantial fraction of overall energy demand.

The bear case for fusion is that, outside of unusual approaches like Helion’s (which may not pan out), fusion is just another in a long line of energy technologies that boil water to drive a turbine. And the conditions needed to achieve fusion (plasma at hundreds of millions or even billions of degrees) will inevitably make fusion fundamentally more expensive than other electricity-generating technologies. Even if we could build a power-producing reactor, fusion will never be anywhere near as cheap as simpler technology like the combined-cycle gas turbine, much less future technologies like next-generation solar panels or advanced geothermal. By the time a reactor is ready, if it ever is, no one will even want it.

Perhaps the strongest case for fusion is that fusion isn’t alone in this uncertainty about its future. The next generation of low-carbon electricity generation will inevitably make use of technology that doesn’t yet exist, be that even cheaper, more efficient solar panels, better batteries, improved fission reactors, or advanced geothermal. All of these technologies are somewhat speculative, and may not pan out — solar and battery prices may plateau, advanced geothermal may prove unworkable, etc. In the face of this risk, fusion is a reasonable bet to add to the mix.

Thanks to Kyle Schiller of Marathon Fusion for feedback on this article. All errors are my own.


Scientific breakeven is different than engineering breakeven; in the former, there is more energy leaving the plasma than entering it, while the latter includes all the power needed to run the entire reactor, including any ancillary systems.

Nice article. I especially liked the history of fusion research. A couple points:

(1) As you mentioned, the energy density (energy per unit mass) of fusion is four times higher than nuclear fission. However, the power density (power per unit volume) of fusion is 1-2 orders of magnitude lower than fission. In other words, fusion is great if you want to build a thermonuclear warhead, but if you want to build a power plant, fusion's low power density is a significant drawback compared to fission. To explain in more detail: LWRs have a power density on the order of 100 MW/m^3 in the core, while D-T fusion has a (maximum) power density of 0.1 MW/m^3 times the pressure (in atmospheres) squared. ITER is expected to have a pressure of 2.6 atm (0.5 MW/m^3), while tokamak power plants would likely have a pressure of about 7 atm (5 MW/m^3). So, a D-T fusion reactor is expected to have somewhere in the ballpark of 20x lower power density than a LWR.

(2) The power density (at constant pressure) of deuterium-tritium (D-T) fusion is 2 orders of magnitude higher than the second-highest power density reaction, deuterium-helium 3 (D-3He). Other fuels (such as D-D or p-11B) are roughly another order of magnitude lower still than D-3He. Anyone attempting to build a fusion reactor with "alternative" (non-D-T) fuels will either need to create extraordinarily high pressures, build an extraordinarily large reactor, or produce extraordinarily little power. In my opinion, it's almost impossible to imagine reactions with such low power densities being used for power plants.

(3) The "success" of a fusion reactor is often discussed, as it mostly is here, in terms of achieving a triple product greater than the Lawson criterion (8 atm*s for DT). However, there are two other essential things a fusion reactor must do. First, it must be able to create tritium fuel inside the reactor. Second, it must not destroy itself. These are extremely difficult engineering problems. Part of what makes them so challenging is that, while possible solutions can be analyzed theoretically before a reactor is built, those solutions can only be tested inside a power-producing reactor. Thus, progress on these difficult problems must be made in series, not in parallel. Only once you've spent a few billion dollars on a power-producing fusion reactor can you actually test whether it "works". At that point, you might not produce as much tritium fuel as you had calculated that you would. Or, your reactor could destroy itself -- and it is quite likely to do so, either via neutron damage, heat flux damage, or in a disruption. In my opinion -- and as far as I know this is a widespread view among academics unaffiliated with fusion startups -- it is certainly possible that one of the fusion startups will "successfully build a" [power-producing] machine, but it is very unlikely that any of them will successfully build a fusion power plant. The opposing view -- and to the best of my knowledge, this is how Commonwealth Fusion Systems thinks about these challenges -- is that the public excitement created by the achievement of high-Q magnetic confinement fusion will lead to so much investment in fusion that we'll be able to afford to solve those difficult engineering challenges later on. Kicking the can down the road, if you will. In any case, I think a discussion of the engineering challenges associated with fusion power plants is essential.

(4) Using the development of the atomic bomb as an analogy: in 1940, the Frisch-Peierls memorandum performed some simple calculations suggesting that an atomic bomb was theoretically possible, and would require a few kilograms of U-235. We now have enough knowledge, at least for tokamaks (the reactor concept with by far the best performance), to calculate whether a fusion reactor can scale to a power plant. This is done in "Designing a tokamak fusion reactor—How does plasma physics fit in?" (J.P. Freidberg et al., 2015), which writes "Unfortunately, a tokamak reactor designed on the basis of standard engineering and nuclear physics constraints does not scale to a reactor." In my opinion, this is a fundamental difference between the development of nuclear fusion and nuclear fission or the atomic bomb: with fusion, our best calculations suggest that it will not work. Or more precisely, tokamaks will not work barring significant improvements in certain engineering constraints.

I think you've done a nice job of laying out the bull and bear cases for fusion. However, I think the bear case for fusion could be made much stronger with some relatively simple technical arguments. (I've thought about writing some of that up myself, now that I'm done with my PhD and have some time off.)
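The power-density arithmetic in point (1) of the comment above can be sketched in a few lines. This is only an illustration of the rule of thumb as the comment states it (a maximum D-T power density of about 0.1 MW/m^3 times the square of the pressure in atmospheres, against roughly 100 MW/m^3 in an LWR core); the constant and function names are hypothetical, and the coefficient is taken from the comment rather than derived.

```python
# Figures quoted in the comment; names are illustrative assumptions.
LWR_CORE_POWER_DENSITY = 100.0   # MW/m^3, order of magnitude for an LWR core
DT_COEFFICIENT = 0.1             # MW/m^3 per atm^2, upper bound for D-T fusion


def max_dt_power_density(pressure_atm):
    """Upper bound on D-T fusion power density (MW/m^3) at a given plasma pressure."""
    return DT_COEFFICIENT * pressure_atm ** 2


for label, pressure in [("ITER", 2.6), ("tokamak power plant", 7.0)]:
    density = max_dt_power_density(pressure)
    ratio = LWR_CORE_POWER_DENSITY / density
    print(f"{label}: {pressure} atm -> at most {density:.2f} MW/m^3, "
          f"so an LWR core is roughly {ratio:.0f}x denser")
```

At 7 atm the bound comes out near 5 MW/m^3, consistent with the comment’s estimate of roughly 20x lower power density than an LWR. At 2.6 atm the bound is a little under 0.7 MW/m^3; the comment quotes about 0.5 MW/m^3 for ITER, which sits below that maximum.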

Thank you for this outstanding article. I teach fusion physics on a university level, and this is the best historical overview I have ever read. Keep up the great work!