Robot Dexterity Still Seems Hard

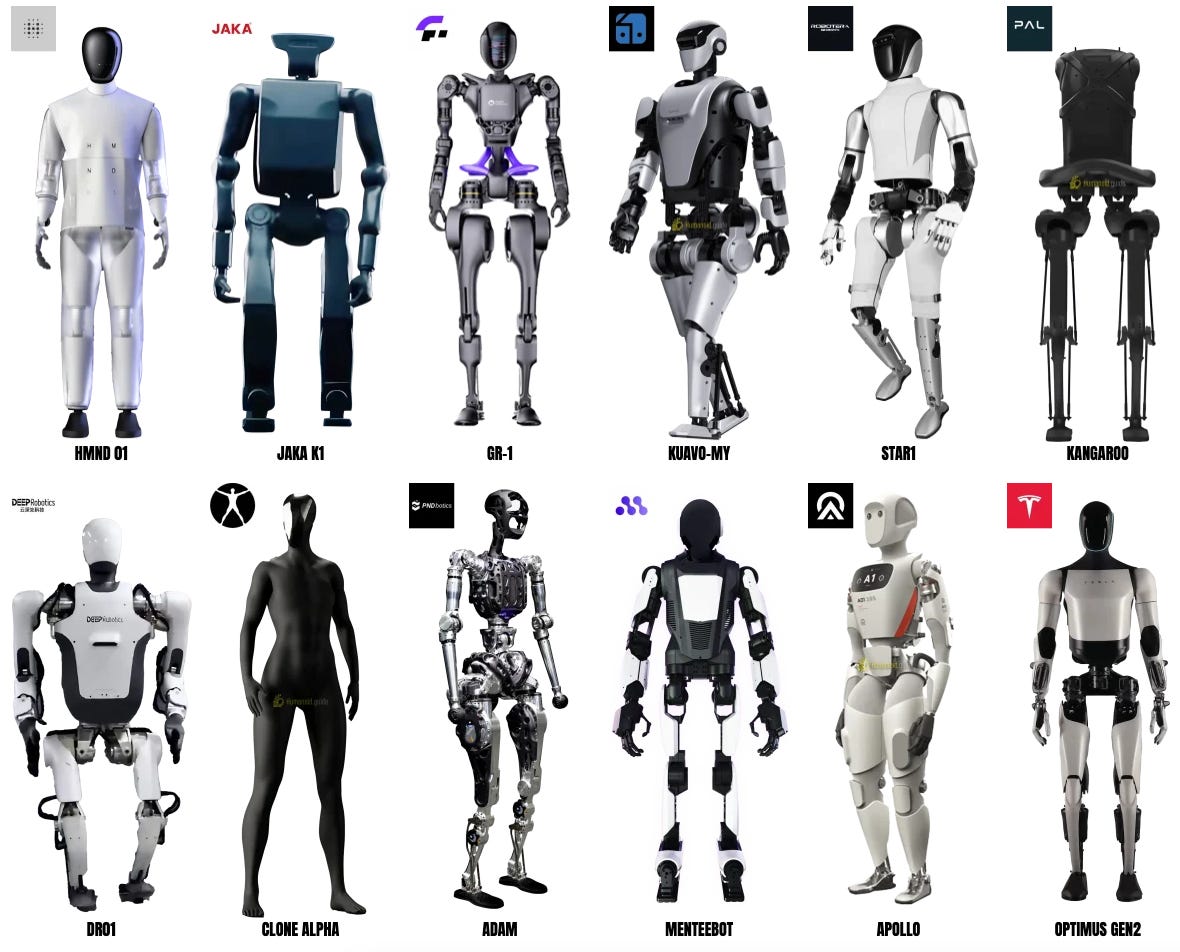

You can’t throw a rock these days without hitting someone trying to build humanoid robots. The Humanoid Robot Guide lists 47 different humanoids made by 38 different manufacturers, and this Technology Review article claims 160 companies worldwide are building humanoids. Many of these manufacturers are startups that have raised (or are raising) hundreds of millions of dollars in venture capital. 1X Technologies has raised around $125 million in funding, Apptronik has raised $350 million, and Agility Robotics is looking to raise $400 million. Figure AI has raised $675 million, and is aiming to raise $1.5 billion more. Since 2015, humanoid robot startups have raised more than $7.2 billion.

Other manufacturers are existing companies trying their hand at humanoids. Tesla is developing its Optimus humanoid, Unitree its G1, Boston Dynamics its Atlas. A variety of Chinese EV manufacturers, such as Xpeng, Xiaomi, and Nio, are also trying their hand at humanoids (and why not, as both electric vehicles and humanoid robots are fundamentally collections of batteries, electric motors, sensors, and control electronics).

These humanoids are also getting increasingly capable. Boston Dynamics has been showing off its humanoid capabilities for years, with the most recent Atlas iteration displaying the ability to run, crawl, somersault, and cartwheel. Unitree has shown off its G1 doing kung fu, boxing, flips, and kip ups. Booster Robotics has shown its T1 kicking a soccer ball, and EngineAI has shown its humanoid doing elaborate dance routines. And while not quite as showy, a variety of humanoids now have the ability to walk with a smooth, human-like gait, including (but not limited to) Figure's 02, Tesla’s Optimus, and Xpeng’s Iron. This past weekend, a humanoid half marathon was held in Beijing, where 21 humanoid robots competed to complete the race (though only 6 finished, and they did so with significant help from human assistants).

Beyond these sorts of demos, humanoids are also starting to be tested in the field to see if they can do useful work. Agility Robotics’ Digit is moving containers in warehouses. Figure’s 02 robot is being tested in a BMW assembly plant, and Apptronik’s Apollo is being trialed with Mercedes. Unitree’s G1 is being used to install and repair electric power components in China. In a recent TED Talk, the CEO of 1X showed off its Neo robot doing household tasks like vacuuming and watering plants, and the company plans to start deploying Neo to homes later this year (though this seems like a data-gathering exercise to help improve robot behavior, rather than the sale of a polished and developed consumer product).

While a lot of these capabilities are impressive, robot progress still seems somewhat uneven to me. It’s cool to see these robots move in such human-like ways, but as former OpenAI Chief Research Officer Bob McGrew notes, “Manipulation is the hard problem we need to solve to make humanoid robots useful, not locomotion.” The value of a humanoid robot isn’t whether it can dance, run, or flip, but how capable it is at manipulating objects in the real world. And while manipulation capabilities are improving, they appear to have a very long way to go.

Dexterity is hard

Robots have long had the ability to make extremely precise movements. This industrial robot arm from FANUC, for instance, has a repeatability of plus or minus 0.03 millimeters. Robots can also vary their actions based on environmental feedback. This video shows delta robots rapidly grasping and moving randomly placed objects on a conveyor belt. Likewise, when we looked at welding robots, we noted that there’s a variety of feedback systems that robots can use to keep the robot aligned to the weld.

What robots have traditionally struggled with isn’t controlled, precise movements, but dexterity, which is something like “the ability to manipulate a broad variety of objects in a broad variety of ways, quickly and on the fly.” Humans can complete almost any object manipulation task you ask them to do — folding a piece of clothing, opening a gallon of milk, wiping up a spill with a cloth — even if it's an object and/or task they’ve never encountered before. With robots, on the other hand, while it’s usually possible to automate any specific task (given the right hardware, enough time, and a narrow enough task definition), building a robot that can flexibly perform a variety of actions in a novel or highly variable environment is much harder. Robotic flexibility has improved over time (it’s much easier today to program a welding robot to follow a new path, for instance), but this flexibility still exists within a very narrow range of acceptable variation. The delta robot system above can grab randomly positioned objects, but would almost certainly require reprogramming if the size and shape of the objects changed, and I wouldn’t be surprised if even varying the color of the objects was enough to disrupt the existing automation.

This is an instance of what’s known as Moravec’s Paradox: the idea that tasks that seem to require a lot of intelligence are often relatively easy to get a machine to do, while tasks that are simple for humans are often incredibly difficult to automate. It’s trivial to get a computer to do calculus, but building a robot that can unwrap a bandaid and put it on — something a two-year-old can do — is massively more difficult.

Humanoids and dexterity

The current crop of humanoid robots seem to be making progress on dexterity, but are still nowhere near human capabilities. In the TED Talk above where 1X’s Neo displays its capabilities, the robot starts by picking up a vacuum cleaner and pushing a button to turn it on, but it doesn’t push the button smoothly (its finger seems to first miss the button then slide over). When Neo grabs a watering can, it has a hard time putting its fingers around the handle and gripping it tightly, and withdrawing the hand when it's done (Neo ultimately has to use its other hand to help free it from the can). In another video of Neo operating in a home, Neo pours water into a pot to make pour-over coffee, but seems to struggle to keep the kettle steady, and it doesn’t seem to be able to pour the water from the pot into a cup (a human assistant does that). Neo struggles to fold a shirt (not surprising, as folding clothes has long been a fiendishly difficult task to get robots to do), and while it successfully picks up an egg, the motion is jerky and imprecise. Neo also slides the egg container across the counter very awkwardly (it lays its hand on the container and moves it rather than gripping it), and it can’t remove the egg from the container smoothly.

Aside from folding the shirt (which Neo doesn’t appear to completely fold), none of these tasks are particularly dexterously demanding. They all involve manipulating relatively large, rigid objects that behave predictably, without a great deal of precision. For more dexterously demanding tasks, it’s obvious Neo would struggle even more. It’s also notable that Neo’s actions are done with a combination of automation and teleoperation (where a human remotely controls Neo), so its actual autonomous capabilities are even less than what we see.

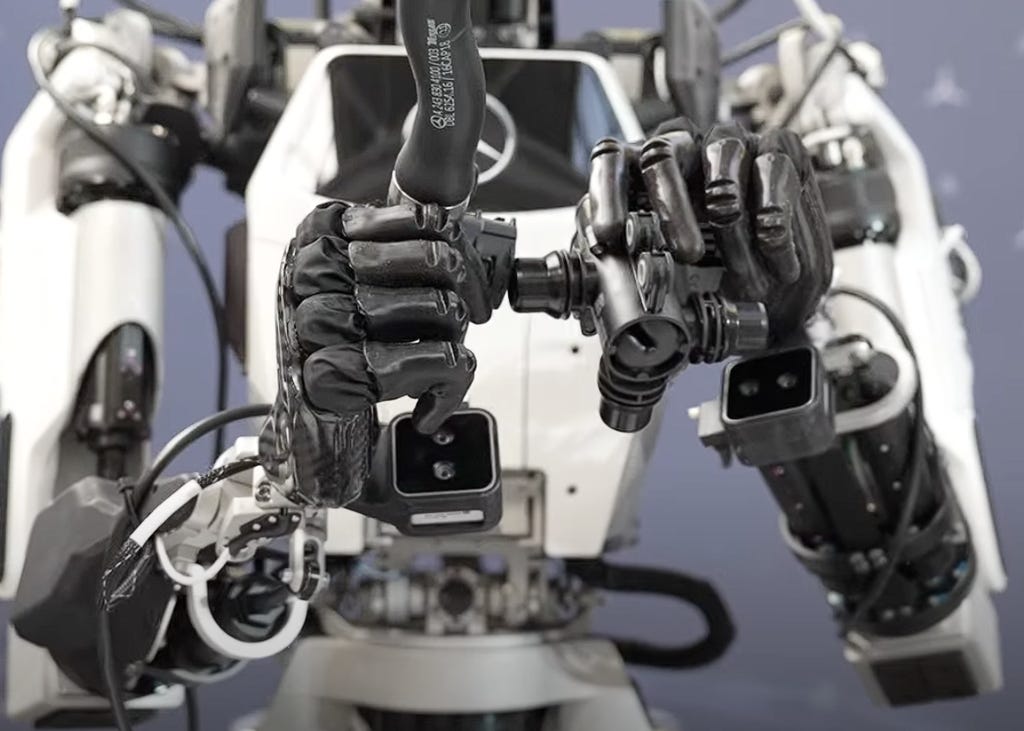

I used Neo as my first example since there’s a lot of available footage of it manipulating objects, but its performance seems to be broadly comparable to other humanoids. The factory-type tasks done by Figure’s 02, Boston Dynamics’ Atlas, and Apptronik’s Apollo all appear to involve moving large, rigid objects in relatively straightforward ways, and they often seem to struggle with precise, subtle movements. 02 moves large parts on fixtures aligned to receive them (probably allowing some imprecision in where the robot drops it), though to its credit 02 does this fairly smoothly and quickly. Apptronik’s Apollo is shown here attaching two parts together, but we can see that it struggles a bit to get them properly aligned and attached. Boston Dynamics’ Atlas is shown here moving large, rigid parts from one container to another, but when one of the parts gets caught on a container edge, the robot doesn’t seem to notice until there’s a lot of resistance, and isn’t able to fix it without backing off and starting over completely.

The robotic dexterity problem is such that humanoid demos and deployments will often avoid complex manipulation tasks. Agility’s Digit, for instance, seems to be often equipped with simple, hinged effectors capable of holding the sides of a container but not much else. At other times it has little claws that are capable of grasping, but don’t seem like they could do more complex movements. Unitree has a lot of impressive demonstrations showing the movement of the G1, but most of those demonstrations don’t include much if any object manipulation (though this video shows it grabbing and cracking nuts, and this one demonstrates some likely-teleoperated capabilities of a new, humanlike hand). Boston Dynamics’ Atlas demonstrations are often done without any hands or manipulators attached to the robot. Tokyo Robotics’ Torobo demonstrations are likewise done with tools attached directly to the robot’s arms, without any sort of dexterous effectors.

The fact that humanoids (and robots in general) still struggle with dexterity isn’t exactly a secret. Roboticist Rodney Brooks recently predicted that deployable humanoid robot dexterity will be “pathetic” compared to humans beyond 2036.

There are some examples of impressive-looking robotic dexterity demonstrations. Five years ago OpenAI showed off a robotic hand autonomously solving a Rubik’s Cube. Figure recently released a demo showing two 02 robots working together to put away previously unseen objects in a kitchen. Not only are the movements smooth (though not particularly fast), but the robots are capable of handling deformable objects like bags of pasta. Google DeepMind has several videos showing a Gemini-powered pair of robot arms doing dexterously demanding tasks like autonomously manipulating a timing belt, folding origami, and plugging in an electrical socket. (Though there are also videos that show some struggles: here the arms struggle to precisely put glasses in a glasses case.) But it’s not clear to me how much these capabilities extend beyond controlled demonstration conditions.

It’s also my sense that many impressive robot capabilities are the result of a lot of training on that specific task. This isn’t nothing, but it feels like an incremental, evolutionary advance to me. We already live in a world where almost any given specific task can be automated with enough investment, and past automation advances have often been about reducing the upfront investment required to automate some specific task without eliminating it entirely. Industrial welding robots follow specific, preprogrammed paths, but they were adopted by the car industry because it was easier to reprogram a robot to follow a new path than to retool fixed automation. Getting a robot to perform a task by way of extensive, task-specific training feels like another step along these lines.

Related to this, it’s also often difficult to tell how “real” robotic capabilities are from videos. There’s always the possibility of careful editing to make capabilities look more impressive than they are, or of filming many attempts and only showing the successful ones, or of teleoperation (where a human controls the robots’ movements). We noted above that many of 1X’s actions are in practice teleoperated, and most of the impressive-looking demonstrations of Tesla’s Optimus (such as shirt-folding) are also teleoperated. In general I assume that if an impressive-looking robot demo doesn’t specifically say that a task is done autonomously, there’s a good chance it wasn’t.

Dexterity difficulties

From what I can tell, difficulties in dexterous manipulation are partly a hardware problem and partly a software problem. On the hardware side, current robotic manipulators are very far from being as capable as a human hand. Human hands are very strong while being capable of complex and precise motions, and it’s difficult to match this with a robot hand. Robot hands are often surprisingly weak. An average man has enough grip strength to lift 40 kg or more off the ground (20 kg in each hand), and a strong man can lift upwards of 100 kg. By contrast, NASA’s Robonaut 2 hand had a payload capacity of 9 kilograms, and the Shadow dexterous hand (billed as the “most advanced 5-fingered robotic hand in the world”) has a payload capacity of just 4 kilograms.

More importantly, human hands are extremely sensitive, and capable of providing a lot of tactile feedback to help guide our actions. A human hand has around 17,000 touch receptors, and is sensitive enough to discriminate between textures that differ by mere nanometers. Robot hands are getting better, but still don’t appear to be close to what a human hand can do. This robot hand, for instance, boasts “17 tactile sensors,” and this one from Unitree has 94.

Dexterous robot hands also tend to be extremely expensive. The Robonaut 2 hand apparently cost on the order of $250,000 apiece, and the Shadow dexterous hand costs around $100,000. Unitree’s G1 humanoid starts at just $16,000, but that price doesn’t include any hands at all. Add a pair of hands to that and you’re looking at another $16,000, and those will be less capable 3-fingered hands with limited sensor capabilities. (If I’m reading the specs right, the 3-fingered Unitree hands only have a payload capacity of 0.5 kilograms.)
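To put the hardware numbers above side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted in this section; the variable and function names are my own, and it treats "weight a hand can lift by grip" and "rated payload capacity" as comparable, which is an assumption, since the two are not measured the same way.

```python
# Figures quoted in the text; treating grip lift and rated payload
# as comparable is an assumption made only for this rough comparison.
HUMAN_LIFT_KG_PER_HAND = 20       # average man, per hand
HUMAN_TOUCH_RECEPTORS = 17_000    # per hand

payload_kg = {
    "Robonaut 2 hand": 9.0,
    "Shadow dexterous hand": 4.0,
    "Unitree 3-fingered hand": 0.5,
}
tactile_sensors = {
    "17-sensor robot hand": 17,
    "94-sensor Unitree hand": 94,
}


def ratio(human: float, robot: float) -> float:
    """How many times larger the human figure is than the robot figure."""
    return human / robot


for name, kg in payload_kg.items():
    print(f"{name}: a human hand lifts about {ratio(HUMAN_LIFT_KG_PER_HAND, kg):.1f}x as much")

for name, count in tactile_sensors.items():
    print(f"{name}: a human hand has about {ratio(HUMAN_TOUCH_RECEPTORS, count):.0f}x as many touch sensors")

# Base G1 price plus a pair of 3-fingered hands, both as quoted above.
g1_with_hands_usd = 16_000 + 16_000
print(f"Unitree G1 with a pair of hands: ${g1_with_hands_usd:,}")
```

On these numbers the gap in sensing (roughly 180x to 1,000x) is far larger than the gap in lifting capacity (roughly 2x to 40x), which fits the point that tactile feedback matters more than raw strength.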

But getting better, cheaper hardware is (probably) only part of the dexterity problem. Humans are capable of being incredibly dexterous without hands at all: amputees can carefully manipulate a wide variety of objects quickly and precisely using only hooks. The impressive Gemini demonstrations are also done with very limited robotic manipulators. Capable robotic hardware needs to be paired with software that can sequence the right actions, and make adjustments based on environmental feedback, and right now this sort of software still seems nascent.

Dexterity evals

A popular area of focus with AI right now is “evals”: tests to evaluate the capabilities and competencies of AI models. I’m not aware of any such evals for robotic dexterity, so below is my first attempt. Here is a list of 21 dexterously demanding tasks that are relatively straightforward for a human to do, but I think would be extremely difficult for a robot to accomplish.

Put on a pair of latex gloves

Tie two pieces of string together in a tight knot, then untie the knot

Turn to a specific page in a book

Pull a specific object, and only that object, out of a pocket in a pair of jeans

Bait a fishhook with a worm

Open a childproof medicine bottle, and pour out two (and only two) pills

Make a peanut butter and jelly sandwich, starting with an unopened bag of bread and unopened jars of peanut butter and jelly

Act as the dealer in a poker game (shuffling the cards, dealing them out, gathering them back up when the hand is over)

Assemble a mechanical watch

Peel a piece of scotch tape off of something

Braid hair

Roll a small lump of playdough into three smaller balls

Peel an orange

Score a point in ladder golf

Replace an electrical outlet in a wall with a new one

Do a cat's cradle with a yo-yo

Put together a plastic model, including removing the parts from the plastic runners

Squeeze a small amount of toothpaste onto a toothbrush from a mostly empty tube

Start a new roll of toilet paper without ripping the first sheet

Open a ziplock bag of rice, pull out a single grain, then reseal the bag

Put on a necklace with a clasp

These all require some combination of rapid or precise movements, adjusting motions based on subtle tactile feedback, and manipulating small, fragile, or deformable objects. Completing these tasks at all would be impressive, and the faster they could be done the more dexterously capable the robot. As with AI evals, ideally these would be done without the system being trained on these tasks specifically (I haven’t looked, but it wouldn’t surprise me if there were purpose-built systems capable of doing these tasks right now).
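One way the list could be turned into a number is sketched below in Python. This is only an illustration of the idea that completion matters first and speed second. The scoring rule (zero for a failure, otherwise human time divided by robot time, capped at 1) and every timing in the example are hypothetical, not measurements of any real robot.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Attempt:
    task: str
    completed: bool
    robot_seconds: Optional[float] = None  # None if the task was not completed
    human_seconds: float = 60.0            # hypothetical human baseline time


def task_score(attempt: Attempt) -> float:
    """Return 0 for a failure, otherwise human time / robot time, capped at 1."""
    if not attempt.completed or not attempt.robot_seconds or attempt.robot_seconds <= 0:
        return 0.0
    return min(1.0, attempt.human_seconds / attempt.robot_seconds)


def eval_score(attempts: list[Attempt]) -> float:
    """Mean task score across all attempts, between 0.0 and 1.0."""
    if not attempts:
        return 0.0
    return sum(task_score(a) for a in attempts) / len(attempts)


# Made-up results for three tasks from the list above.
attempts = [
    Attempt("Peel an orange", completed=True, robot_seconds=120.0, human_seconds=30.0),
    Attempt("Put on a necklace with a clasp", completed=False),
    Attempt("Turn to a specific page in a book", completed=True, robot_seconds=10.0, human_seconds=10.0),
]

print(f"Overall dexterity score: {eval_score(attempts):.2f}")
```

Capping each task at 1 means a robot can't make up for failing one task by being superhumanly fast at another, which seems closer to what "dexterous" should mean.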

What progress will we see in dexterity?

Humanoid robotic dexterity is improving, but as a non-robotics expert it’s not obvious to me what sort of improvement trajectory it's on. One possibility is that humanoid robots follow a path like self-driving cars: robots gradually get more and more capable and dexterous, but improvement is slow and painstaking, resolving edge cases is hard, and rollout is very slow. This is probably my default model for progress on something that requires gathering a lot of real-world training data, but I can easily imagine other trajectories. If software proves to be the primary bottleneck to performance improvement, and things like simulation and synthetic data allow for rapid performance increase without needing to collect a huge amount of real-world data, rollout might be much faster. On the other hand, I can imagine progress being much slower if performance improvement ends up requiring capturing a lot of high-fidelity tactile data from hardware that doesn’t exist yet.

Self-driving cars also may not be the best reference class for humanoid robot deployment. A self-driving car needs to be very, very reliable before it can be usefully deployed given the high costs of an accident, but depending on where they’re deployed this may not be true for humanoid robots, especially if failures are along the lines of “move an object incorrectly and need to try again”. Slow, plodding task performance might be acceptable in many situations if the alternative is more expensive or otherwise undesirable. A Roomba doesn’t need to follow the most efficient path and clean the floor in the least amount of time to be useful; it just needs to work autonomously most of the time. Humanoid robots might be similar.

And of course, even as dexterity improves (or doesn’t) there’s no guarantee that the humanoid form factor will win out. A humanoid robot has obvious advantages (it fits nicely into a world that has been designed for humans to work in), but for many tasks other form factors are likely superior. So it’s still far from clear to me what our robotic future looks like.

Thanks to Ben Reinhardt and Hersh Desai for reading a draft of this and providing feedback. All errors are my own.

I teach robotics to HS students. "Manipulation is the problem" is something we talk about quite a bit. I show them the old DARPA challenge videos and they get a laugh at robots trying to just open a door. Robots have gotten better, but simple tasks still baffle them. Why? Tactile sensor density.

Computer vision has gotten very good: high resolution, AI pattern rec, the robot knows what's around it. But manipulation isn't visual. It's tactile, and tactile sensor density simply hasn't kept up. Human tactile resolution in the fingertip is about 1/2mm. And that's not just binary -- "am I touching something?". It's quite complex: "How much pressure?"; "Is it hot or cold?"; "Is it hard or soft?" The human palm is less sensor dense, but still far denser than any robotic fingertip. This is the biggest limitation to humanoid robots today: they can't feel. (And I don't mean emotions.)

You're also correct that dexterity and strength are largely a tradeoff and probably always will be. Strength requires higher power servos, but their larger size limits how many of them can be put in something small like a finger. Also, the higher pressures of lifting heavy things tend to damage the tactile sensors needed for more dexterous applications. This is likely unsolvable, but it won't matter long-term. Robots will become cheap enough that they will be specialized. The unit that can crack eggs for an omelet doesn't need to lift 50 pounds.

Vision is there. AI is there. Servos are close. But tactile sensors are the biggest hurdle. There's lots of folks working on this, and it's going to get there. But it's not there yet.

It's not just tactile sensor efficacy and density that's the issue. It's proprioception, or kinesthesia. Having a "sense" of position and movement, both of one's own body and the surrounding environment, without the use of visual or auditory inputs. Another commenter mentioned having an "almost visual image" of the task of buttoning a shirt. That's what we're talking about here.

This is vitally important to human (or, really, animal) dexterity. It's not enough to just have the tactile input. Or, rather inputs, because as a different commenter mentioned, our sense of touch is multi-channel in terms of both the number and types of inputs, all of which are analog, not binary. Pick up a pencil. The sensation you experience is an integration of signals sent by potentially thousands of different nerve endings. Those signals are integrated in both time and space, with each signal being interpreted in relation to the others. That's how you can know that a ball is round, for instance: integrating the sensation from your entire hand taking into account the position of each finger in relation to the whole.

This appears to be a very difficult problem even for biological nervous systems. Humans, and most mammals, appear to have a pretty good sense of proprioception. But mammals are vertebrates. We have rigid internal skeletons. Our limbs may move in relationship to each other, but their dimensions are basically fixed. This means that our nervous system can treat its own dimensions as a constant.

Which is why we're so awkward around puberty, for what it's worth: for a while there, we grow faster than our nervous system has time to account for. We literally outgrow our own feet.

Anyway, this is probably why animals like octopuses don't appear to have much in the way of proprioception. Their legs are boneless. They can change not only the relative position of their legs (independently!) but bend them at every point along their length as well as change both the diameter and length of each one. Their nervous systems are pretty damn complex, though clearly not as complex as ours. But unlike mammals, with our rigid limbs, their nervous systems can't take any of their own dimensions as a given. So they basically don't bother with proprioception, as far as we've been able to tell.

Octopuses can get away without having proprioception because they're basically all legs, live in the water (meaning their limbs don't have to support their own weight or the weight of things they're trying to manipulate), and they are basically infinitely flexible. This means that for an octopus, the answer to "Where are my legs?" is effectively "Everywhere!" They can also squeeze themselves through any hole larger than their eyeballs, which is a pretty neat trick.

Needless to say, we can't build robots that way. But that being the case, we're left trying to replicate an incredibly sophisticated, analog, multi-variate, multi-channel sensory phenomenon with digital, algorithmic brute force.