Today, the electrical grid has over 500,000 miles of high-voltage transmission lines and more than 5 million miles of lower voltage distribution lines, which supply power from nearly 6,000 large power plants. Together, this system supplies more than 4 trillion kilowatt-hours of electricity to the US each year. The extent of it has led the US electrical grid to be called “the largest machine in the world.”

Building this machine took decades, millions of workers, and billions of dollars. Today investor-owned electric utilities collectively own nearly $1.6 trillion in assets, some of which have been in use for more than a century. The Whiting hydroelectric power plant was built in 1891 and still operates today, and the 2018 Camp Fire in California was caused by a PG&E transmission line built in 1921.

Modern civilization would be impossible to operate without cheap, widely available electric power. The grid makes modern society possible. But today, the grid faces several challenges that threaten its ability to distribute electric power. They are increasing use of variable sources of electricity, decreasing grid reliability, increasing delay in building electrical infrastructure, and increasing demand for electricity. Addressing these challenges will require massively rebuilding our electrical infrastructure. How we choose to do this will shape the future of the grid.

Introduction of variable sources of electricity

For most of its history, electric power generation worked like any other machine - it was turned on when it was needed, and turned off when it wasn’t. Power plants had a well-defined amount of generation capacity, and a well-understood amount of time it took them to ramp up or down, making it relatively straightforward (if not exactly simple) to meet demand for electricity - turn on enough power plants to make supply match demand. Even variable sources of electricity like hydroelectric power were predictable in the short term, and able to ramp up and down as needed.

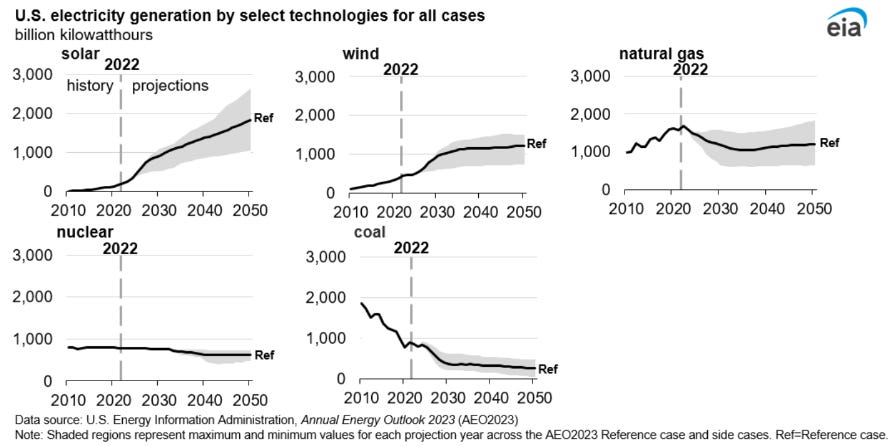

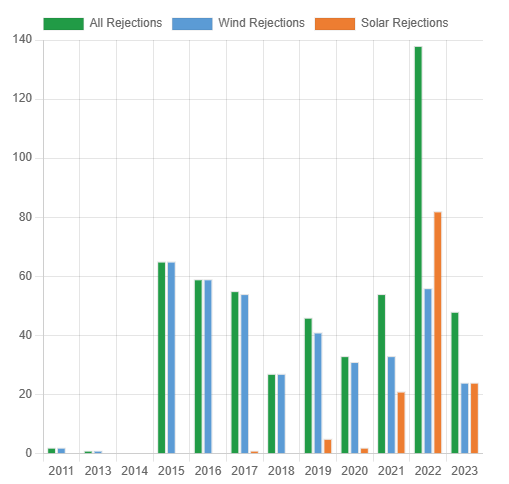

But the predictability of power generation is slowly going out the window. Predictable, firm sources of electricity (such as coal plants) are being removed from the grid, and being replaced by sources of electricity generation such as wind and solar, which fluctuate in their output based on whether the wind is blowing or the sun is shining. Between 2011 and 2020, roughly one-third of US coal plants were shut down. By 2030, the remaining fleet is expected to shrink by another 25%. By contrast, of the 150 gigawatts of new electrical generation projects being tracked by the EIA, two-thirds are wind or solar projects.

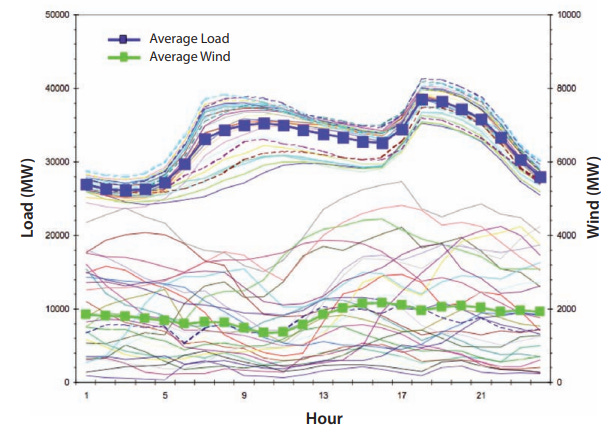

Because large-scale electricity storage is essentially non-existent today (less than 1% of current generation capacity), electricity generation and consumption must balance, day by day, hour by hour, minute by minute. When electrical generation can be turned off and on on demand, and provides a steady, predictable amount of power, this is comparatively straightforward - errors in load forecasting are typically on the order of just 1%. With variable sources of generation, which might vary 15-30% from their projected next-day output, this becomes more difficult, and requires greater reserve margins to ensure electricity demand can be met if variable generation is less than predicted.
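To make the arithmetic concrete, here is a rough sketch of how forecast error might translate into reserves. Only the 1% load error and the 15-30% variable-generation error come from the text above; the 50 GW system, the 30% variable share, and the simple "cover the worst-case miss" rule are illustrative assumptions, not how any particular grid operator sizes reserves.

```python
def reserve_needed_gw(demand_gw, variable_share,
                      load_error=0.01, variable_error=0.30):
    """Reserve (GW) needed to cover worst-case forecast misses.

    Hypothetical rule: reserves must cover the load forecast error plus
    a shortfall in variable generation equal to its forecast error.
    """
    load_miss = demand_gw * load_error
    variable_miss = demand_gw * variable_share * variable_error
    return load_miss + variable_miss


# Assumed 50 GW system, first with no variable generation, then with 30%.
firm_only = reserve_needed_gw(50.0, variable_share=0.0)
with_variable = reserve_needed_gw(50.0, variable_share=0.30)

print(f"All-firm fleet:     {firm_only:.1f} GW of reserve")
print(f"30% variable fleet: {with_variable:.1f} GW of reserve")
```

Under these assumptions the reserve requirement rises from 0.5 GW to 5.0 GW, which is the sense in which variable generation demands greater reserve margins.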

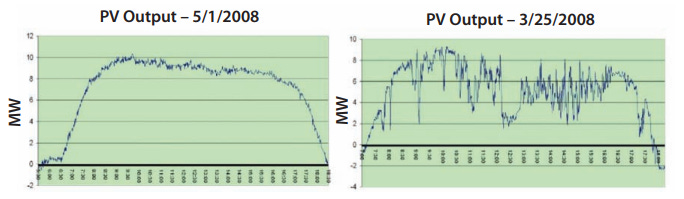

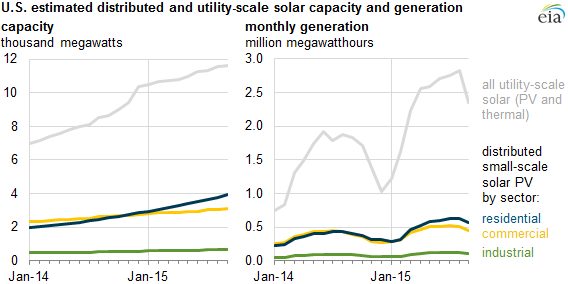

Most wind and solar power is generated at large, utility-scale “farms” that generate several megawatts of power. However, solar power is also increasingly being generated via rooftop solar panels mounted to individual homes and businesses, which generate power in the kilowatt range. These “distributed energy resources” (DERs) are typically located “behind the meter” - unlike traditional power plants, they’re controlled by the individual home or business owner, not the utility company, though they can still feed power to the grid. Beyond being another variable source of generation, DERs can put stress on the grid that it wasn’t designed to handle. Most distribution systems were designed for one-way flow from central power stations to consumers, but high-enough DER penetration can cause power to flow from customers back through substations to transmission lines, a reverse flow that they weren’t designed for.

What’s more, distribution systems were historically designed with a “fit and forget” approach – they were sized to accommodate peak load, but weren’t built with the monitoring or control systems that would allow active, flexible management that variable sources of electricity require. Variable sources of electricity like solar and (to some degree) wind also lack much of the grid-steadying effect you get when generation is provided by the large rotating masses of conventional generators. Variable sources of electric power upend much of the logic that the power grid was built on. As Gretchen Bakke notes in her book “The Grid,” the grid “isn’t made for modern power.”

Decreasing grid reliability

The increasing use of variable sources of electricity makes maintaining grid reliability – ensuring that electricity supply and demand are balanced at all times – more challenging. When supply can’t meet demand, the result is brownouts, blackouts, and requests for customers to use less power.

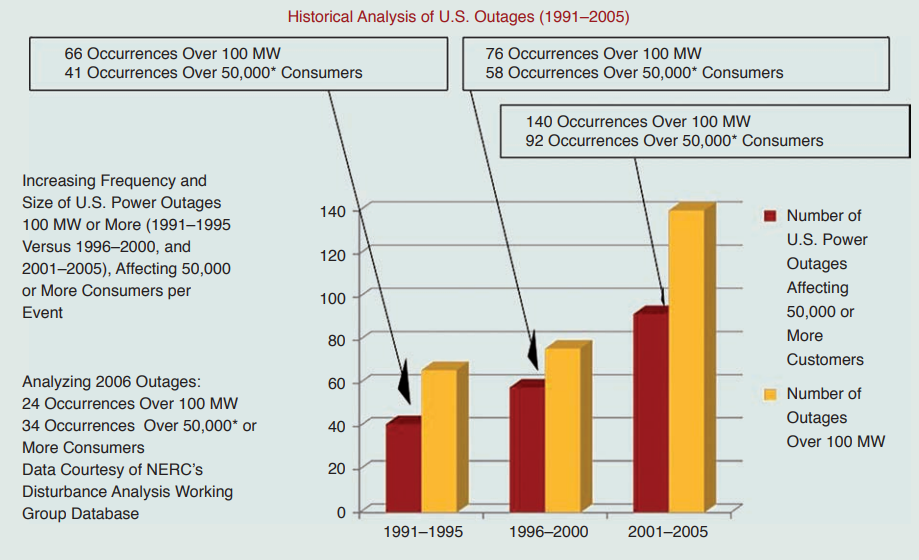

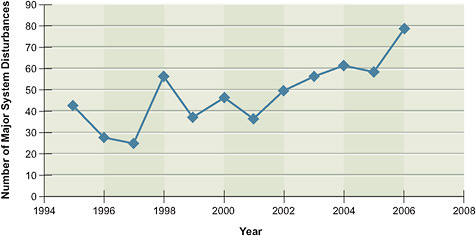

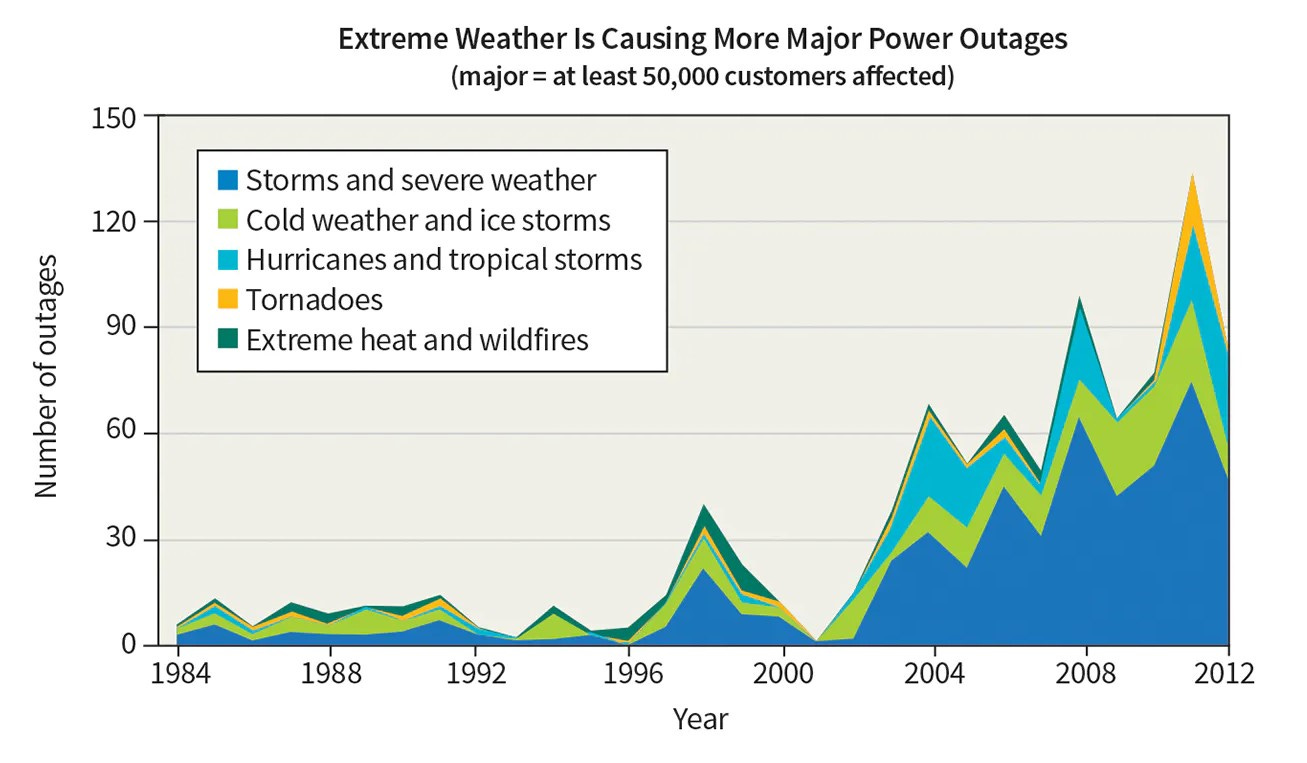

But variable generation is far from the only reliability issue on the modern grid. Grid reliability has been steadily decreasing since the 1990s, long before renewables were being widely deployed. Gretchen Bakke notes that “between the 1950s and 1980s, outages increased modestly, from two to five significant outages each year, compared with 76 in 2007 and a whopping 307 in 2011.” Between the early 1990s and the early 2000s, the number of outages affecting more than 50,000 customers more than tripled.

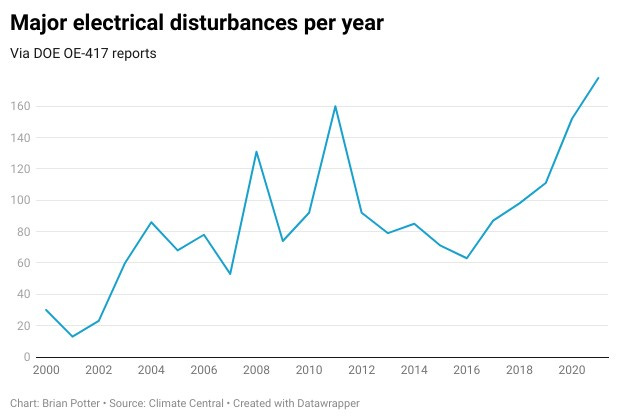

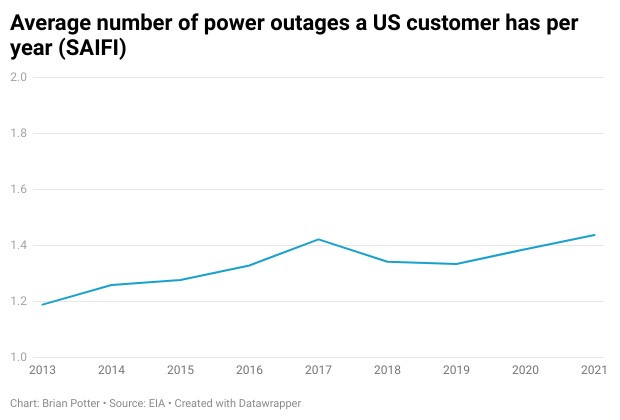

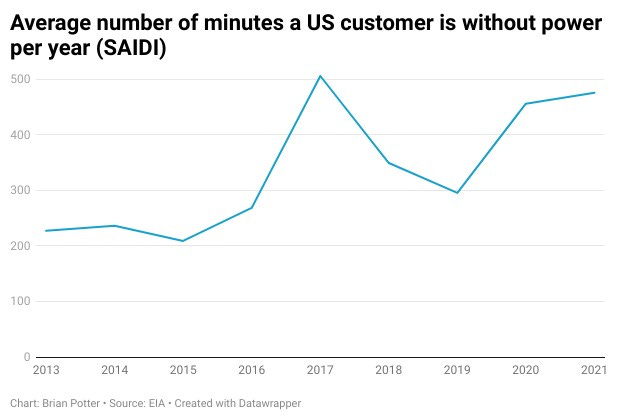

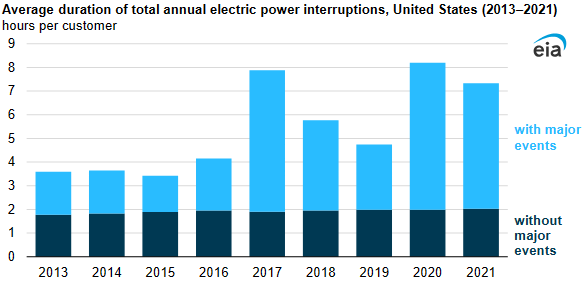

In 2009, a report from the DOE’s Electricity Advisory Committee warned that the current electrical infrastructure “will be unable to ensure a reliable, cost-effective, secure, and environmentally sustainable supply of electricity for the next two decades.” Since then, reliability has continued to decline. Major disturbances to the electrical system are at a 20-year high, the frequency of power outages has increased, and the average yearly total outage duration has more than doubled.

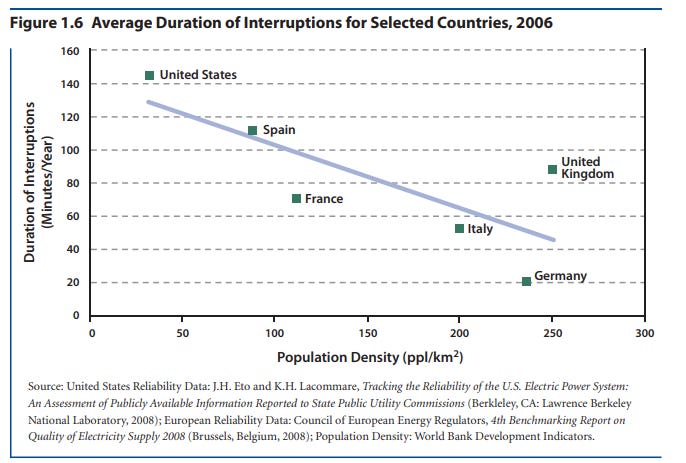

In 2006, the average yearly duration of power outages in the US was seven times that of Germany. Since then, US grid outages have increased to 475 minutes on average, whereas in Germany they are just over 12 minutes a year, less than one-thirtieth of the US figure.

What’s behind this decrease in reliability?

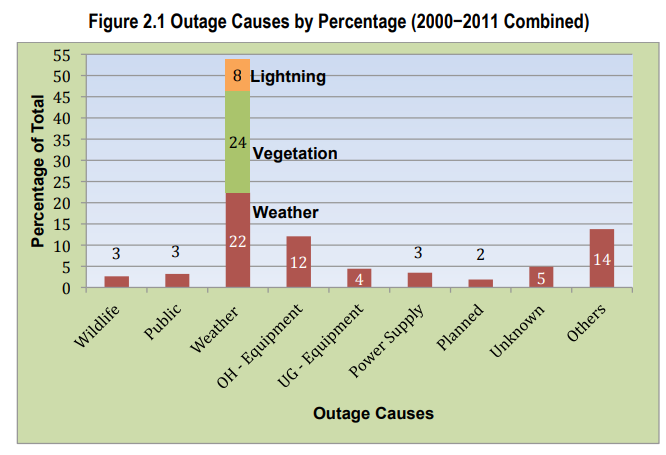

In large part this seems to be due to increasing extreme weather. The majority of power outages (90%) are due to a failure in the distribution system, the (comparatively) low voltage wires that bring power directly to your house. High winds during storms, ice during extreme cold weather, and high heat causing power lines to sag can all knock out distribution lines.

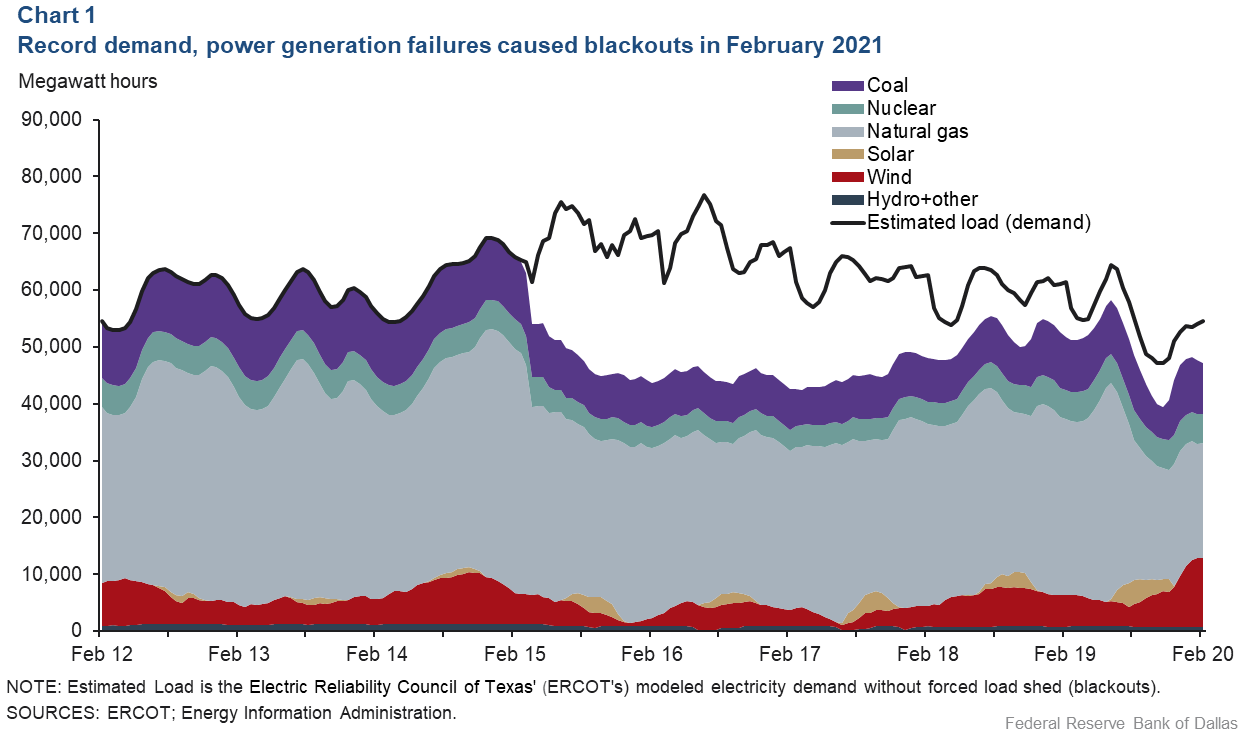

Extreme weather can also shut down electricity generation, as it did in 2021 during Winter Storm Uri in Texas. A similar issue was only averted in 2018 in New England due to ISO-NE’s Winter Reliability program, where power plants store oil on-site as a backup in case natural gas supplies are interrupted.

In some cases, extreme weather can strike many parts of the grid at once. In a combined heatwave and drought, for instance, there is less hydroelectric power available at the same time power demand spikes from increased air conditioner use. Heatwaves and droughts also increase the risk of wildfire, which (among other things) can knock out power lines. In 2020 in California, a heatwave and drought forced residents to conserve power to avoid overloading the grid.

Over the past several decades, weather-related power outages have become more common. In 1985 there were fewer than 10 outages affecting more than 50,000 customers caused by extreme weather. In 2011, there were more than 120.

Since then, the average yearly per-customer outage time due to “major events” (snowstorms, wildfires, hurricanes, etc.) has more than tripled.

Between 2000 and 2020, the number of major electric disturbance events due to extreme weather went from 10 to more than 140.

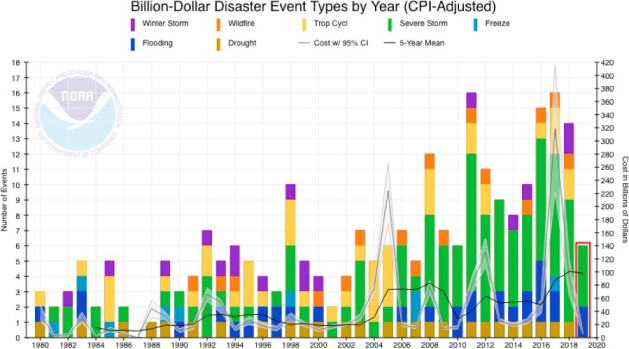

And since the 1980s, the frequency of destructive weather events has increased dramatically:

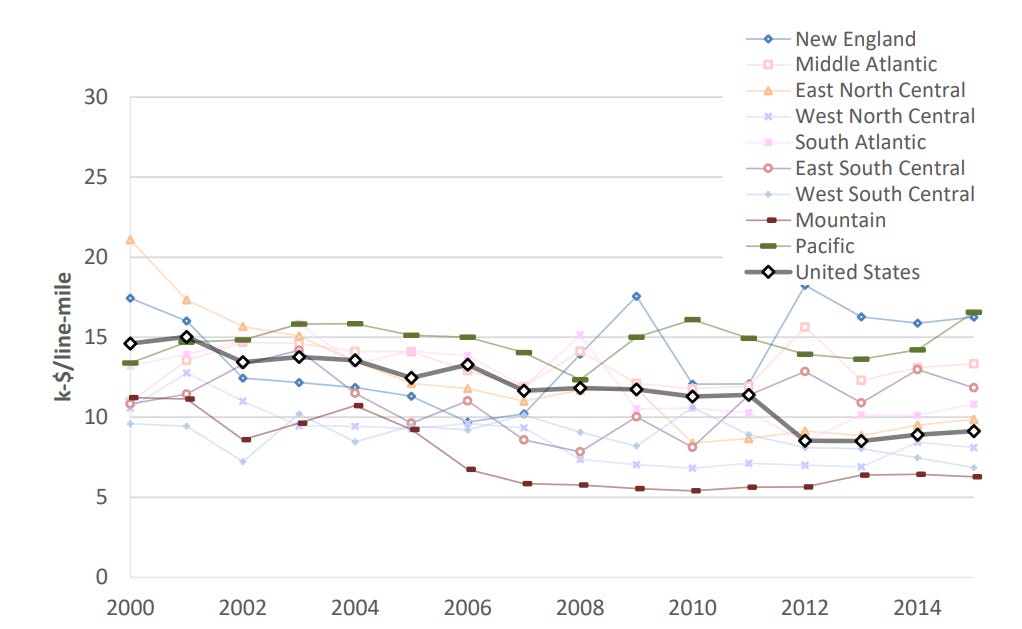

Some experts place the blame for this on decreased maintenance. Gretchen Bakke argues that distribution utilities have been cutting back on tasks like tree-trimming, which increases the likelihood that a rogue limb can knock out a portion of the grid. The 2003 Northeast Blackout initially started when a sagging transmission line hit the branch of a tree that had been allowed to grow more than 50 feet tall. A year earlier, the owner of the transmission line, FirstEnergy, had decreased its tree-trimming schedule from every 3 years to every 5 years. A 2004 fire in Nevada was likewise caused by an excessively tall tree hitting a power line, and the transmission owner (PG&E) had likewise cut back on its tree-trimming operations (decreasing frequency from every 3 years to every 5, and reducing crews from 3 workers to 2). Between 2000 and 2015, utility spending on distribution per-mile fell by more than a third.

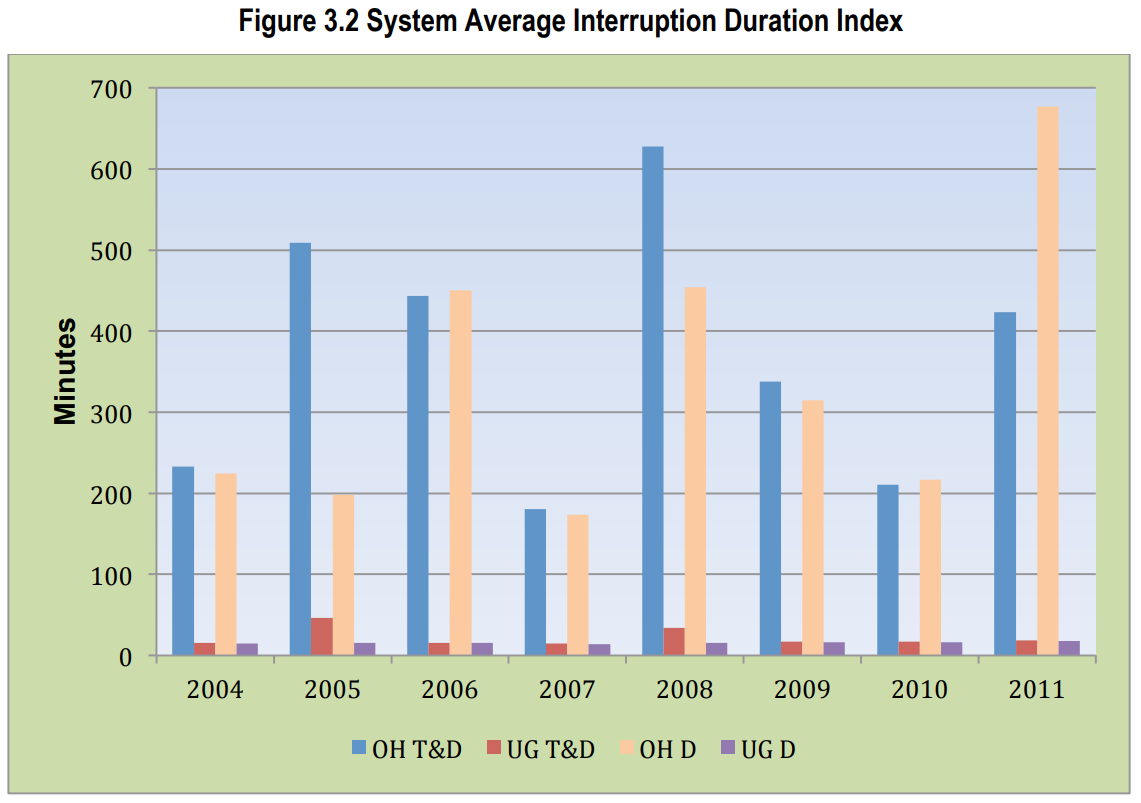

But increasing effects of extreme weather are also likely due to the simple fact that US distribution lines are mostly mounted on poles, and thus highly exposed to weather. In Germany, by contrast, 80% of distribution lines are buried. If we look at US outage frequency broken down by overhead vs underground, we see that the vast majority of outage minutes are caused by a failure in an overhead powerline.

Similarly, in urban areas (which have a much greater fraction of buried power lines), average yearly power outage time is much lower. In 2003, average yearly outage time in urban areas was between 30 seconds and 5 minutes per year, compared to between 9 hours and 4 days in rural areas.

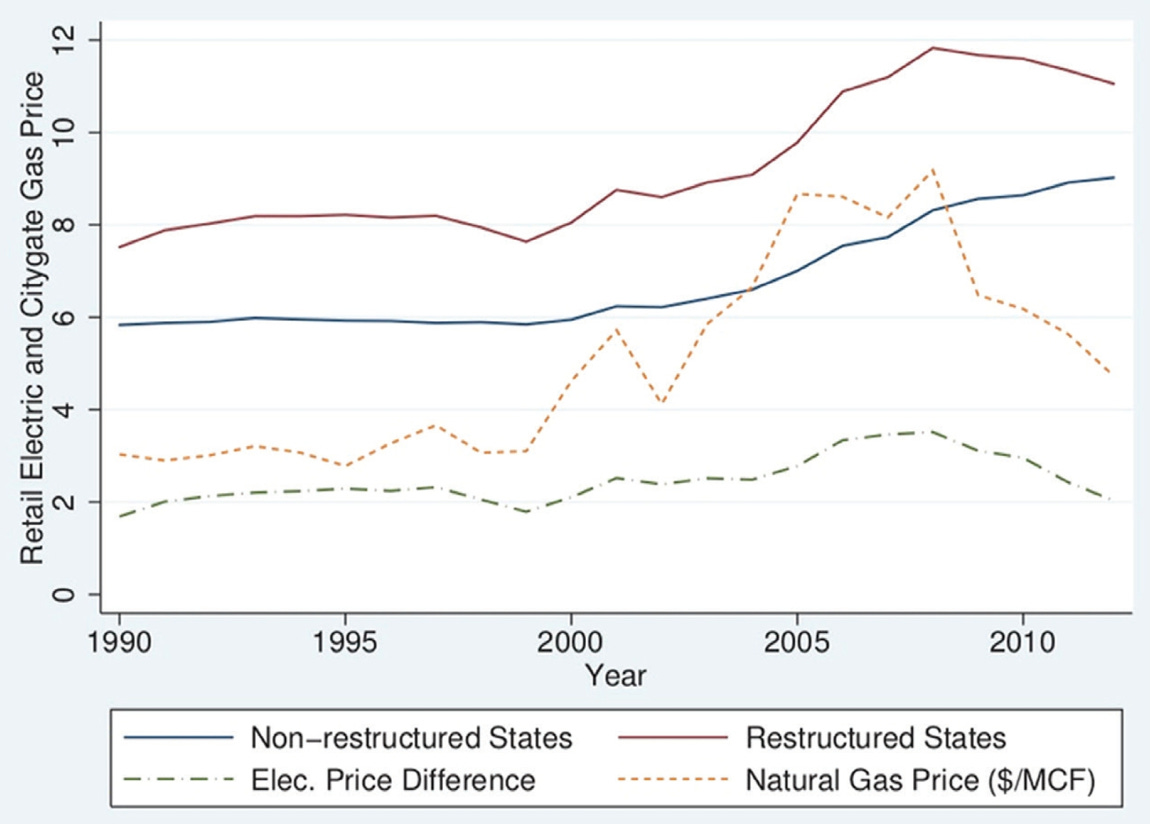

Some experts, such as Gretchen Bakke and Meredith Angwin, put at least part of the blame for decreasing electricity reliability on industry restructuring, aka “deregulation.” Utilities opening up their transmission lines resulted in power getting transmitted longer and longer distances, which places greater stress on the grid. And as Emmett Penney notes, the structure of wholesale power markets often makes it difficult to implement measures that would address grid reliability:

ISOs cannot demand that power plants weatherize, store fuel, or remain on deck to keep the system going. That would bolster the “market power” of traditional plants which provide the baseload power that ensures reliability. In other words, it would be market favoritism. Instead, arcane and kludgy seasonal capacity auctions have been contrived in the hope of solving reliability issues, with limited success and with great resentment among everyone involved. In the case of the Midcontinent Independent System Operator (MISO), where the fragility is particularly acute, the independent market monitor said that recent capacity auction results to get the region through the summer are “the outcome we’ve been worried about for a decade.” MISO is now facing serious capacity shortfalls that leave the region, especially its northern half, vulnerable to blackouts.

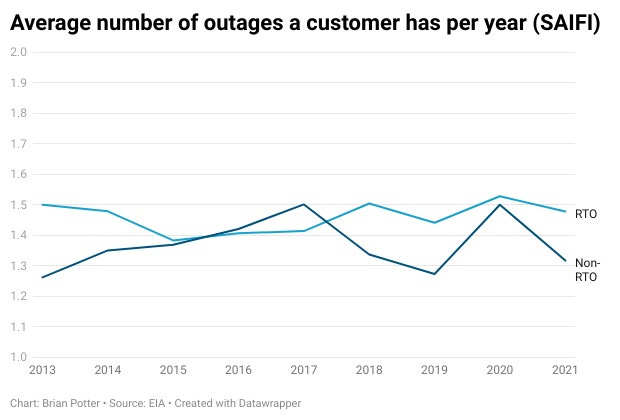

Areas with ISO/RTO management do generally show both lower reliability and higher electricity costs than non-RTO areas.

Thus, even before the massive addition of variable sources of generation, grid reliability had been steadily declining.

Increasing demand

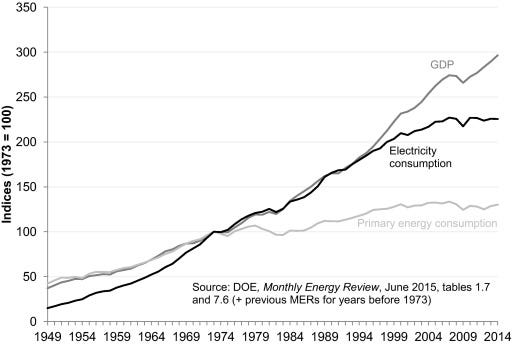

Between 1920 and 1970, electricity demand grew around 7% per year, more than twice as fast as overall GDP (and before 1920, demand grew even faster). However, since the energy crises of the 1970s, the rate of growth in electric power demand has decreased considerably. Electricity use per-capita peaked in the early 2000s, and since 2010 overall electricity demand has been largely flat.
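For a sense of what sustained growth of around 7% per year between 1920 and 1970 implies, the short calculation below compounds that rate over 50 years. The 7% figure is the approximate rate quoted above; treating it as constant every year is a simplifying assumption.

```python
import math


def growth_factor(annual_rate, years):
    """Total multiple after compounding annual_rate for the given years."""
    return (1 + annual_rate) ** years


def doubling_time_years(annual_rate):
    """Years needed for demand to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)


# Assumes a constant 7% rate across the whole 1920-1970 period.
factor_1920_1970 = growth_factor(0.07, 50)
doubling = doubling_time_years(0.07)

print(f"Demand multiple over 50 years: {factor_1920_1970:.1f}x")
print(f"Doubling time: {doubling:.1f} years")
```

At that rate demand doubles about every ten years and grows roughly thirtyfold over five decades, which helps explain why flat demand since 2010 is such a break from the historical pattern.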

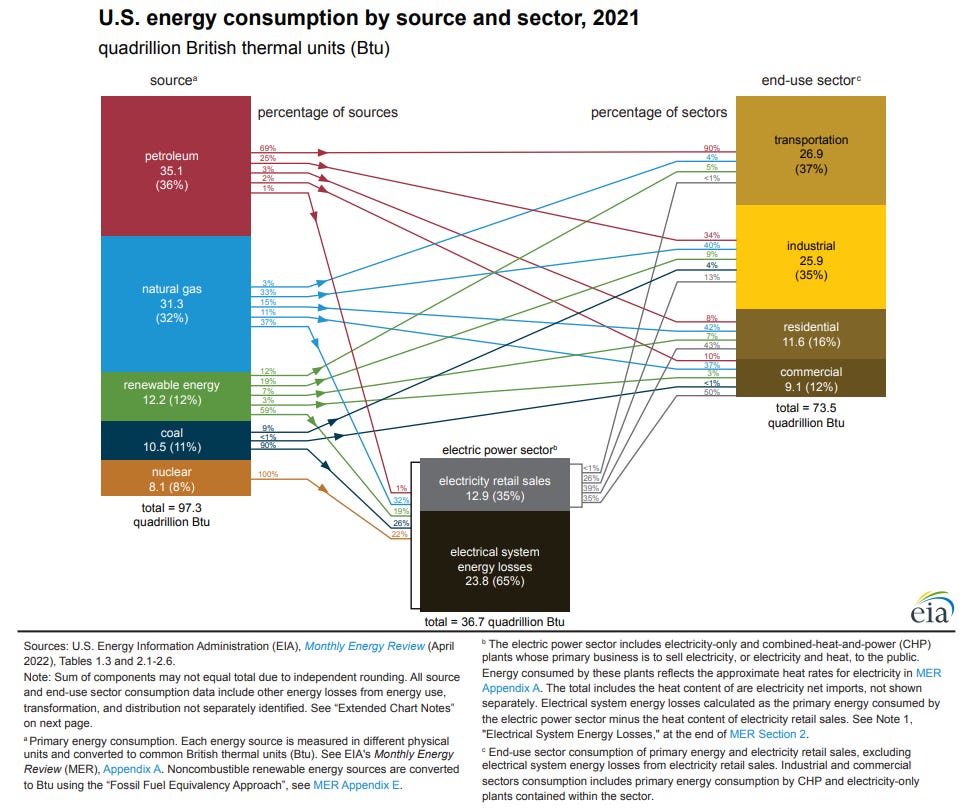

But this is likely to change in the future. Historically, a huge amount of energy in the US was generated directly from burning hydrocarbons – using natural gas or fuel oil for heat, gasoline to power cars and airplanes, burning coke in blast furnaces. Of the 73.5 quadrillion BTUs of energy the US used in 2021, less than 20% of it was provided via electric power.

But decarbonizing energy generation likely means electrifying that other 80% of energy use: replacing gas-burning cars with electric cars, replacing gas furnaces with electric heat pumps, replacing gas stoves with induction stoves, replacing blast furnaces with electric arc furnaces and direct iron reduction, and so on.

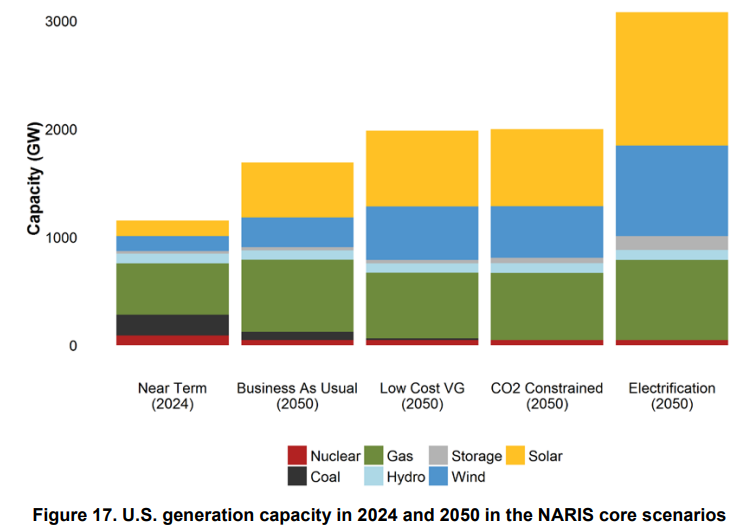

Large-scale electrification would require an enormous increase in electric power generation. In “Electrify,” Saul Griffith estimates that electrification of all fossil-fuel power sources would require quadrupling the amount of electricity we currently generate (and that’s AFTER significantly cutting energy demand by way of various efficiency improvements). An NREL model similarly estimated that wide-scale electrification would require nearly tripling electricity generation by 2050 (and that does not totally eliminate carbon emissions, only reduces them by 80% from 2005 levels).

Delay

The enormous amount of construction that large-scale electrification requires will be made harder by the fact that, since the 1970s, it has become increasingly difficult to build electrical infrastructure in the US.

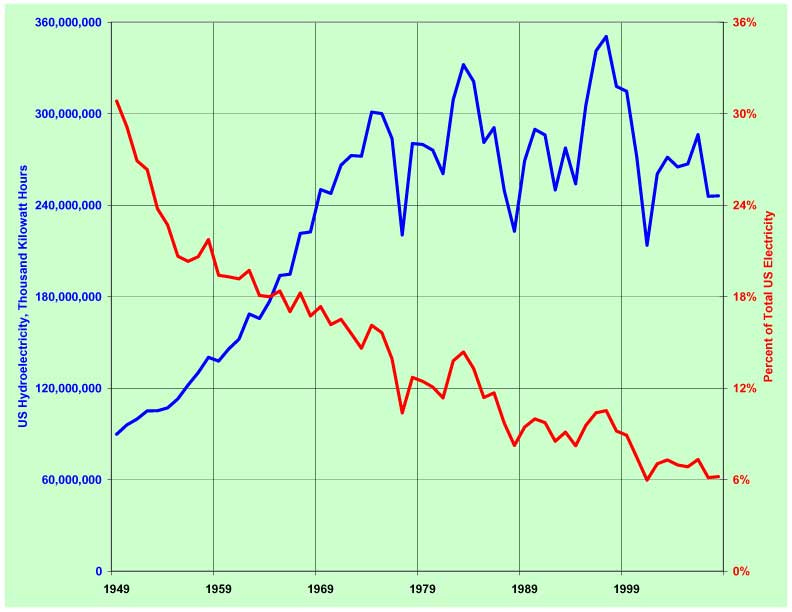

Some types of infrastructure have become nearly impossible to build. Nuclear power plants, for instance, have become so time-consuming and expensive to construct in the US that it’s almost impossible to build one profitably. Of the two attempts at building new nuclear reactors in the US in the last 20 years, one was canceled after spending billions of dollars, and the other is seven years late and $17 billion over budget. There are currently no new large nuclear plants in EIA’s list of planned power plants (the only nuclear plant at all is a small modular plant being built in Idaho). Large hydroelectric power plants have similarly been made almost impossible to build. Large hydroelectric dam construction largely stopped in the 1970s due to environmental concerns, and US hydroelectric power production peaked in the mid-1990s, despite the fact that the US has only tapped roughly 16% of its potential hydropower resources. While there are 80 planned hydroelectric projects currently being tracked by the EIA, they’re all very small facilities, many of them installing small turbines on existing, non-powered dams.

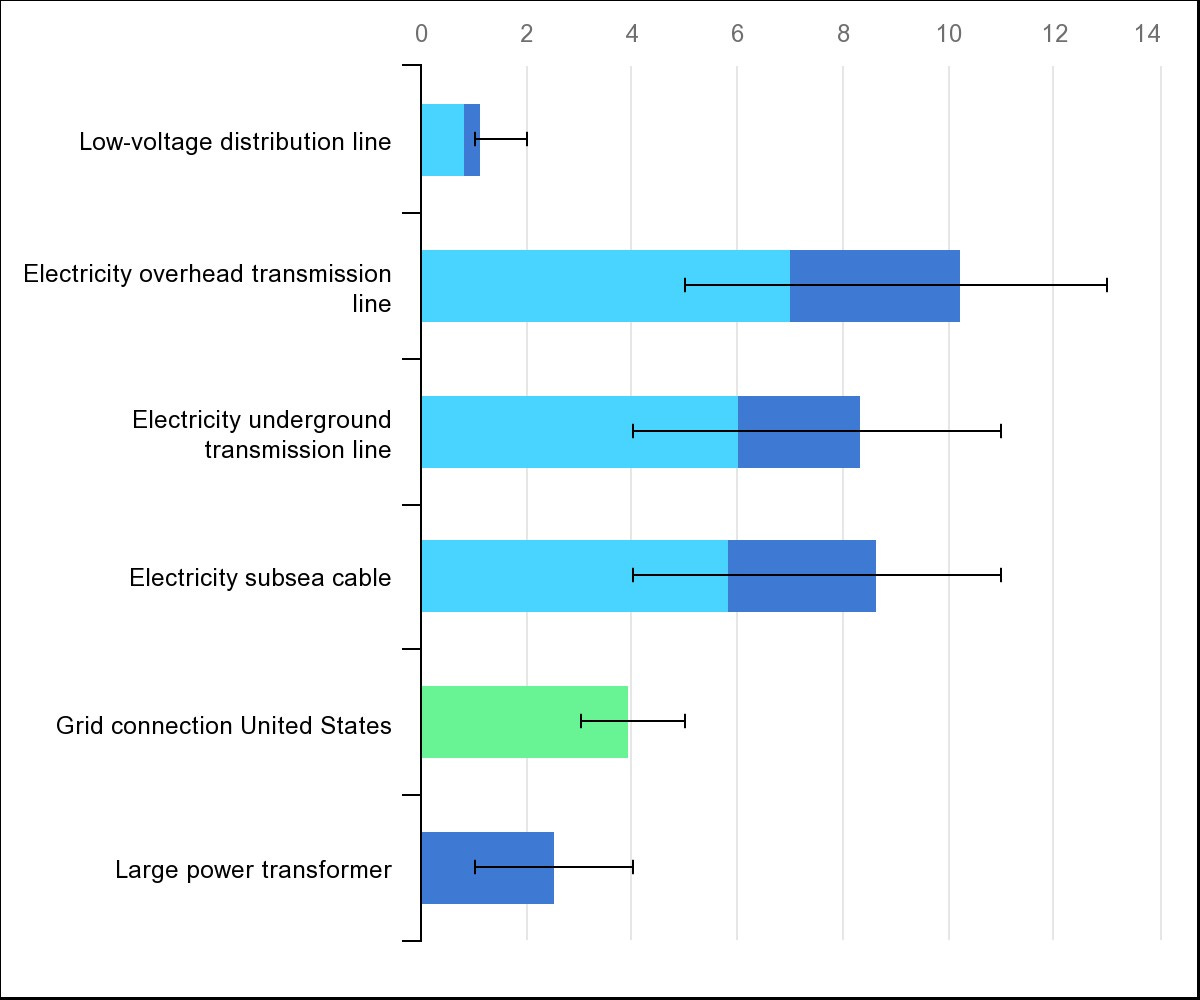

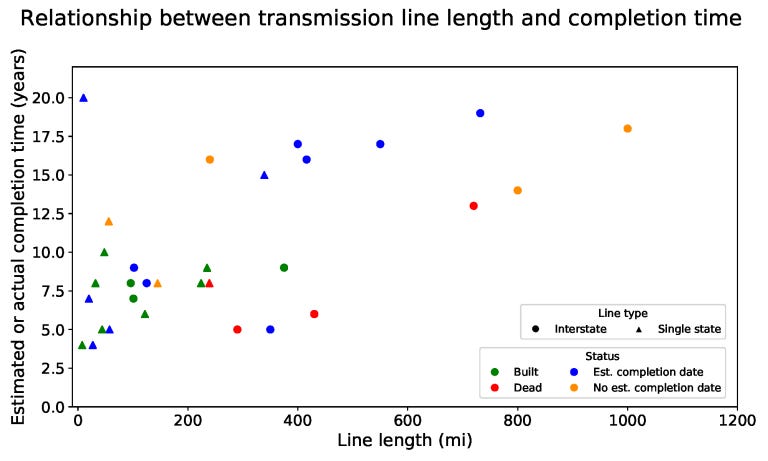

In other cases, rather than being impossible, electrical infrastructure is merely incredibly time consuming to build. Transmission lines, for instance, take on average 10 years to build in the US, and in some cases can take up to 20 years.

Most of this time is spent on getting the proper permits. Transmission lines require approval from every state (and in some states, every county) that they cross, and states often only consider in-state benefits when making their decision, rather than the benefits to the grid as a whole. This makes it difficult for long-distance transmission lines that don’t provide power to the states they cross to get approval. Transmission lines also need to secure the right to build on every parcel of land that they cross, which for long transmission lines can be thousands of parcels. The Grain Belt Express, a planned transmission line stretching from Kansas to Indiana, needs approval from 1700 landowners, many of whom are still holding out.

As with any large construction project, local residents often oppose new transmission construction, though transmission lines seem especially likely to galvanize opposition. In 1974 a proposed 430-mile transmission line in Minnesota, which used eminent domain to secure construction rights on agricultural land, was met by opposition from thousands of protestors:

Farmers employed tractors, manure spreaders, and ammonia sprayers and used direct action and civil disobedience in an attempt to prevent construction of the line. Powerline protests drew national attention when over 200 state troopers, nearly half the Minnesota Highway Patrol, were deployed to ensure that construction of the line would continue. During a two-year period, a group of opponents to the line who called themselves "bolt weevils" tore down 14 powerline towers and shot out nearly 10,000 electrical insulators

More recently, activists in Maine have spent years opposing the construction of a transmission line that would bring in power from Quebec hydroelectric plants.

Congress tried to address this issue in the 2005 Energy Act, which gave FERC “backstop authority” to approve transmission line construction when states withheld approval, provided the line was built in “National Transmission Corridors” designated by the DOE. However, early attempts to use this power were challenged in federal court, and after those cases were lost the authority hasn’t been used since.

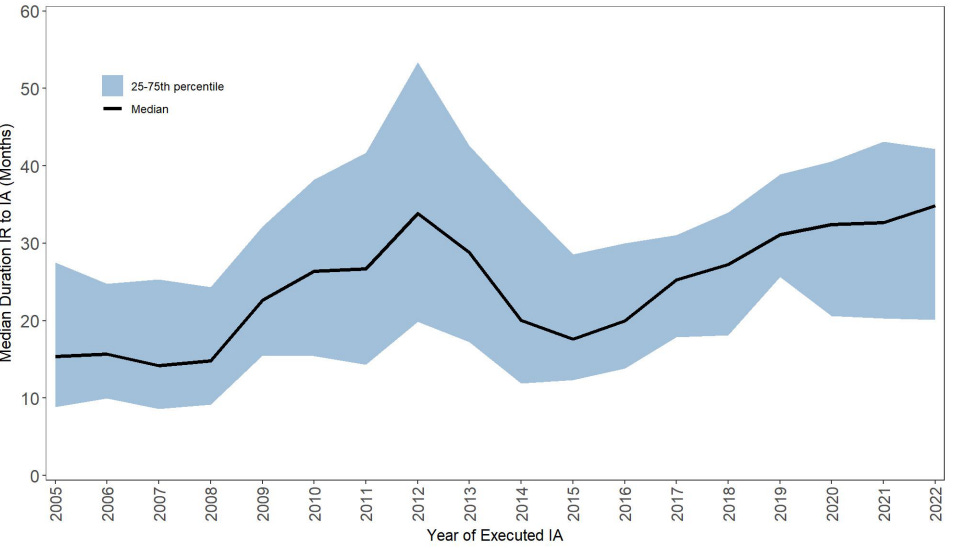

The difficulty of building transmission lines, in turn, holds back the construction of other electrical infrastructure. In the early stages of planning, a new electricity generation or storage project must undergo an interconnection study that determines the effect the project will have on the grid and what, if any, additional infrastructure will be needed to accommodate it (any new infrastructure will need to be paid for by the project developer). In part due to a lack of available transmission infrastructure, the time it takes to get interconnection approval is increasing – since 2005, it has doubled. There is currently more generation capacity waiting in the interconnection queue than all existing US power plants combined (though many of these projects will be withdrawn before they get built). When plants are authorized to interconnect, they face increasing costs for connection. Interconnection costs are often 50 to 100% of the cost of the plant itself due to the need to build additional grid infrastructure to accommodate them.

The lack of sufficient transmission infrastructure also shows up in the form of higher-than-necessary electricity costs. Customers near sources of wind or solar power often enjoy cheaper power than those farther away, because there’s insufficient transmission infrastructure to move the cheap power long distances. These “congestion costs” have increased from around $1 billion in 2002 (in 2023 dollars) to more than $13 billion in 2021.

But transmission lines aren’t the only thing that takes an extreme amount of time to build. Offshore wind farms can take 10 years to permit, due to the number of agencies and the number of potential NEPA reviews involved:

The permitting process for offshore wind is involved, and can trigger, among other things, numerous NEPA reviews. First, the BOEM identifies areas that might be suitable to designate for offshore wind development, a process which includes a NEPA review (though most so far have been Environmental Assessments with findings of no significant impact). This process is time-consuming — the BOEM is currently developing 7 areas around the US coast for leasing, the last of which (off the coast of Maine) won’t be ready for auction until the end of 2024.

Once the BOEM has found a candidate site, it auctions off the rights to develop offshore wind in that area. The lease winner creates a construction and operations plan (COP) for their proposed wind farm. This is submitted to the BOEM, which then reviews it and performs another NEPA review, typically an EIS. Once the COP is approved, construction can start. Altogether, it can take up to 10 years of planning before construction can start on an offshore wind farm.

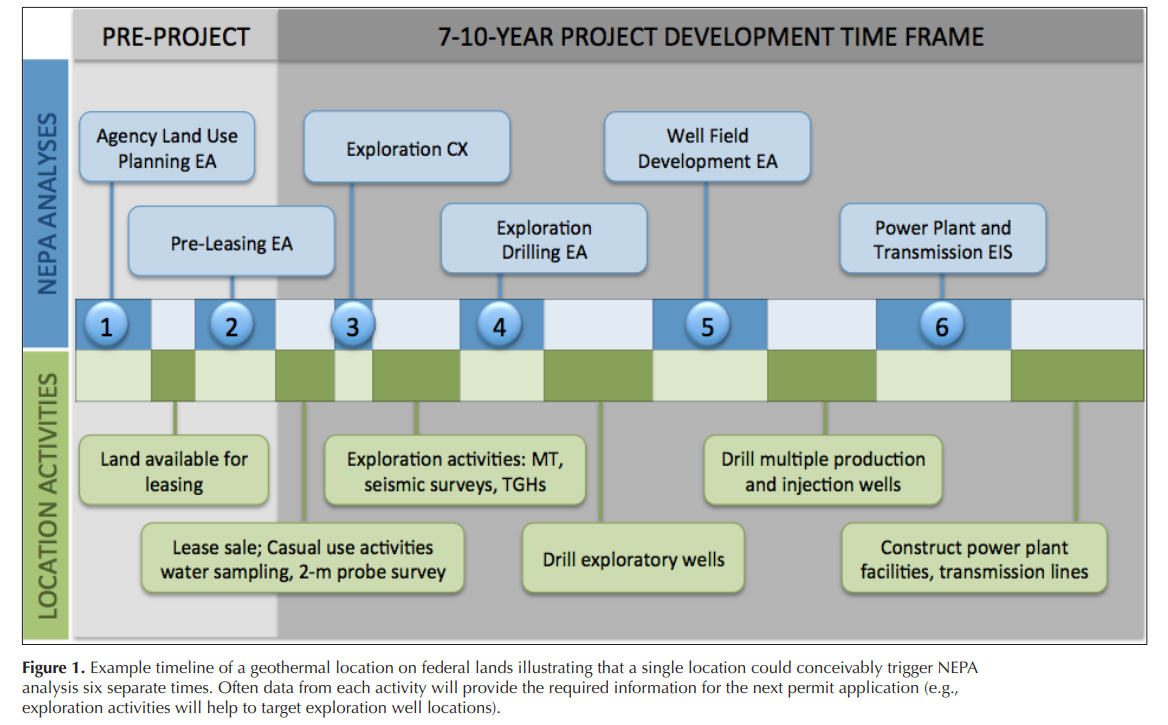

Geothermal projects likewise might take 10 years to develop, in part because they can require up to six(!) separate NEPA reviews.

Like everything else in the US, building electrical infrastructure is getting harder and harder.

The hard path and the soft path revisited

How can we address these challenges? How will we build an electric power system that can reliably provide the power that’s needed, where it’s needed?

In 1976, when US energy policy loomed as large as it does today, physicist and energy expert Amory Lovins articulated two strategies for the future of US energy in a soon-to-be-famous article in Foreign Affairs titled “Energy Strategy: The Road Not Taken?” In this piece, Lovins laid out two possible futures for US energy policy, what he called the hard path and the soft path.

The hard path was, in the words of Lovins, “an extrapolation of the recent past” – meeting energy needs by constructing more large-scale, centralized energy infrastructure (such as large nuclear reactors, coal plants, and high voltage transmission lines), and weaning off fossil fuels by engaging in large-scale electrification of industry and transportation. This path, in the view of Lovins, would only be possible by way of large-scale government intervention, and require enormous capital investment from utilities (or, as Lovins unenthusiastically describes it, would be a world of “subsidies, $100-billion bailouts, oligopolies, regulations, nationalization, eminent domain, corporate statism”).

The other, soft path, was to instead transition to smaller-scale energy technologies that were “flexible, resilient, sustainable, and benign” – rooftop solar panels, buildings designed for increased energy efficiency, industrial cogeneration, solar heating and cooling, and energy conservation. By emphasizing small-scale, local generation, the soft path would eliminate the need for large, centralized infrastructure, including much of the transmission and distribution system. And it would eliminate the power losses that were an inevitable result of moving electricity long distances, and the conversion losses from burning fuel to turn it into electricity, and then turning that electricity back into heat.

In many ways, Lovins was describing a very different world than the one we find ourselves in today. Lovins argued for the soft path in part out of environmental concern, but, contra Lovins, today large scale electrification of industry and transport is considered the pro-environment path. Similarly, Lovins argued against the construction of large-scale nuclear plants, and for the adoption of small-scale diesel generators, which at best would be a strange position for a modern environmentalist to adopt. And solar and wind power, rather than staying small-scale, have been adopted as the large, utility-scale infrastructure that Lovins opposed.

Nevertheless, we can still broadly map the energy strategies of today onto Lovins’ dichotomy.

The modern hard path, the “extrapolation of the recent past,” is to lean into the strategy of scale that the electric power industry pursued successfully for 70 years, and to expand the scale of the grid. Build enough transmission lines to tie the three separate grids together. Build large-scale solar installations in the southwest and the southeast, large scale wind farms in the Great Plains and offshore, and move that power to where it’s needed with large, cross-country transmission lines. For baseload power, bring back the construction of large nuclear plants.

A larger-scale grid that can move power long distances reduces the effect of solar and wind intermittency, as fluctuations will tend to average out over a large area. It also reduces the impact of extreme weather events. By making the grid “larger than the weather systems that impact it”, power can be supplied from elsewhere even if large weather events cause large-scale generation disruption. The wide scale outages in Texas during Winter Storm Uri could have been avoided if there had been enough transmission capacity to move power from areas outside the storm.
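The claim that fluctuations tend to average out over a large area can be illustrated with a toy simulation. The sketch below assumes each site's output varies independently around the same mean, which overstates the benefit, since real weather is correlated between nearby sites; the 30% per-site variability and the site counts are arbitrary illustrative values.

```python
import random
import statistics


def relative_spread(num_sites, hours=2000, site_sigma=0.3, seed=0):
    """Standard deviation of fleet-average output, each site at mean 1.0.

    Assumes site fluctuations are independent Gaussian noise, a
    deliberate simplification of real, spatially correlated weather.
    """
    rng = random.Random(seed)
    fleet_output = []
    for _ in range(hours):
        sites = [rng.gauss(1.0, site_sigma) for _ in range(num_sites)]
        fleet_output.append(sum(sites) / num_sites)
    return statistics.pstdev(fleet_output)


one_site = relative_spread(1)
many_sites = relative_spread(25)

print(f"Spread with 1 site:   {one_site:.3f}")
print(f"Spread with 25 sites: {many_sites:.3f}")
```

With fully independent sites the spread shrinks roughly with the square root of the number of sites, so 25 sites are about five times steadier than one. Correlated weather reduces that gain, which is part of why the hard path calls for a grid larger than the weather systems that affect it.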

The problems on the modern hard path are primarily bureaucratic in nature. It requires building things that we know how to build, but that as a society we’ve made difficult. Solving these problems requires greater state capacity at the federal level. It means building large transmission capacity to move power cheaply across the country. It means giving FERC the ability to site transmission lines the way that it can site natural gas lines. It means doing grid planning at a larger, regional level, rather than the current “piecemeal” planning where utilities plan for their own interests when building transmission but don’t consider the benefits to the grid as a whole. It means addressing NEPA issues that make it easy to slow down or stop projects. It means a Nuclear Regulatory Commission that doesn’t obstruct nuclear power, and federal support that makes it possible to recover from our withered nuclear supply chain.

The soft path strategies, by contrast, don’t require the construction of large-scale infrastructure. As per Lovins, these include energy conservation and more energy-efficient buildings, but also demand-side management technologies, which give utilities limited control over things like water heaters and refrigeration compressors that need to run at some point but are flexible as to when. With demand-side management, energy peaks can be smoothed out, which significantly reduces required generation capacity. Similarly, the use of energy storage (either grid scale or by using the batteries on things like electric vehicles or induction stoves) can dampen the fluctuations from variable sources of energy and demand, requiring less power to be transmitted long distances. Other soft path strategies are the increasing use of rooftop solar, “microgrids” (small grids which can be isolated from the main power grid and thus are less susceptible to cascading failures), and advanced geothermal (which can theoretically be built nearly anywhere so long as you drill deep enough, and could efficiently supply process heat as well as electrical power).
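A small sketch shows how demand-side management can smooth a peak. It treats loads like water heaters as a pool of flexible energy that must be delivered at some point in the day, and places that energy in whichever period currently has the lowest load. The six-period load profile, the 4 units of flexible energy, and the greedy "fill the valleys" rule are all hypothetical, not a description of any utility's actual program.

```python
def schedule_flexible(base_load, flexible_energy, step=0.1):
    """Greedy valley filling: add flexible energy to the lowest period."""
    load = list(base_load)
    remaining = flexible_energy
    while remaining > 1e-9:
        chunk = min(step, remaining)
        lowest = min(range(len(load)), key=load.__getitem__)
        load[lowest] += chunk
        remaining -= chunk
    return load


# Hypothetical inflexible load (GW) over six periods, peaking in period 4.
base = [3, 3, 4, 6, 8, 6]
flexible = 4.0

# Unmanaged case: all flexible load happens to run during the peak.
unmanaged = list(base)
unmanaged[4] += flexible

managed = schedule_flexible(base, flexible)

print(f"Peak without management: {max(unmanaged):.1f} GW")
print(f"Peak with management:    {max(managed):.1f} GW")
```

In this toy case the same energy is delivered either way, but the managed schedule keeps the peak at 8 GW instead of 12 GW, which is the capacity saving the paragraph above describes.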

And while current plans for large-scale renewable deployment often assume that wind and solar power will need to be transmitted from geographic locations where it’s most efficient, folks like Terraform Industries are predicting that solar will get so cheap that it will be more economical to simply over-build capacity in low-sunlight places rather than relying on the construction of long-distance, high capacity transmission lines.

The soft path strategies also have problems of bureaucracy (getting more efficient buildings means adopting more stringent building codes, getting more rooftop solar means removing permitting bottlenecks), but they’re also problems of technology. Things like microgrids, advanced geothermal, better energy storage, and solar cheap enough to be economical even in the cloudiest places all still need to be developed. More specifically, we need to find ways to make these things cheaper.

One major difference between Lovins’ hard and soft paths and those of today is that Lovins argued that these strategies were mostly mutually exclusive, but in today’s landscape they’re largely complementary. Demand-side management, leveraging electric-vehicle storage, and advanced geothermal can work hand in hand with the buildout of large transmission lines and mass deployment of solar in the southwest and wind in the Great Plains.

Rather than having to choose, we’re likely to get a bundle of both hard and soft solutions. Which ones we get will probably come down to what is cheap and easy to do, and what is hard and expensive to do. Solar is cheap and getting cheaper, and has so far been easy, but there are rumblings of it getting harder. Right now rooftop solar adoption is often encouraged by net metering, where residents get paid for providing excess power to the grid, but states may revise their net metering policies over time to make them less lucrative. Offshore wind construction has historically been difficult, but it’s perhaps trending in the right direction. Large-scale transmission construction has always been hard, and doesn’t seem to be getting easier. Many experts consider microgrids promising, but only a few hundred of them have been installed in the US, and it’s likely cheaper energy storage would be required before they were competitive with utility-scale power. In the early 2000s there was hope of a “nuclear renaissance” in the US, but after VC Summer and Vogtle that seems to have fizzled (though perhaps fusion power will pick up the torch here).

Regardless of what bundle of solutions we get, the power grid of tomorrow is going to look very different from the one we had yesterday.

Thanks to Austin Vernon for reading a draft of this.

Excellent and excellent. Coming from a physics education and 45 yrs as a P&L manager in business, here is the major problem.

Deregulation killed the infrastructure and especially transmission. - Because absolutely no for Profit Power company wants a 10 or 20 year return. America was able to build the long term, low ROI transmission lines when we used "regulated monopolies".

You can not separate "service" from "generation/transmission" as a business model. It's stupid. Because looking at Texas, a complete mess, they have let their CAPEX and long term investment under-invested.

Just on maintenance, say 10% of the $1.6 Trillion of Energy assets should be spent annually on upkeep, improvement and lower cost capital productivity projects. I'm pretty sure you will not find $150 billion spent annually on these projects today.

"Some experts, such as Gretchen Bakke and Meredith Angwin, put at least part of the blame for decreasing electricity reliability on the industry restructuring aka “deregulation.”" They are quite correct.

My company makes parts for gas turbines, and you have explained some things that I've been confused about for the past 30 years. This is a fantastic series of articles. Subscription upgraded!