A recurring theme of this newsletter is that most new ideas and innovations in the construction industry have been tried, often much earlier than you might expect. But even knowing this, I was surprised at how early the origins of automated building code checking (where software checks a building design for code compliance) are. I may have to make a Hofstadter’s Law-type addition to this, where most construction ideas have earlier origins than you expect, even when you expect that.

A corollary to this idea is that if you want to improve the construction industry, you should have at least some idea for why the idea you’re considering didn’t work previously. In that spirit, let's take a look at the history of automated building code checking systems, and see what we can learn from their attempts.

The basic idea of automated code checking

First, a bit of background. Newly constructed buildings must meet the requirements of the local building code, which is a series of rules governing virtually every aspect of the building - your concrete must be this strong, your handrails must be this high, your travel distance to a stairwell must be less than x feet, etc. Building code provisions are voluminous - the International Building Code, which nearly every jurisdiction in the US uses some flavor of, comes in at 700+ pages for the base code alone, and in many ways it’s just a wrapper for a series of more detailed design requirements spread out amongst hundreds of different documents and design standards. Together these requirements probably amount to 100,000+ pages depending on which level of reference you drop down to, though obviously any given building will only be subject to some of them.

Governments care a great deal whether a building meets code or not, so the process of acquiring a building permit almost always involves a plan reviewer (or several plan reviewers) in the permitting office reviewing the design of the building to ensure it meets code. This review process can be extremely time-consuming - in King County, Washington, for instance, the average time to receive a building permit for a custom home is currently 20-30 weeks. San Juan, also in Washington, has an average plan review time of 12-20 weeks. And these are for single-family homes, which are small buildings subject to the somewhat simpler International Residential Code. For commercial buildings it’s often worse.

Review times are inevitably extended by the fact that the plan checker will nearly always have comments the designer must address (“the slope here is insufficient to meet drainage requirements, there’s too much/too little glazing on this face” etc.), resulting in a lengthy back and forth between the design team and the jurisdiction before the permit is awarded. Partly this is due to the complexity of the code, which makes it easy for a designer to overlook a provision (especially provisions that vary a lot between jurisdictions). And partly it’s due to variations and inconsistency between plan reviewers and jurisdictions, where different ones will interpret the code differently. One study, for instance, found that the same building, under the same code provisions, had 0 violations of egress provisions in one jurisdiction, and 16 violations in another jurisdiction. Multiply this variation by the 20,000 permitting jurisdictions in the US, and you get a massive amount of coordination effort spent on just agreeing whether the design of a building meets code or not.

This process of coordination at best adds cost (as designers and jurisdictions, contractors and inspectors go back and forth over what’s required to meet code compliance), and at worst adds significant project risk (what if a jurisdiction won’t accept some system, or will only accept it with changes that aren’t feasible to make?) Determining code compliance thus adds a tax of time and effort on every building project, and that tax is especially high for new or innovative building systems. This is where mass timber currently stands in the US, for instance - provisions allowing tall mass timber buildings were added to the 2021 International Building Code, but most jurisdictions haven’t yet adopted them, and even in the jurisdictions that have, it’s often unclear what the jurisdiction, the fire marshal, etc. will and will not accept.

One potential way of cutting this knot is with automated code checking systems - software programs that can analyze a building design and automatically determine whether it meets code requirements or not. In its ideal form, people have sufficient trust in the software that they will accept this sort of check as proof of compliance (the same way that departments of vehicle registration accept a passing emissions test as proof that your car meets the regulatory requirements for vehicle emissions.)

Reaching this level of acceptance would massively simplify the permitting process in the US. Not only would jurisdictions need much less time and effort to review building permit applications, but architects and engineers could be confident their designs met code requirements before submitting for permit, avoiding the lengthy back and forth with the permitting office.

And with many jurisdictions accepting a software check, such software could become a layer of abstraction over the thousands of permitting jurisdictions in the US, unifying and simplifying permitting requirements. (And once that layer is in place, all the other things that are possible when you have a platform are on the table.)

It’s a compelling case. And because it’s compelling, people have been trying to develop automated code checking systems since computers first began to be used for building design.

A brief history of attempts at automated code checking

We can break automated code checking systems into roughly three problems that need to be solved:

1. Representing building code requirements in machine-interpretable form

2. Representing the building itself in machine-interpretable form

3. Getting stakeholders to accept that the output of the software corresponds to code compliance (beyond the political problems of getting stakeholders on board, this requires making the software’s output reliable, verifiable, and legible.)

(We also might include a 4th category here of performing the actual verification, though most folks would consider that less difficult once the other ones have been addressed.)

Since different attempts at automated code checking have tackled different parts of the problem, we'll take a look at each sub-problem separately (with the understanding that these are, in practice, fuzzy categories with a lot of overlap between them.)

Building code requirements as software

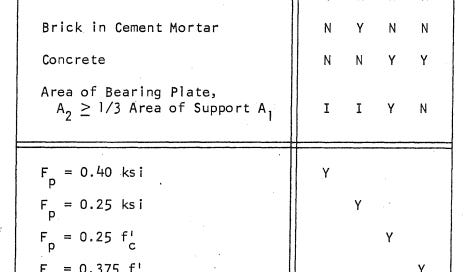

The earliest work on automated code checking was focused on creating software representations of building requirements - starting in the 1960s, Steve Fenves began to work on translating building design specifications into large decision tables, a then-novel way of representing a decision procedure that was both human-interpretable and easily programmed into software. As early as 1969, he was working with the American Institute of Steel Construction (AISC) to develop a decision table version of the steel specification:

The point of this work wasn’t merely to figure out how to program a computer to do steel code-checks - already by the 1960s engineers were writing programs that could perform engineering calculations and compare them against code requirements [0]. The goal was to turn this into a process that was demonstrably reliable - first, by creating a format of the specification (the decision table) where logical consistency could easily be evaluated, and then by implementing that specification in a way that the output could be trusted. The practice then (as it is now) was to “hard code” specifications into the software itself, which made its output difficult to evaluate. The code provisions might be deeply buried in the software as a convoluted series of if-else statements, and there was no real way of verifying whether they had been implemented correctly. Fenves envisioned a more robust procedure, where requirements would be implemented as a stand-alone data structure (making them much more legible and checkable) that would be processed by a kind of “requirements processing engine”.
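To make the distinction concrete, here is a minimal sketch of the idea in Python: the provision lives in a stand-alone decision table, a generic engine evaluates it, and a separate function checks the table itself for gaps and overlaps. This is my own illustration, not Fenves's actual format or the AISC tables. The guard provision, its 30 and 36 inch thresholds, and all the names (`DecisionTable`, `evaluate`, `find_gaps_and_overlaps`) are hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Design = Dict[str, float]


@dataclass
class Rule:
    """One column of the table: required condition outcomes and the result."""
    when: Dict[str, Optional[bool]]  # None means "don't care"
    result: str


@dataclass
class DecisionTable:
    name: str
    conditions: Dict[str, Callable[[Design], bool]]
    rules: List[Rule]


def matching_rules(table: DecisionTable, outcomes: Dict[str, bool]) -> List[Rule]:
    return [
        rule for rule in table.rules
        if all(want is None or outcomes[cond] == want
               for cond, want in rule.when.items())
    ]


def evaluate(table: DecisionTable, design: Design) -> str:
    """Generic engine: it knows nothing about any particular provision."""
    outcomes = {name: test(design) for name, test in table.conditions.items()}
    matches = matching_rules(table, outcomes)
    if len(matches) != 1:
        raise ValueError(f"{table.name}: {len(matches)} rules match {outcomes}")
    return matches[0].result


def find_gaps_and_overlaps(table: DecisionTable) -> List[Tuple[Dict[str, bool], int]]:
    """List every combination of condition outcomes not covered by exactly one rule."""
    names = list(table.conditions)
    problems = []
    for combo in product([True, False], repeat=len(names)):
        outcomes = dict(zip(names, combo))
        count = len(matching_rules(table, outcomes))
        if count != 1:
            problems.append((outcomes, count))
    return problems


# Hypothetical provision with illustrative thresholds, not quoted from any code.
GUARD_TABLE = DecisionTable(
    name="hypothetical guard provision",
    conditions={
        "drop_over_30in": lambda d: d["drop_in"] > 30,
        "guard_at_least_36in": lambda d: d["guard_height_in"] >= 36,
    },
    rules=[
        Rule({"drop_over_30in": False, "guard_at_least_36in": None}, "not applicable"),
        Rule({"drop_over_30in": True, "guard_at_least_36in": True}, "satisfied"),
        Rule({"drop_over_30in": True, "guard_at_least_36in": False}, "violated"),
    ],
)

if __name__ == "__main__":
    print(find_gaps_and_overlaps(GUARD_TABLE))
    print(evaluate(GUARD_TABLE, {"drop_in": 40, "guard_height_in": 34}))
```

The design choice worth noticing is that `find_gaps_and_overlaps` inspects the table without running any design through it, which is roughly the kind of consistency check that a buried chain of if-else statements doesn't allow.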

Fenves and others did a great deal of work in this area well through the 1980s. He helped develop the SASE [1] system with the National Bureau of Standards (now NIST), which was a methodology for standards creation that would ensure the resulting standards were machine-interpretable. He continued to work with AISC, developing an electronic version of the LRFD specification. And he helped develop the SPEX engine, an attempt at the sort of “standards processing engine” that could operate independently of any particular standards implementation [2].

There were other efforts at this sort of standards processing system, such as the SICAD system, another standards processing engine that was apparently used in some form by AASHTO. However, outside of a few modest successes, most work in this area remained academic.

The development of Building Information Modeling (BIM) in the 1990s opened up a new front on this problem. BIM allowed a building to be modeled as essentially a large database, and as it grew more popular people began to develop what we might call “BIM checking engines” - software that could take a list of requirements (either built into the software or programmed in afterwards) and check whether a BIM model met them or not. Solibri, developed in 1996 in Finland, became the most widely used software in this space (and is still used today), but there were other systems such as FORNAX and Express Data Manager as well. Theoretically, it now became possible to automatically check a building’s design for code compliance by feeding an appropriate list of rules to a checking engine, and many future attempts at automated code-checking systems would use this approach.
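As a rough sketch of what “feeding a list of rules to a checking engine” means, here is a toy version in Python. It is not how Solibri, FORNAX, or any real engine works internally. The `Element` and `Rule` classes, the property names, and the numeric limits are all assumptions made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Element:
    """A building element as a database-style record (hypothetical schema)."""
    id: str
    kind: str  # e.g. "door", "stair"
    props: Dict[str, float] = field(default_factory=dict)


@dataclass
class Rule:
    id: str
    applies_to: str
    description: str
    test: Callable[[Element], bool]


def check_model(elements: List[Element], rules: List[Rule]) -> List[str]:
    """Apply every rule to every element of the matching kind."""
    findings = []
    for rule in rules:
        for el in elements:
            if el.kind != rule.applies_to:
                continue
            try:
                ok = rule.test(el)
            except KeyError as missing:
                # The model lacks the data the rule needs.
                findings.append(
                    f"{el.id}: cannot check {rule.id}, missing property {missing.args[0]}"
                )
                continue
            if not ok:
                findings.append(f"{el.id}: fails {rule.id} ({rule.description})")
    return findings


# Illustrative limits only, not taken from any actual code.
RULES = [
    Rule("R-DOOR", "door", "clear width >= 32 in",
         lambda el: el.props["clear_width_in"] >= 32),
    Rule("R-STAIR", "stair", "riser height <= 7.75 in",
         lambda el: el.props["riser_in"] <= 7.75),
]

MODEL = [
    Element("D1", "door", {"clear_width_in": 34}),
    Element("D2", "door", {"clear_width_in": 30}),
    Element("S1", "stair", {}),  # riser height was never modeled
]

if __name__ == "__main__":
    for line in check_model(MODEL, RULES):
        print(line)
```

Even in a toy like this, the engine can only check what the model actually records: the stair with no riser height can't be passed or failed.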

The most ambitious of these types of efforts is likely the Singapore Government’s Corenet project, which (among other things) aims to include an automated code checking system. Designers would upload their building designs electronically, which would be automatically checked for compliance by software. The first iteration of this started development in the 1990s (and was apparently planned as far back as 1982), and actually predates BIM - it was initially planned to use 2D object-CAD. This later was replaced with a BIM system built around the FORNAX checking engine.

This effort didn’t succeed (like many failures, it died silently, so the reasons are unclear), but has recently been resurrected with the Corenet-X project. This has similar goals, but instead is based on using the Solibri Model Checker.

There have also been similar efforts in the US, in the form of the SMARTcodes and the AutoCodes projects.

SMARTcodes was an ambitious attempt by ICC in the early 2000s to develop this sort of automated code checking system. The plan was for ICC to develop software interpretable versions of its codes that could then be evaluated by BIM model checking software.

Since ICC is the author of essentially all the building codes used in the US, getting jurisdictions to adopt this system would have been greatly simplified. But while some progress was made (they got as far as creating software to help translate code provisions into model-checker rules), the effort lost funding post-financial crisis, and the project died.

AutoCodes was a similar project. It was started in 2012 by Fiatech, an industry consortium of “capital project stakeholders” and was part of their “guidelines for replicable buildings” project. The project had a huge number of partners on board, including ICC, and did some preliminary work to verify that lack of consistency in permit requirements interpretation was in fact a problem (they performed the egress provisions study mentioned earlier), but otherwise made little progress.

In spite of the development of checking engines, there’s little that can be pointed at in the way of progress to the general problem of “representing building codes in machine-interpretable form.” The situation is roughly similar to the one Fenves complained about in the 1960s - engineering specifications and other objective, quantitative criteria can be implemented in software, but this generally takes the form of design software that requires careful shepherding and monitoring by an expert, rather than a system that can automatically check compliance. And implementation is generally of the hard-coded variety where the software becomes something of a black box, where it’s often non-obvious how it arrived at the answers it gives.

The area continues to absorb research time and effort (here, for instance, is a recent research attempt to implement the New Zealand Building Code in machine-interpretable form), but there are no “in the wild” implementations that I’m aware of.

Part of the difficulty stems from the building code provisions themselves - much of this work has been focused on translating code requirements into clear, unambiguous rules, and for many code provisions that’s not straightforward. Fenves gives an example of this from the 1993 BOCA code:

Getting a piece of software to check this provision requires defining “what special knowledge and effort” is, in sufficient detail that it can encompass every possible future implementation (Does a new type of door handle need special knowledge and effort? What about a new type of latching mechanism? How would you translate that into a software-evaluable statement, that doesn’t require building an entire model of how the world works?)

It’s not hard to find similar provisions throughout the building code - consider this provision from the 2018 International Residential Code:

Automatically checking a building for code compliance means figuring out how to explain to a piece of software what “clearly” means. In general, creating machine-interpretable building codes means not only translating the rules themselves, but translating all the tacit assumptions about how the world works that the rules reflect. As Fenves states, “Most of the provisions of a design standard refer to knowledge that all humans are expected to know. Unfortunately this type of knowledge is not formally expressed anywhere. Everybody just knows it.”

Of course, it's those tacit assumptions that make code interpretation ambiguous in the first place. Essentially, the very thing we’re trying to fix - building codes that are ambiguous and subject to interpretation - is also what makes the problem hard to solve with software.

Despite the difficulties involved, I actually expect that we’re on the cusp of making great headway on this problem. Most previous efforts have been based on manually translating provisions into a series of objective rules, but I suspect before too long AI systems will be able to evaluate existing building codes directly. These sorts of attempts date back to the early 1990s, and have previously been unsuccessful. But language-based AI models are rapidly improving in capability, and tools like OpenAI’s Codex give a glimpse as to what might soon be possible for software interpretation of building codes.

Creating an appropriate representation of the building

Once you have your building code implemented as machine interpretable statements, the next step is to do the same for the design of the building itself.

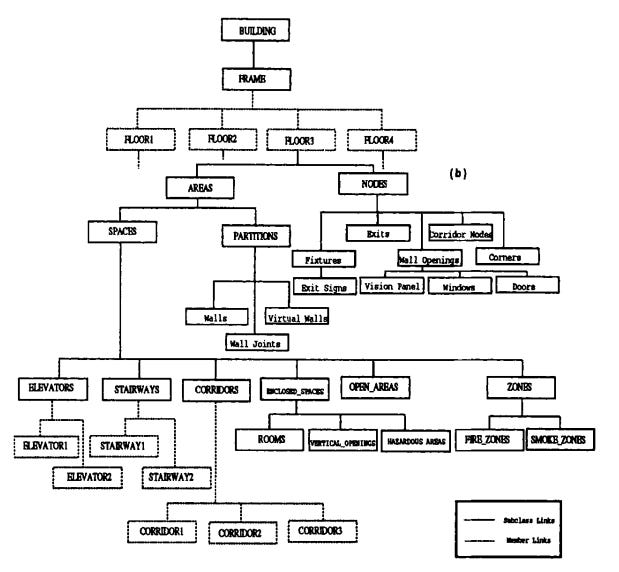

Most early research on automated code checking was in the era of hand drawing or 2D CAD, both of which represented the building as a collection of linework - not something that software could infer any meaning from. So early attempts at automated code checking systems also involved creating a data structure for representing a building that the system could understand.

With the development of BIM in the 1990s, most automated checking efforts became BIM-based - figuring out how to create a BIM model that could be evaluated for code compliance. These efforts typically (though not always) have relied on Solibri or other checking engines for the actual code evaluation process.

There are several challenges with creating a building representation that can be mechanically evaluated for code compliance. For one, there’s no standard way of creating BIM models - different designers will construct their BIM models in different ways. This means that progress will require either software that can evaluate an arbitrarily structured data model, or enforcing a standard way of creating models.

Most implementation attempts of automated checking systems have taken the latter approach. SMARTcodes, for instance, was planned to be paired with the development and roll-out of a National BIM standard. Singapore’s current ambitious attempt at an automated code checking process, Corenet-X, is apparently being paired with an extremely restrictive and burdensome BIM modeling standard.

The only attempt I’m aware of at a system that didn’t require a standardized BIM model was UpCodes AI, a software tool that could evaluate a Revit model for code compliance regardless of how the model was structured. But this tool is no longer being developed.

Beyond variance in modeling style, another problem is that creating a building model that can be used for code checking requires adding an enormous amount of information to it. As I said in BIM, Revit, and the database dream:

...a truly complete database of building information requires an enormous amount of effort to create. Even a medium-sized building might have hundreds of thousands of parts, hundreds of different specifications and standards, and an endless number of parameters to specify. Specifying all that up front would be an enormous amount of work, and also require a massive amount of ongoing maintenance to ensure that it stayed up to date. Most design professionals learn quickly to purge their drawings of unnecessary details and elements in the name of expediency and maintainability.

These issues have often dogged attempts at BIM-based automated checking systems. For instance, a project in 2009 to create a BIM standard for GSA courthouses that could be used for automated checking became bogged down by the hundreds of hours of work required to manually label different spaces before the software could analyze the models. (We see a parallel here between this effort and the massive manual effort often required to label input data for AI models.) Some current automated checking efforts, such as the SEEBIM research project, are thus focused on software that can computationally generate this information.

This research has continued at a somewhat steady pace (here’s a recent example), but once again actual in-the-field implementations are coming up short. But this is another area where I expect AI models will likely make a great deal of progress in the next 5-10 years.

Output acceptance

Efforts at creating automated code checking systems have typically been focused on problems 1 and 2 - creating software representations of the code and the building, respectively. But the value of an automated checking system mostly comes from solving problem 3 - getting stakeholders (building jurisdictions, designers, and builders) to treat the software output as proof of compliance. Without this, at best you’ve automated certain repetitive tasks without changing the fundamental process.

Most current implementations of automatic code checking (in the form of building design software) don’t even attempt to solve this problem. For instance, current engineering software packages (such as RAM) can often automatically check nearly the entire structure of the building against code requirements. But while this undoubtedly saves engineering time, a RAM printout saying “all checks passed” doesn’t change anything about the permitting process - the construction documents will still be submitted to the city, where the plan reviewer will examine them and make comments (if anything, the massive volume of calculation output that tools like RAM can generate, and the numerous degrees of freedom they give the engineer, probably lengthens the review time required.)

This problem is as much a political one as it is a technical one. Your software needs to produce legible, verifiable output, but you also need to convince risk-averse jurisdictions and builders that your software does what you say it does. One obvious way of solving this problem is from the top down - have the automated code checking project be government-led (or at least government-supported). And this is, in fact, how a lot of automated code checking projects have proceeded - SMARTcodes, AutoCodes, and Corenet all took this approach.

There have been a handful of other government-led attempts at automated code checking systems around the world - a 2020 summit included several prototype efforts with various European governments such as Germany, Norway, and Estonia. Of these, Estonia’s appears to be the most advanced (consistent with their goals of being a “digital society”), but, once again, it does not appear that any of these efforts are live yet.

What does success look like?

So far this has been a long list of projects that haven’t panned out. But there have been successes in this area. ResCheck, ComCheck, and SolarAPP are all examples of “in the wild” systems that successfully implement at least some of the goals of an automated code checking system.

ResCheck and ComCheck are software developed by the DOE in the 1990s for verifying energy code compliance for residential and commercial buildings, respectively. Both pieces of software work in a similar fashion - the designer inputs the relevant energy parameters into the software (via dropdown menus and text boxes), which then performs a heat flow calculation and compares the result to code requirements. What separates ResCheck and ComCheck from other pieces of design software is that their output is, in fact, treated as compliance. Many jurisdictions require a ResCheck or ComCheck compliance report as part of a permit submittal package, and some have gone so far as to write ResCheck and ComCheck compliance into the local building code itself.
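For a sense of how simple this kind of check can be, here is a sketch of one common form it takes, a total-UA trade-off: multiply each envelope assembly's area by its U-factor, sum the results, and compare against the same sum computed with code-maximum U-factors. I'm assuming this form for illustration rather than reproducing ResCheck's actual calculation, and the house, the U-factors, and the function names below are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Assembly:
    name: str
    area_ft2: float
    proposed_u: float   # U-factor of the design as entered by the designer
    code_max_u: float   # placeholder "code" U-factor, not from any real code


def total_ua(assemblies: List[Assembly], which: str) -> float:
    """Sum of area x U-factor, using either 'proposed_u' or 'code_max_u'."""
    return sum(a.area_ft2 * getattr(a, which) for a in assemblies)


def compliance_margin(assemblies: List[Assembly]) -> float:
    """Percent by which the proposed UA beats (+) or misses (-) the code UA."""
    code = total_ua(assemblies, "code_max_u")
    proposed = total_ua(assemblies, "proposed_u")
    return 100.0 * (code - proposed) / code


# Hypothetical house: better-than-code ceiling offsets worse-than-code windows.
HOUSE = [
    Assembly("walls", 1500, proposed_u=0.060, code_max_u=0.060),
    Assembly("ceiling", 1200, proposed_u=0.026, code_max_u=0.030),
    Assembly("windows", 200, proposed_u=0.32, code_max_u=0.30),
]

if __name__ == "__main__":
    margin = compliance_margin(HOUSE)
    verdict = "passes" if margin >= 0 else "fails"
    print(f"{margin:+.2f}% relative to code: {verdict}")
```

With these made-up numbers the design passes by a bit under half a percent. The whole check is a handful of multiplications over designer-entered values, which is part of why its output is easy to read and easy to accept.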

SolarAPP is another tool in the same vein. It’s a web-based code compliance tool developed by the National Renewable Energy Lab for residential solar installations. SolarAPP works in a similar way to ResCheck and ComCheck - the designer enters the design parameters into the software via a series of text boxes and drop down menus, the software checks whether it meets code requirements for residential solar installations, and then spits out a compliance report.

But SolarAPP goes a step further. For jurisdictions that have adopted it, SolarAPP can automatically issue a building permit - a human plan reviewer doesn’t need to sign off on the plans at all.

SolarAPP is relatively new, only becoming generally available last year. As of September 2021, 125 jurisdictions had signed up to use it. A study by NREL of 5 test jurisdictions showed that average permit times dropped to less than a day, and projects were installed and inspected an average of 12 days faster. As of December 2021, the 5 test jurisdictions had collectively saved more than 2000 hours of staff time reviewing permits.

What separates these successes from the many efforts that failed to pan out?

For one, ResCheck, ComCheck, and SolarAPP are very narrowly focused - instead of trying to check an entire building against the entire code, each one only checks compliance against a very narrow list of code requirements. This allows development to proceed much faster, and makes it much more feasible to verify that the output is correct - SolarAPP, for instance, prints every single check that it does, and the list is short enough that examining it is actually practical.
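Here is a minimal sketch of what “print every single check” might look like for a narrow tool. The three checks, their limits, and the input field names are placeholders I made up, not SolarAPP's actual rules. The point is only that a short, fixed list of checks yields a report a human can read in full.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class CheckResult:
    name: str
    requirement: str
    actual: object
    passed: bool


# (display name, input field, requirement text, test) - all hypothetical.
CHECKS: List[Tuple[str, str, str, Callable[[object], bool]]] = [
    ("System size", "system_kw", "<= 15 kW", lambda v: v <= 15),
    ("Added roof load", "roof_load_psf", "<= 4 psf", lambda v: v <= 4),
    ("Inverter listed", "inverter_listed", "must be True", lambda v: v is True),
]


def run_checks(inputs: Dict[str, object]) -> List[CheckResult]:
    """Run every check and keep the result, pass or fail."""
    return [
        CheckResult(name, requirement, inputs[key], test(inputs[key]))
        for name, key, requirement, test in CHECKS
    ]


def render_report(results: List[CheckResult]) -> str:
    """One line per check, followed by an overall verdict."""
    lines = [
        f"[{'PASS' if r.passed else 'FAIL'}] {r.name}: {r.actual} "
        f"(required {r.requirement})"
        for r in results
    ]
    verdict = "APPROVED" if all(r.passed for r in results) else "NEEDS REVIEW"
    lines.append(f"Result: {verdict}")
    return "\n".join(lines)


if __name__ == "__main__":
    design = {"system_kw": 8.2, "roof_load_psf": 3.1, "inverter_listed": True}
    print(render_report(run_checks(design)))
```

Unlike a tool that only reports failures, this records the passing checks too, so a reviewer can see exactly what was and wasn't examined.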

For another, these projects studiously avoided trying to solve any complex, technical problems - all are (relatively) simple applications that work via text boxes and drop down menus. There’s no effort to solve the generic problem of translating code requirements into software-evaluable rules, no effort to automatically analyze an existing BIM model, no effort to do anything clever with “AI” in the name.

In other words, they ignore problems 1 and 2 as much as possible, instead focusing on problem 3. With SolarAPP, for instance, getting the acceptance of stakeholders was a key part of the process - development proceeded in an iterative fashion with jurisdictions and installers, asking them questions and getting feedback on the app and the output until they converged on something that everyone would accept.

I suspect this acceptance is likely to be a key hindering factor in the future development of automated code checking systems. It seems likely that in the next 5-10 years (or sooner!) AI models will make significant progress on being able to correctly interpret building code provisions and building designs, and automatically determining if a building meets code compliance or not. But right now AI models are often black boxes, where it’s unclear how they arrived at a particular output, which will make it challenging to implement them as a truly automated checking system.

Conclusion

So, to sum up:

Automated code checking, were it widely implemented, could potentially massively reduce the time and effort required to get a building permit.

Automated code checking roughly requires solving three problems: turning the building code into software-interpretable form, turning the building design into software-interpretable form, and getting stakeholders to agree that the software output is indicative of code compliance.

We currently have software that can automate portions of code requirement checking in narrow domains, but it requires constant watching by expert practitioners. Much of this is engineering design software, but there’s also the occasional effort by other disciplines, such as SmartReview, which can check certain architectural code requirements.

There have been a great number of research efforts and government-supported projects at creating broad, generic automated code checking systems, which have largely failed to pan out.

There have been a small number of successes, which have mostly worked by focusing narrowly on specific portions of the code, and on solving problem 3 instead of problems 1 and 2.

It’s likely over the next 5-10 years AI models will be successfully applied to solving problems 1 and 2, but this won’t solve problem 3 (and it’s possible the way AI models work will in fact make solving problem 3 even harder.)

Most of the value in an automated code checking system comes from solving problem 3.

(Thanks to Scott Reynolds, Jeffrey McGrew, Anthony Hauck, and other members of the Construction Physics slack for helpful conversations about this topic.)

Construction Physics is produced in partnership with the Institute for Progress, a Washington, DC-based think tank. You can learn more about their work by visiting their website.

You can also contact me on Twitter, LinkedIn, or by email: briancpotter@gmail.com

I’m also available for consulting work.

[0] - From Fenves 1969:

However, large portions of the detailed design or proportioning operations are now being performed by digital computer programs, and there is every indication that such computer use will continue to increase significantly. This change from manual to computer processing places the Specification in an entirely different environment. The present method of incorporating Specification provisions into computer programs presents some serious problems. The task of programming is often assigned to a junior engineer or a small group of engineers, who may have to interpret the missing or ambiguous portions of the Specification. The program based on such interpretations may process many more designs than an experienced engineer can accomplish in a lifetime. Furthermore, the interpretations and assumptions made in the program may be so deeply buried in flowcharts and program statements as to be almost impossible to ferret out.

[1] - Description of the SASE methodology:

The Standards Analysis, Synthesis, and Expression (SASE) methodology provides an objective and rigorous representation of the content and organization of a standard. Both the methodology and a computer program that implements it are described in this document in terms of two underlying conceptual models. The conceptual model for the information content of a standard is essentially independent of any particular organization and expression of the information. The fundamental unit of information in the model is a provision stipulating that a product or process have some quality. The highest level provisions in a standard are requirements that directly indicate compliance with some portion of the standard and are either satisfied or violated. Techniques are provided in SASE to ensure that individual provisions are unique, complete, and correct, and that the relations between provisions are connected, acyclic, and consistent. Entities in SASE that represent the information content of a standard are data items, decision tables, decision trees, functions, and information networks. The conceptual model for the organization of a standard is based on a logical classification system in which each requirement is classed in terms of its subject (product or process) and predicate (required quality). Techniques are provided in SASE for building and manipulating hierarchical trees of classifiers and testing the resulting organization for completeness and clarity. Entities in SASE that deal with the organization of a standard are classifiers, hierarchy, scopelist, index, organization, and outline. The SASE methodology is demonstrated in an analysis of a complete standard for concrete quality. An annotated bibliography of the most significant relevant research reports is presented.

[2] - From Fenves 1995:

The SPEX (Standards Processing Expert) System used a standard-independent approach for sizing and proportioning structural member cross-sections. The system reasoned with the model of a design standard, represented using the four-level SASE representation, to generate a set of constraints on a set of basic data items that represent the attributes of a design to be determined. The only change to the SASE model was that the conditions in a provision were separated into applicability and performance conditions. The constraints were then given to a numeric optimization system to solve for an optimal set of basic data item values. SPEX implemented a standard independent design process in that both the process of generating the set of constraints from the standard and the process for finding the optimal solution of these constraints were generic and thus not specific to the design standard being used.

It’s true that ResCheck covers an area of compliance that’s vastly simpler than the overall IBC and IRC. But I suspect that the main reason for its widespread use is that, unlike most code tools, it covers an area unrelated to life safety.

I’ve probably done a couple of dozen ResCheck reports, and I have yet to be questioned on a single item in any one of them, or hear of anyone else who’s been questioned. The reality is that ResCheck basically works on the honor system. You input square footage numbers for a long list of envelope surfaces, and you often find that skewing the numbers a little one way or the other could bump a certain design from being 1% worse than code to 1% better. (I’m not suggesting that people do this - just stating how it works.)

My observation has been that building inspectors are mostly concerned with critical life safety issues. To confirm that a ResCheck report is accurate, they would have to spend hours measuring all of the envelope surfaces themselves and calculating their areas - essentially, redoing the report themselves. In practice, none of them do this. They aren’t going to lose any sleep over a new house whose energy performance is 2% worse than the 2018 IECC minimum. And if someone tried to do something more extreme, like omit insulation altogether, the inspector would catch it in a site inspection.

I’m not familiar with SolarAPP, but I assume that the structural and electrical items it covers are simple enough that an inspector can confirm they’ve been done correctly in a quick site walkthrough. The challenge is dealing with things that affect life safety and that are too complicated to quickly confirm visually - i.e., much of the material in the IBC and IRC.

I concur completely with Jim's comment on ResCheck and ComCheck. Narrowly defined, 2-dimensional software programs that deal with one aspect of building performance are also open to "gaming the system" with bad inputs. The human element in the building inspection process for energy conservation requires inspectors who are trained and motivated to be picky about insulation installation, air-sealing, etc.

On a related note, the Architectural Registration Exam graphic software is fully automated with respect to evaluating a candidate's "design" for code compliant building elements like egress, stair proportions, furniture placement. I believe the code for this dates to the 1980s. It's crude, but I'd argue that it's sufficient for drilling my profession into thinking about objective results; i.e. making sure a building has minimum life-safety parameters.

Scaling up ARE-like software to a BIM model would require standardization of software inputs and outputs. I suppose machine learning could be applied to the evaluation of floor plans in .pdf format. Where's the incentive in that?