Reading List for 06/14/25

Figure’s new humanoid robot demo, what it would take to create the data for a robot foundation model, “right to repair” on naval vessels, electricity costs and power laws, and more.

Welcome to the reading list, a weekly roundup of news and links related to buildings, infrastructure and industrial technology. This week we look at Figure’s new humanoid robot demo, what it would take to create the data for a robot foundation model, “right to repair” on naval vessels, electricity costs and power laws, and more. Roughly two-thirds of the reading list is paywalled, so for full access, become a paid subscriber.

No essay this week, but I’m working on a more involved one about housing costs in the western US that will be out next week.

Supersonic flight executive order

Supersonic flight over land has been banned in the US since 1973 due to widespread opposition to sonic booms. However, the ban was implemented in a needlessly burdensome way: rather than setting noise limits for supersonic aircraft, it prohibited non-military aircraft from flying faster than the speed of sound over land at all, even if they could do so without generating a sonic boom. The new executive order requires the FAA to repeal this regulation and replace it with a noise-based standard:

The Administrator of the Federal Aviation Administration (FAA) shall take the necessary steps, including through rulemaking, to repeal the prohibition on overland supersonic flight in 14 CFR 91.817 within 180 days of the date of this order and establish an interim noise-based certification standard, making any modifications to 14 CFR 91.818 as necessary, as consistent with applicable law.

I’m aware of various efforts toward quieter supersonic travel, including NASA’s X-59 and Boom Supersonic’s “boomless cruise”, but I’m not an aerospace expert and I’m not sure how practical they’ll end up being. Regardless, replacing a burdensome prescriptive standard (“to minimize noise, you shall not travel faster than this”) with a performance-based one (“you shall make less noise than this”) seems very positive to me.

Figure AI’s 1-hour humanoid demo

Humanoid robot developer Figure AI released an hour-long video of one of their robots autonomously (no teleoperation) scanning packages and placing them on a conveyor belt:

This was apparently made possible by improvements to Helix, the “Vision-Language-Action model” that powers Figure’s robots. From Figure:

Helix’s logistics policy has broadened to handle a much wider diversity of packages. In addition to standard rigid boxes, the system now manages polyethylene bags (poly bags), padded envelopes, and other deformable or thin parcels that pose unique challenges. These items can fold, crumple, or flex, making it harder to grasp and locate labels. Helix addresses this by adjusting its grasp strategy on the fly – for example, flicking away a soft bag to flip it dynamically, or using pinch grips for flat mailers. Despite the greater variety in shape and texture, Helix increased its throughput, processing items in about 4.05 seconds each on average without bottlenecks…

Helix’s policy now maintains a short-term visual memory of its environment, rather than acting only on instantaneous camera frames. Concretely, the model is equipped with a module that composes features from a sequence of recent video frames, giving it a temporally extended view of the scene…

We also augmented Helix’s proprioceptive input with a history of recent states, which has enabled faster, more reactive control. Originally, the policy operated in fixed-duration action chunks: it would observe the current state and output a short trajectory of motions, then observe anew, and so on. By incorporating a window of past robot states (hand, torso and head positions) into the policy’s input, the system maintains continuity between these action chunks…

To grant Helix a basic sense of touch, we integrated force feedback into the policy’s input observations. The forces that Figure 02 applies on its environment and the objects it manipulates are now part of the state fed into the neural network…
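This is not Figure’s code; it is only a toy Python sketch of the general pattern the excerpt describes: a policy that conditions on a sliding window of recent camera features and joint states plus the latest force reading, and emits a fixed-length chunk of actions before observing again. Every name here (`Observation`, `HistoryBuffer`, `toy_policy`, `run`), the window size `k`, and the chunk length are hypothetical, and `toy_policy` is a placeholder standing in for the neural network.

```python
from collections import deque
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    frame: List[float]        # hypothetical stand-in for features of one camera frame
    joint_state: List[float]  # hypothetical stand-in for hand/torso/head positions
    force: List[float]        # hypothetical stand-in for measured contact forces


class HistoryBuffer:
    """Sliding window holding the k most recent observations."""

    def __init__(self, k: int) -> None:
        self.items: deque = deque(maxlen=k)  # oldest entries drop off automatically

    def push(self, obs: Observation) -> None:
        self.items.append(obs)

    def policy_input(self) -> List[float]:
        # Concatenate recent frames and joint states (short-term visual memory
        # and proprioceptive history) plus the latest force reading ("touch").
        features: List[float] = []
        for obs in self.items:
            features.extend(obs.frame)
            features.extend(obs.joint_state)
        features.extend(self.items[-1].force)
        return features


def toy_policy(features: List[float], chunk_len: int) -> List[float]:
    # Placeholder for the learned model: returns chunk_len scalar "actions"
    # derived from the feature mean. A real policy would output joint targets.
    mean = sum(features) / len(features)
    return [mean * (i + 1) / chunk_len for i in range(chunk_len)]


def run(observations: List[Observation], k: int = 3, chunk_len: int = 4) -> List[float]:
    buffer = HistoryBuffer(k)
    executed: List[float] = []
    for obs in observations:
        buffer.push(obs)                                      # observe
        chunk = toy_policy(buffer.policy_input(), chunk_len)  # plan a short trajectory
        executed.extend(chunk)                                # execute it, then observe anew
    return executed
```

Using a `deque` with `maxlen` means the oldest observation is dropped automatically once the window is full, so the policy’s input stays a fixed size no matter how long the robot runs.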

I’m not a robotics or AI expert, but this is probably the most impressive humanoid demonstration I’ve seen from a dexterity standpoint. It handles objects of varying rigidity (boxes, soft packages, thin envelopes) quickly and smoothly over a very long period. (At one point it successfully picks up what looks like a thin piece of cardstock with the aid of a ramp.) The tasks aren’t especially demanding in terms of dexterity, but it’s still much more than I’ve seen other humanoid robots accomplish.

In other humanoid news, 1X Robotics released a new demo showing their Neo robot doing things like walking outside and climbing stairs.

Robot foundation model training

AI products like OpenAI’s ChatGPT and Anthropic’s Claude are powered by foundation models: large neural networks trained on enormous amounts of data. These foundation models can then serve as a base for more specialized AI models trained to perform specific tasks (hence the name “foundation model”).

Efforts are underway to create similar foundation models for robotics, but a common bottleneck is a lack of available data. The models behind things like ChatGPT had the benefit of having the entire internet as their training data. GPT-3, for instance, was trained on 300 billion tokens (where a token is a chunk of text roughly equivalent to three-quarters of a word), and the training set for GPT-4 is estimated to be in the trillions of tokens. No comparably accessible dataset currently exists for training robot foundation models.

On Substack, Chris Paxton estimates how much effort it would take to create a dataset large enough to train a robot foundation model.

Assume we have a robot that can collect 1 (valuable) token per frame at 10 frames per second. We make this assumption because, largely, robot tokens are not as valuable as LLM tokens - the information Qwen or Llama is trained on is very semantically rich, whereas robot frames often have a lot of redundant information. To put it another way: machine learning works best the closer you are to an independent and identically distributed (iid) dataset; neither web data nor robot data is iid, but robot data is far less iid than web data.

Okay, now let’s assume the robot collects its data all day, every day, forever. A year has 365.25 days × 24 hours × 3600 seconds = 31,557,600 seconds total; at 10 fps, to get to 2 trillion tokens, we need 6,338 years’ worth of data (rounding up).

Now, that’s assuming it runs 24/7, and is getting useful data every second of every day, which seems wildly implausible. Let’s say we 10x it, and then round up a bit more - call it 70,000 robot-years to get to our 2 trillion (useful) token llama2 dataset…

Paxton estimates that getting the 2 trillion tokens needed for a robot GPT would require ~70,000 robot-years of data collection(!), but that by collecting data from 1,000 robots and augmenting real-world data with simulated data and videos of humans performing tasks, this could be achieved in less than a year. A multi-billion-dollar project, to be sure, but one that’s achievable.
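To make the arithmetic in Paxton’s estimate explicit, here is a short Python sketch. The parameters (10 frames per second, one useful token per frame, a 2-trillion-token target, and a 10% useful-data rate standing in for “10x it”) come from the quoted passage; the function names are illustrative. It also shows that splitting 70,000 robot-years across 1,000 robots still takes 70 calendar years, which is why the sub-one-year figure depends on simulated data and human video filling most of the gap.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # 31,557,600 seconds, as in the quote


def robot_years_needed(target_tokens: float, fps: float = 10,
                       tokens_per_frame: float = 1,
                       useful_fraction: float = 1.0) -> float:
    """Robot-years of data collection needed to reach target_tokens."""
    tokens_per_robot_year = SECONDS_PER_YEAR * fps * tokens_per_frame * useful_fraction
    return target_tokens / tokens_per_robot_year


def calendar_years(robot_years: float, fleet_size: int) -> float:
    """Wall-clock years if collection is split evenly across a fleet."""
    return robot_years / fleet_size


ideal = robot_years_needed(2e12)                             # 24/7, every frame useful
realistic = robot_years_needed(2e12, useful_fraction=0.1)    # Paxton's "10x it"
print(math.ceil(ideal))                # 6338
print(math.ceil(realistic))            # 63377 (Paxton rounds up to ~70,000)
print(calendar_years(70_000, 1_000))   # 70.0
```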

Reconductoring

Rising power demand requires lots of new electrical generation capacity, but it also requires more transmission lines to bring that power where it’s needed. Unfortunately, building transmission lines in the US has become more and more difficult.

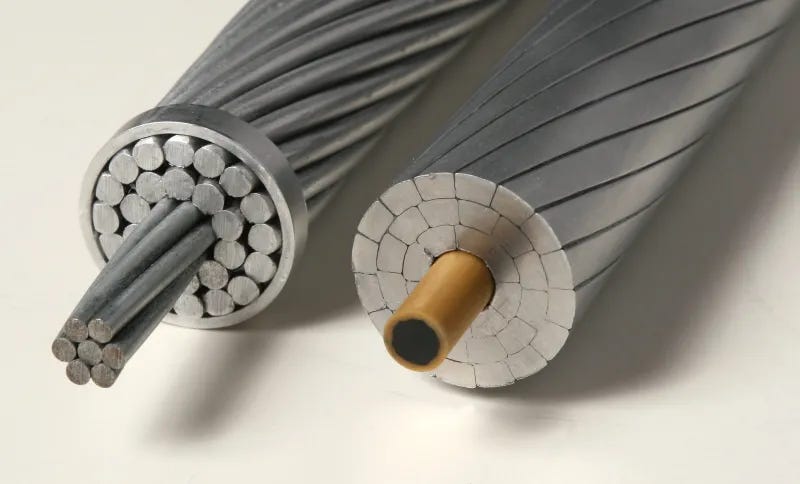

One possible way to cut this knot is to restring existing transmission lines with higher-capacity conductors. This is known as “reconductoring”, and can involve conductors with composite cores in place of steel ones, or with trapezoidal-shaped wires that can be packed more densely. An article on Hackaday explains how it works:

The point of composite cores is to provide the conductor with the necessary tensile strength and lower thermal expansion coefficient, so that heating due to loading and environmental conditions causes the cable to sag less. Controlling sag is critical to cable capacity; the less likely a cable is to sag when heated, the more load it can carry. Additionally, composite cores can have a smaller cross-sectional area than a steel core with the same tensile strength, leaving room for more aluminum in the outer layers while maintaining the same overall conductor diameter. And of course, more aluminum means these advanced conductors can carry more current.

Another way to increase the capacity in advanced conductors is by switching to trapezoidal wires. Traditional ACSR with round wires in the core and conductor layers has a significant amount of dielectric space trapped within the conductor, which contributes nothing to the cable’s current-carrying capacity. Filling those internal voids with aluminum is accomplished by wrapping round composite cores with aluminum wires that have a trapezoidal cross-section to pack tightly against each other. This greatly reduces the dielectric space trapped within a conductor, increasing its ampacity within the same overall diameter.
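The two mechanisms in the Hackaday excerpt can be put into rough numbers. The Python sketch below is a simplified illustration, not a line-rating method: it uses the parabolic sag approximation for a level span, assumes the whole conductor lengthens at its core’s thermal expansion coefficient while ignoring elastic stretch and tension changes, and treats ampacity as set by a fixed allowable heat loss per meter (reasonable only when the overall diameter and maximum temperature stay the same). The span, sag, temperature rise, aluminum areas, resistivity, and the approximate expansion coefficients for steel and carbon-fiber composite cores are all assumed, illustrative values.

```python
import math

RHO_AL = 2.82e-8  # ohm*m, approximate resistivity of conductor aluminum at 20 °C (assumed)


def length_from_sag(span_m: float, sag_m: float) -> float:
    """Parabolic approximation for a level span: L = S + 8 D^2 / (3 S)."""
    return span_m + 8 * sag_m ** 2 / (3 * span_m)


def sag_from_length(span_m: float, length_m: float) -> float:
    """Inverse of the relation above: D = sqrt(3 S (L - S) / 8)."""
    return math.sqrt(3 * span_m * (length_m - span_m) / 8)


def hot_sag(span_m: float, sag_cold_m: float, alpha_per_c: float, delta_t_c: float) -> float:
    """Sag after heating by delta_t_c, with length growing by (1 + alpha * dT).
    Simplification: ignores elastic stretch and the resulting drop in tension."""
    length_hot = length_from_sag(span_m, sag_cold_m) * (1 + alpha_per_c * delta_t_c)
    return sag_from_length(span_m, length_hot)


def resistance_ohm_per_km(area_mm2: float) -> float:
    """DC resistance per km of the aluminum layers: R' = rho / A."""
    return RHO_AL * 1000 / (area_mm2 * 1e-6)


def ampacity_ratio(area_new_mm2: float, area_old_mm2: float) -> float:
    """Same diameter -> same allowable heat loss q per meter. Since I^2 * rho / A = q,
    current scales with sqrt(A) (skin effect and core losses ignored)."""
    return math.sqrt(area_new_mm2 / area_old_mm2)


SPAN, SAG_COLD, DT = 300.0, 8.0, 75.0  # hypothetical span (m), cold sag (m), heating (°C)
for name, alpha in [("steel core", 11.5e-6), ("composite core", 1.6e-6)]:
    print(f"{name}: sag grows from {SAG_COLD:.2f} m to {hot_sag(SPAN, SAG_COLD, alpha, DT):.2f} m")

# Hypothetical aluminum areas: conventional vs. advanced conductor of equal diameter.
print(f"{resistance_ohm_per_km(400):.4f} vs {resistance_ohm_per_km(520):.4f} ohm/km")
print(f"ampacity ratio ≈ {ampacity_ratio(520, 400):.3f}")
```

Under these assumptions the heated composite-core span sags noticeably less than the steel-core one, and ampacity scales with the square root of the aluminum cross-section, so packing 30% more aluminum into the same diameter buys roughly 14% more current before any gain from being able to run the conductor hotter.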